You can drive immediate business impact by adopting data standardization best practices. Consistent, high-quality data supports better decision-making and smoother operations. Many organizations face costly downtime and inefficiency due to poor data quality.

Automated tools like FineDataLink help you streamline standardization and improve data quality across your systems.

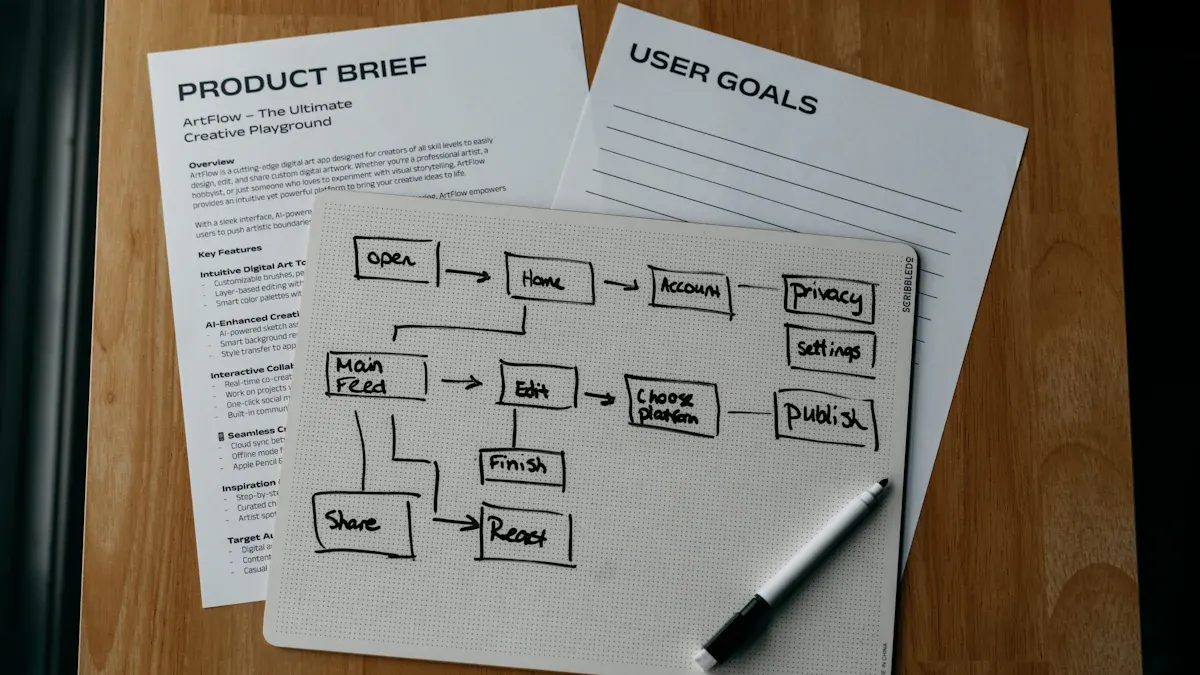

Setting clear goals is the first step when you want to standardize data in your organization. You need to know what you want to achieve and who should be involved. This foundation will help you measure progress and keep everyone aligned.

Start by identifying what you want to accomplish when you standardize data. Common objectives include making all information follow consistent formats and definitions, reducing errors and inconsistencies, providing reliable data for analysis and decision-making, and improving operational efficiency by removing redundancies.

When you standardize data, you create a strong base for your business intelligence efforts. You can use modern tools to automate these processes, which saves time and reduces mistakes.

Tip: Write down your objectives and share them with your team. Clear goals help everyone understand the value of data standardization.

You need to involve the right people to make your data standardization project successful. Each stakeholder brings unique expertise and responsibilities. The table below shows typical roles and their main duties:

| Stakeholder Role | Primary Responsibility |
|---|---|
| Functional Leaders | Use Case Definition and Process Integration |
| Head of Data Science/Analytics | Analytical Capability Development |
| Head of Data Engineering | Data Infrastructure and Operations |
| Head of Data Governance | Data Management and Compliance |
| Chief Legal Officer/General Counsel | Legal and Regulatory Compliance |
| Chief Human Resources Officer | Talent and Culture Development |
| Chief Risk Officer | Risk Management and Controls |

Involving these stakeholders early ensures you address all technical, legal, and business needs. Their input helps you choose the right tools and set realistic expectations for your project.

Mapping your data sources is a critical step in data standardization. You need to know where your data lives, how it moves, and what condition it is in before you can apply any standards. This process helps you uncover hidden issues and prepares you for a smoother transition to standardized data.

Begin by auditing all systems that store or process your data. List every database, application, and file storage location. Evaluate how data loads into each system. Check if you need to update existing data before moving it to a new environment. Create a plan to confirm that your data loads are both accurate and complete. Pay special attention to how you manage sensitive information.
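
One way to confirm that a data load is accurate and complete is to reconcile the source extract against what landed in the target. The sketch below is a minimal illustration, assuming both sides can be read as lists of dictionaries that share a key column. The function names, the `id` key, and the sample rows are hypothetical and not part of any specific tool.

```python
import hashlib


def row_fingerprint(row, columns):
    """Hash the selected column values of one row in a stable order."""
    joined = "\x1f".join(str(row.get(col, "")) for col in columns)
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()


def reconcile_load(source_rows, target_rows, key, columns):
    """Compare a source extract with the rows found in the target system."""
    source = {r[key]: row_fingerprint(r, columns) for r in source_rows}
    target = {r[key]: row_fingerprint(r, columns) for r in target_rows}
    shared = source.keys() & target.keys()
    return {
        "source_count": len(source),
        "target_count": len(target),
        # Completeness: keys that never arrived, or arrived unexpectedly.
        "missing_in_target": sorted(set(source) - set(target)),
        "unexpected_in_target": sorted(set(target) - set(source)),
        # Accuracy: keys present on both sides whose values differ.
        "changed": sorted(k for k in shared if source[k] != target[k]),
    }


# Hypothetical sample data for illustration only.
source_rows = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": "b@example.com"},
    {"id": 3, "email": "c@example.com"},
]
target_rows = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": "B@example.com"},
]

report = reconcile_load(source_rows, target_rows, key="id", columns=["email"])
print(report)
```

Here the report flags record 3 as missing and record 2 as changed. For large tables you would likely compute counts and hashes inside the database instead of in application memory.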

Common challenges during this stage include incorrect mapping of data, which often happens when business teams are not involved. Poor data quality can cause delays and force you to redo work. Undefined data ownership and unclear approval processes can also slow progress. To avoid these issues, involve business users early and define clear roles for data management.

Testing is essential. Use unit testing to check individual data elements, integration testing to see how data flows between systems, system testing to validate the entire process, and acceptance testing to ensure everything meets your requirements.

Assessing the quality of your data ensures that you start with a strong foundation. Focus on key metrics such as completeness, consistency, and validity. Make sure your data is available when needed and that each record is unique. Check for accuracy to ensure your data reflects real-world values. Timeliness matters, so verify that your data is up to date. Precision and usability also play important roles in making your data easy to understand and apply.
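
Several of these metrics can be expressed as simple ratios. The following sketch shows one possible way to score completeness, uniqueness, and validity for a single field. The field names, the email pattern, and the sample records are assumptions chosen for illustration, and real validity rules are usually stricter.

```python
import re

# Deliberately simple pattern, assumed for this example only.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def non_empty_values(records, field):
    """Collect the values of a field, skipping missing and empty entries."""
    return [r[field] for r in records if r.get(field) not in (None, "")]


def completeness(records, field):
    """Share of records where the field is present and non-empty."""
    return len(non_empty_values(records, field)) / len(records) if records else 0.0


def uniqueness(records, field):
    """Share of non-empty values that are distinct."""
    values = non_empty_values(records, field)
    return len(set(values)) / len(values) if values else 0.0


def validity(records, field, pattern):
    """Share of non-empty values that match the expected pattern."""
    values = non_empty_values(records, field)
    return sum(1 for v in values if pattern.match(v)) / len(values) if values else 0.0


# Hypothetical sample records.
records = [
    {"customer_id": "C001", "email": "ann@example.com"},
    {"customer_id": "C002", "email": "bob@example"},
    {"customer_id": "C002", "email": ""},
    {"customer_id": "C004", "email": "dee@example.com"},
]

email_completeness = completeness(records, "email")
id_uniqueness = uniqueness(records, "customer_id")
email_validity = validity(records, "email", EMAIL_PATTERN)
print(email_completeness, id_uniqueness, email_validity)
```

Tracking these ratios per source system before and after standardization gives you a baseline for measuring progress.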

You can use specialized tools to automate parts of this assessment, making the process faster and more reliable. By mapping your data sources and assessing their quality, you set the stage for successful data standardization.

Establishing strong data standardization best practices is essential for any organization that wants to improve data quality and achieve reliable results. You need to create clear documentation, use effective tools, and automate processes to ensure consistency and normalization across all your data sources.

You should start by documenting your data standardization best practices. Clear documentation helps everyone in your organization follow the same rules and reduces confusion. When you define standards, you make it easier to maintain consistency and normalization in your data.

A well-documented approach includes several key components. The table below outlines what you should include in your documentation:

| Key Component | Description |
|---|---|
| Uniform Data Definitions | Ensures consistent definitions of terms and metrics across the organization. |
| Standardized Data Formats | Specifies formats for data entry and storage to prevent inconsistencies. |
| Data Quality Metrics | Sets benchmarks for data accuracy, completeness, and timeliness. |
| Single Source of Truth | Structures data so all business units access the same datasets, minimizing conflicting information. |
| Consistent Reporting | Aligns reports across departments, fostering trust in the data. |
| Reliable Analysis | Provides confidence to analysts that the data is accurate and consistent. |
| Data Integrity | Maintains data integrity, reducing errors and redundancies. |
| Improved Decision-Making | Offers a reliable foundation for informed decisions, leading to better outcomes. |
| Operational Efficiency | Streamlines operations, reducing time and effort in data management. |
| Enhanced Collaboration | Facilitates smoother collaboration as all units adhere to the same data standards. |

You should also establish naming conventions and validation rules as part of your data standardization best practices. Shared naming conventions make fields easy to find and compare across systems, and validation rules catch bad values before they spread downstream.
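
As a rough illustration, a naming convention and a set of validation rules can both be written down as small, testable functions. The sketch below assumes a snake_case convention for column names and two made-up field rules. The `customer_id` format and the `order_total` rule are hypothetical examples, not requirements from any standard.

```python
import re

SNAKE_CASE = re.compile(r"^[a-z][a-z0-9]*(_[a-z0-9]+)*$")


def to_snake_case(name):
    """Convert names such as 'CustomerID' or 'Order Date' to snake_case."""
    name = re.sub(r"[\s\-]+", "_", name.strip())
    name = re.sub(r"([a-z0-9])([A-Z])", r"\1_\2", name)
    name = re.sub(r"([A-Z]+)([A-Z][a-z])", r"\1_\2", name)
    return name.lower()


def non_conforming(columns):
    """List the column names that break the snake_case convention."""
    return [c for c in columns if not SNAKE_CASE.match(c)]


# Hypothetical validation rules: one predicate per field.
RULES = {
    "customer_id": lambda v: isinstance(v, str) and re.fullmatch(r"C\d{3}", v) is not None,
    "order_total": lambda v: isinstance(v, (int, float)) and v >= 0,
}


def validate(record):
    """Return the fields that are missing or violate their rule."""
    return [
        field
        for field, rule in RULES.items()
        if field not in record or not rule(record[field])
    ]


columns = ["CustomerID", "Order Date", "order_total"]
print(non_conforming(columns))
print([to_snake_case(c) for c in columns])
print(validate({"customer_id": "1", "order_total": -5}))
```

Keeping rules in one shared structure such as `RULES` makes it easier to document them and to apply the same checks in every pipeline.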

Validation and quality control measures are also important. You need to validate your data against predefined rules, check for completeness and accuracy, and conduct regular quality checks. These data validation processes ensure that your standardized data meets your organization’s standards. For numeric fields, you can also apply common standardization formulas: min-max normalization rescales values to the range 0 to 1, and z-score normalization rescales them to a mean of 0 and a standard deviation of 1.
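
The two formulas are min-max normalization, x' = (x - min) / (max - min), and z-score normalization, z = (x - mean) / standard deviation. The sketch below implements both with the Python standard library. It assumes the population standard deviation (`pstdev`); using the sample standard deviation instead is an equally common choice. The `revenue` values are made-up sample data.

```python
from statistics import mean, pstdev


def min_max_normalize(values):
    """Rescale values linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values identical: no spread to rescale, so return zeros.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def z_score_normalize(values):
    """Rescale values to mean 0 and (population) standard deviation 1."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


# Hypothetical sample data.
revenue = [10, 20, 30, 40, 50]

scaled = min_max_normalize(revenue)
z_scores = z_score_normalize(revenue)
print(scaled)
print(z_scores)
```

Min-max scaling is sensitive to extreme values because a single outlier stretches the range, while z-scores keep the relative distances from the mean.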

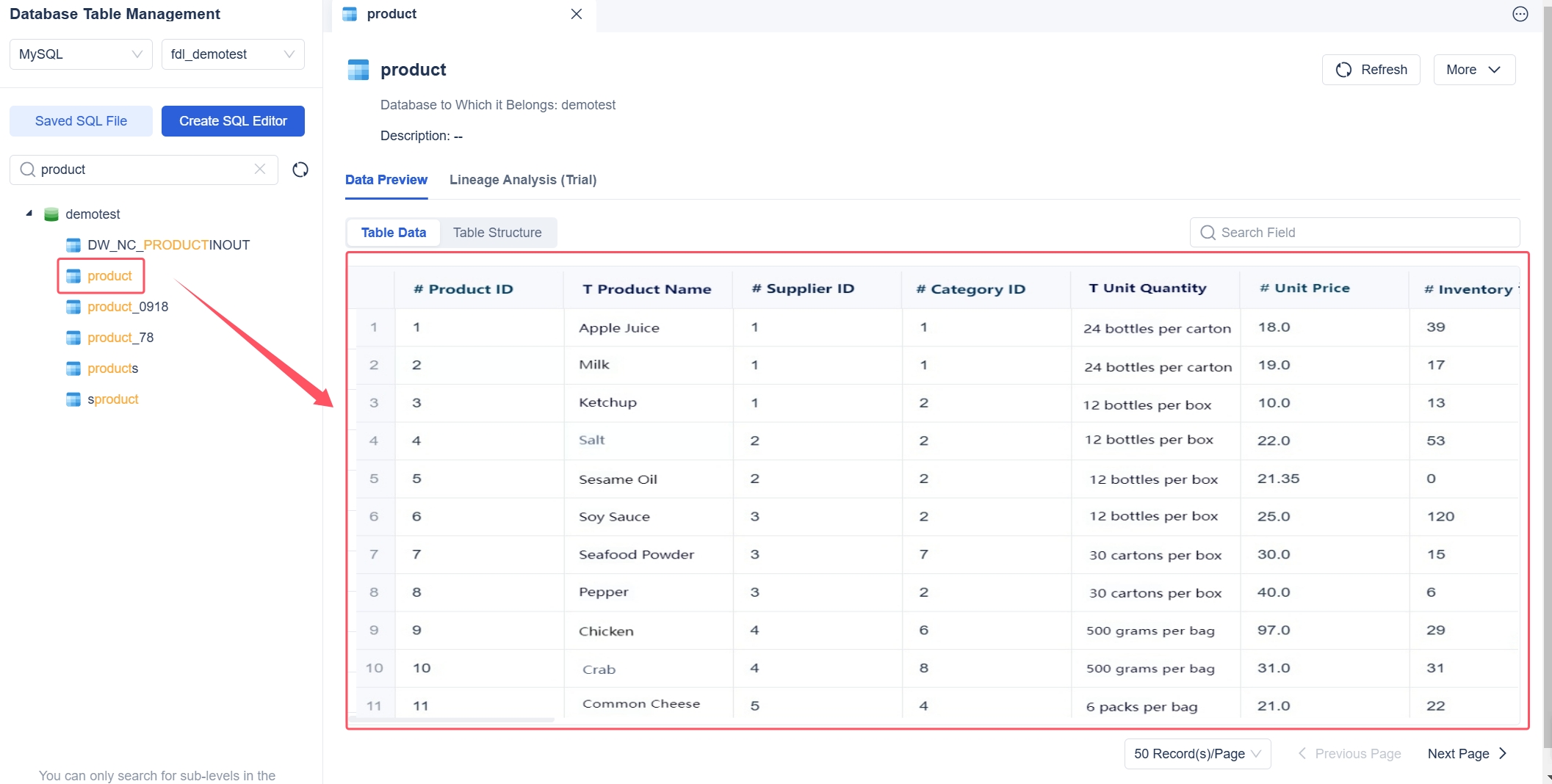

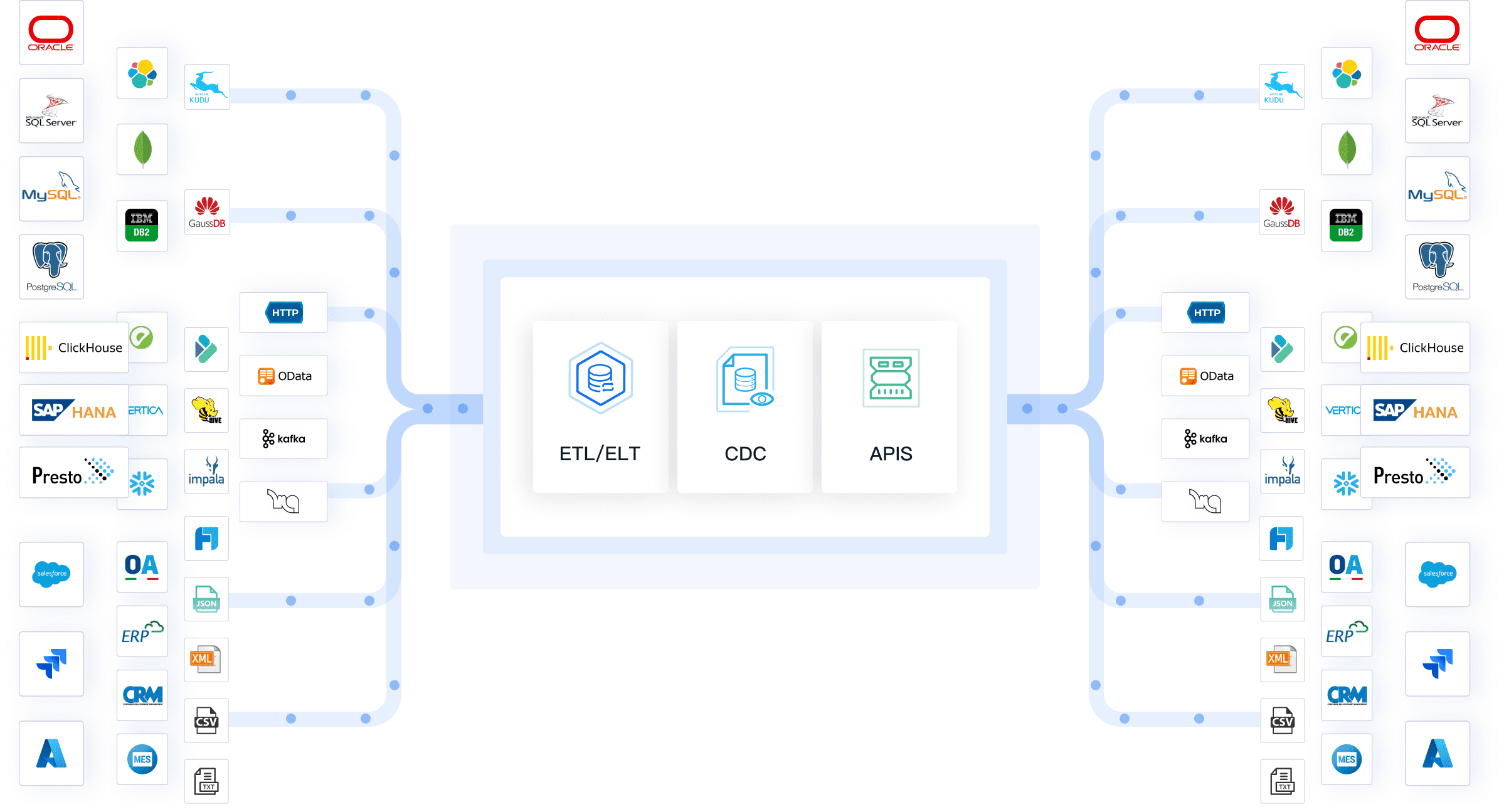

Automating your data standardization best practices can save you time and reduce manual errors. FineDataLink offers a powerful platform that helps you automate normalization, data preprocessing, and integration tasks. You can use FineDataLink to handle complex data management needs with ease.

FineDataLink provides several features that support automation and real-time synchronization. The table below highlights some of the key features:

| Feature | Description |
|---|---|
| Flexible data processing | Offers various data transformation operators for normalization and data preprocessing. |
| One-click parsing | Parses semi-structured data, such as JSON, with a single click. |
| Spark SQL support | Expands coverage of data transformation scenarios for advanced normalization. |
| Loop container | Supports looping through data for repetitive normalization tasks. |
| Dual-core engine | Enables efficient data development with both ETL and ELT processes. |
| Multiple synchronization methods | Provides five data synchronization methods based on different criteria. |
| Low-code platform | Simplifies complex data integration for real-time synchronization and normalization. |
| Task management | Supports database migration, backup, and real-time data warehouse creation. |
| Intelligent SQL editor | Allows quick task arrangement through a user-friendly interface. |

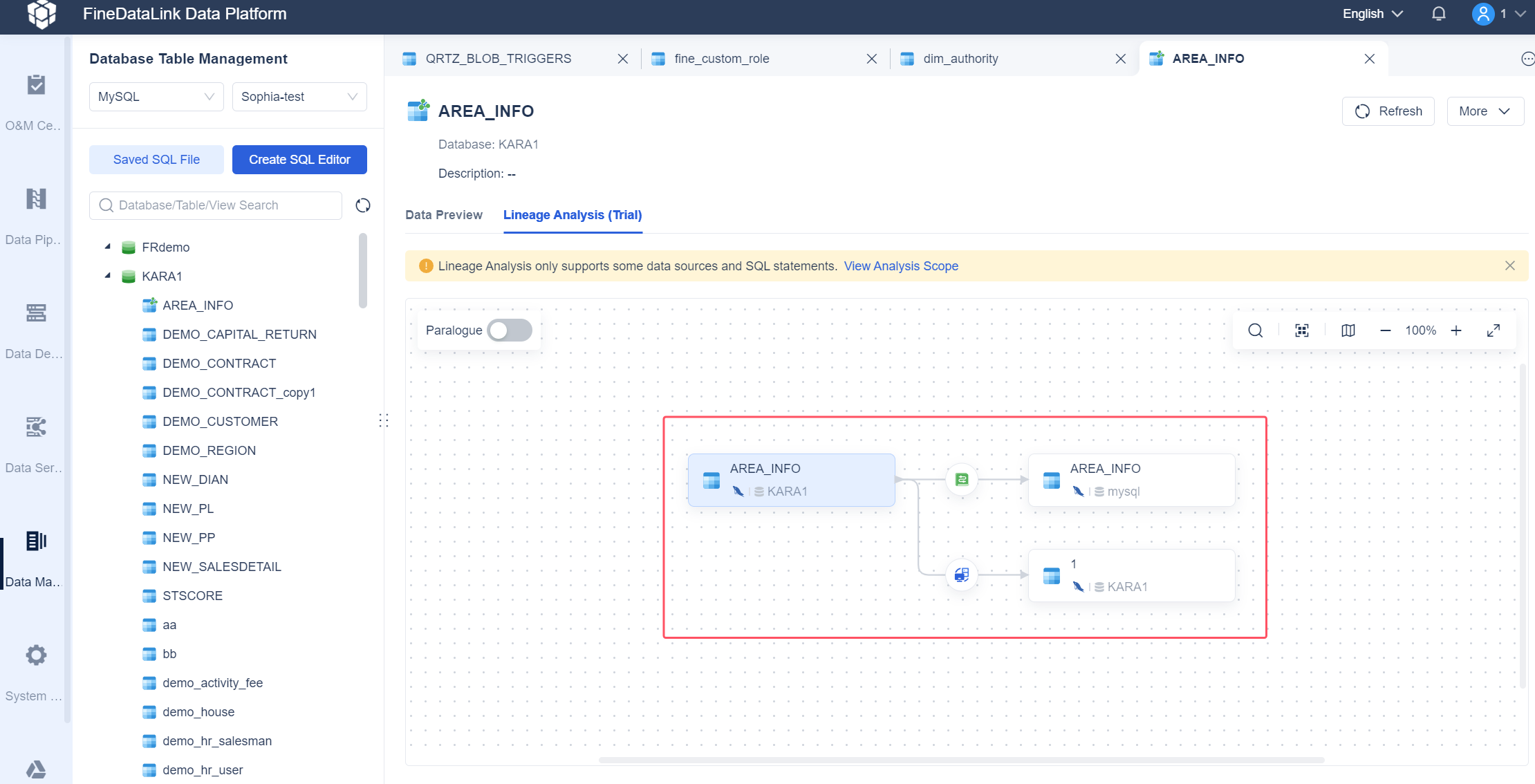

You can use FineDataLink to synchronize data across multiple databases and systems. The platform supports general synchronization nodes, data cleansing calculations, and drag-and-drop task arrangement. You can schedule tasks based on time, events, or triggers. FineDataLink also includes fault tolerance mechanisms, such as timeout limits and retry options, to ensure your data standardization best practices run smoothly.
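
To make the idea of retry options and timeout limits concrete, here is a generic Python sketch. It is not FineDataLink's API and does not reflect how the platform implements fault tolerance. The function `run_with_retries`, its parameters, and the `flaky_sync` task are hypothetical. Note that the timeout here is an overall time budget checked between attempts; it does not interrupt a task that is already running.

```python
import time


def run_with_retries(task, max_attempts=3, delay_seconds=1.0,
                     timeout_seconds=30.0, sleep=time.sleep):
    """Run task(); retry on failure until attempts or the time budget run out."""
    deadline = time.monotonic() + timeout_seconds
    last_error = None
    for attempt in range(1, max_attempts + 1):
        if time.monotonic() > deadline:
            break  # Overall time budget exhausted; stop retrying.
        try:
            return task()
        except Exception as exc:
            last_error = exc
            if attempt < max_attempts:
                sleep(delay_seconds)  # Wait before the next attempt.
    raise RuntimeError("task failed after retries or timeout") from last_error


# Hypothetical task that fails twice before succeeding.
attempts = {"count": 0}


def flaky_sync():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("temporary network issue")
    return "ok"


result = run_with_retries(flaky_sync, delay_seconds=0)
print(result, attempts["count"])
```

In practice you would usually retry only on errors known to be transient and log every failed attempt, so that repeated failures surface quickly.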

Many organizations struggle with data silos and manual processes. Data silos occur when information is stored in isolated systems that do not communicate well. This leads to fragmented data and makes normalization difficult. Manual processes often result in errors and slow down your data practices. FineDataLink helps you overcome these challenges by providing centralized data repositories, automated data validation processes, and a unified data management framework.

When you automate your data standardization best practices with FineDataLink, you improve data quality, achieve greater consistency, and streamline your operations. You can use popular formulas to standardize data, automate data preprocessing, and ensure that your data is always ready for analysis. This approach supports better decision-making and helps your organization stay competitive.

Aligning departments is essential for achieving consistent, high-quality data across your organization. When you standardize data practices, you enable teams to integrate data more effectively and support better data analysis. Centralized data management helps you align initiatives with strategic goals and prioritize resources for the greatest impact.

| Strategy | Benefit |
|---|---|
| Centralized Data Management | Aligns initiatives with strategic goals and enables resource prioritization based on impact. |

A common challenge is the lack of alignment between departments, which can lead to ineffective solutions and poor data quality. You can address this by identifying internal champions who support your data strategy. Engage these champions to ensure buy-in and promote cross-functional standards for reference data, such as product codes or country codes.
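
A cross-functional reference data standard can be as simple as one shared lookup that every department uses. The sketch below maps free-text country values to ISO 3166-1 alpha-2 codes. The mapping table is a small, hypothetical excerpt, and the function name `standardize_country` is made up for this example.

```python
# Hypothetical excerpt of a shared reference table (lowercase variant -> code).
COUNTRY_CODES = {
    "united states": "US",
    "usa": "US",
    "u.s.": "US",
    "germany": "DE",
    "deutschland": "DE",
    "japan": "JP",
}

KNOWN_CODES = set(COUNTRY_CODES.values())


def standardize_country(raw):
    """Return the ISO 3166-1 alpha-2 code for a raw value, or None if unmapped."""
    key = raw.strip().lower()
    if key.upper() in KNOWN_CODES:
        # The value is already a known code, possibly in the wrong case.
        return key.upper()
    return COUNTRY_CODES.get(key)


# Hypothetical raw inputs from different departments.
raw_values = [" USA ", "Deutschland", "de", "Atlantis"]
standardized = [standardize_country(v) for v in raw_values]
print(standardized)
```

Returning `None` for unmapped values, instead of guessing, lets you route those records to a data steward for review.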

Training your staff is critical for maintaining high data quality and supporting ongoing data analysis. You should customize training content to make it relevant to your organization’s needs. Interactive methods, such as e-learning, simulations, and group discussions, help staff engage with the material and retain knowledge. Diverse delivery methods, including in-person workshops and online modules, accommodate different learning styles and improve understanding of compliance requirements.

FineDataLink supports staff training by providing a user-friendly interface and detailed documentation, which make it easier for your team to learn the platform and apply it to data management and data analysis. When your staff understands the protocols, you achieve higher quality and consistency across all teams.

Continuous improvement is vital for sustaining data quality. You should implement standardized data collection procedures to minimize errors. Regular audits help you identify and correct inaccuracies. Data validation techniques, such as cross-referencing and statistical analysis, allow you to spot outliers and maintain reliable data analysis.

| Strategy | Description |
|---|---|
| Standardized Data Collection | Minimizes errors and inconsistencies. |
| Regular Audits | Identifies and corrects inaccuracies. |
| Data Validation Techniques | Detects outliers and ensures accuracy. |
| Personnel Training | Reinforces the importance of accuracy in data collection. |
| Technology Solutions | Uses software for automated checks and error flagging. |
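
As one example of a statistical validation technique, the sketch below flags outliers with the interquartile range (IQR) rule. The multiplier of 1.5 is a common convention, not a requirement, and the `daily_orders` values are made-up sample data. The function name `iqr_outliers` is hypothetical.

```python
from statistics import quantiles


def iqr_outliers(values, k=1.5):
    """Return values lying more than k * IQR outside the first or third quartile."""
    q1, _, q3 = quantiles(values, n=4)  # Quartile cut points.
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical sample data with one suspicious entry.
daily_orders = [10, 12, 11, 13, 12, 11, 95]

flagged = iqr_outliers(daily_orders)
print(flagged)
```

A flagged value is only a candidate for review. It may be a data entry error or a genuine spike, so pair automated checks with a manual audit step.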

FineDataLink plays a key role in ongoing validation and governance. The platform offers secure data sharing through standardized APIs, structured mechanisms for data access, and robust management features. These capabilities help you integrate data across teams, reduce duplication, and support continuous improvement in data quality and data analysis.

When you work on data standardization, you may encounter several common pitfalls that can slow progress or reduce the quality of your results. Recognizing these challenges early helps you take steps to avoid them and keep your project on track.

Inconsistent data standards can create confusion and lead to unreliable outcomes. You might see different departments using their own definitions, formats, or rules. This lack of alignment often results in fragmented metadata, operational inefficiencies, and increased costs. The table below shows how inconsistent standards can impact different industries:

| Industry | Impact of Inconsistent Data Standards |
|---|---|
| Building Materials | Fragmented metadata management and operational inefficiencies |
| Aerospace Components | Data inconsistencies, higher costs, and compliance challenges |
| Educational Institutions | Unreliable reporting and operational inefficiencies |
| Financial Advisory Firms | Poor data retrieval, analysis issues, and compliance risks |

You can avoid these issues by defining and documenting data standards, business rules, and workflows across all departments. A centralized data management system helps ensure everyone follows the same guidelines. Regular audits and clear communication support consistency and trust in your data.

Lack of buy-in from stakeholders is another frequent challenge. You may notice resistance to change, poor communication, or even mistrust among teams. These problems often arise when objectives are unclear or when people do not understand the benefits of standardization. Sometimes, trying to achieve too much at once or using outdated tools can also hinder progress.

To build support, start by clearly defining your goals and aligning them with broader business objectives. Offer ongoing training to build a culture of data literacy. Highlight the value of modern tools to end-users, making it easier for them to see the benefits. Encourage collaboration between IT and business teams to reduce conflicts and ensure everyone works toward the same outcomes.

Tip: Focus on small wins and communicate progress regularly. This approach helps build momentum and trust throughout your organization.

You can achieve measurable benefits by following data standardization best practices. Automation and ongoing monitoring help maintain data integrity and support reliable analytics. FineDataLink streamlines integration, reduces manual errors, and enables real-time insights.

| Benefit | Description |
|---|---|
| Improved collaboration | Teams work with the same data, enhancing teamwork. |
| Faster decision making | Consistent data allows for quicker, informed decisions. |
| Enhanced quality | Standardized data leads to higher reliability and accuracy. |

Start with a data audit, define clear standards, and request a FineDataLink demo to accelerate your journey.


The Author

Howard

Data Management Engineer & Data Research Expert at FanRuan
