Efficient ETL pipelines have become crucial for modern businesses that rely on seamless data processing and integration. These pipelines automate the movement, transformation, and synchronization of data, ensuring that organizations can make better decisions based on accurate insights. The demand for scalable and user-friendly ETL tools continues to grow as companies face challenges like handling diverse data formats, reducing operational costs, and integrating data across multiple systems.

For example, implementing an ETL solution helped a manufacturing company integrate over 30 suppliers while cutting costs by 43%. With software like Dell Boomi AtomSphere offering pricing tiers ranging from $2,000 to $8,000 monthly, the need for cost-effective solutions becomes even more apparent. Choosing the right tools ensures smoother workflows, real-time data updates, and improved business intelligence. Given how much these pipelines can do, how do they actually work? If you want to see one in action, you can book a demo of FineDataLink, an ETL platform, to get a better understanding of how ETL pipelines operate.

When selecting an ETL tool, scalability and performance should top your list of priorities. As your business grows, your data pipelines must handle increasing data volumes without compromising speed or accuracy. Look for tools that excel in load testing and benchmarking. These methods measure throughput, latency, and error rates, ensuring the tool can process large datasets efficiently.
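To make these three metrics concrete, here is a minimal Python sketch of a benchmark harness. It is not a feature of any particular ETL tool: the `benchmark` function, the `parse_amount` transform, and the sample records are hypothetical names chosen for illustration, and a real load test would run against production-sized data.

```python
import time
from statistics import mean


def benchmark(transform, records):
    """Run transform over records; report throughput, latency, and error rate."""
    latencies = []
    errors = 0
    start = time.perf_counter()
    for record in records:
        t0 = time.perf_counter()
        try:
            transform(record)
        except Exception:
            # Count the failure but keep going, as a load test usually would.
            errors += 1
        latencies.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - start
    total = len(latencies)
    return {
        "records": total,
        "throughput_per_s": total / elapsed if elapsed > 0 else float("inf"),
        "avg_latency_s": mean(latencies) if latencies else 0.0,
        "error_rate": errors / total if total else 0.0,
    }


def parse_amount(record):
    # Hypothetical transform: convert a string amount to a float.
    return float(record["amount"])


# Illustrative sample; the second record is deliberately malformed.
sample = [{"amount": "10.5"}, {"amount": "oops"}, {"amount": "3"}, {"amount": "7.25"}]
report = benchmark(parse_amount, sample)
print(report)
```

Running the same harness against each candidate tool's transform step, with the same input, gives a like-for-like baseline before you commit to one.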

Some ETL tools are designed to manage high data velocity and veracity, making them ideal for real-time data integration. They maintain data quality while processing updates quickly. For example, tools with schema-on-read capabilities adapt to changing data structures, which is essential for businesses dealing with diverse data formats. By choosing a scalable solution, you future-proof your data integration processes and ensure consistent performance under varying workloads.
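The schema-on-read idea mentioned above can be sketched in a few lines of Python. This is an illustration under simple assumptions (newline-delimited JSON input, a hypothetical `read_with_schema` helper, made-up records), not how any specific tool implements it: the raw data is stored as-is, and the schema is applied only when the data is read.

```python
import json

# Raw records stored without a fixed schema; fields vary from line to line.
raw_lines = [
    '{"id": 1, "name": "Ada", "email": "ada@example.com"}',
    '{"id": 2, "name": "Grace"}',
    '{"id": 3, "name": "Linus", "country": "FI"}',
]


def read_with_schema(lines, fields):
    """Apply a schema at read time: keep requested fields, default missing ones to None."""
    for line in lines:
        record = json.loads(line)
        yield {field: record.get(field) for field in fields}


# The reader decides which columns matter; a new "country" field needs no migration.
rows = list(read_with_schema(raw_lines, ["id", "name", "country"]))
for row in rows:
    print(row)
```

Because the stored data never changes shape, a new field in the source only requires a change to the list of fields requested at read time.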

A user-friendly interface can significantly reduce the learning curve for your team. Modern ETL tools often feature drag-and-drop functionality, visual data mapping, and no-code options. These features simplify complex data integration tasks, making them accessible even to non-technical users.

For instance, some popular open-source ETL tools provide intuitive dashboards that allow you to monitor data pipelines in real time. This ease of use not only boosts productivity but also minimizes errors during data transformation and processing. When evaluating tools, prioritize those with detailed documentation and step-by-step tutorials. These resources empower your team to maximize the tool's potential without extensive training.

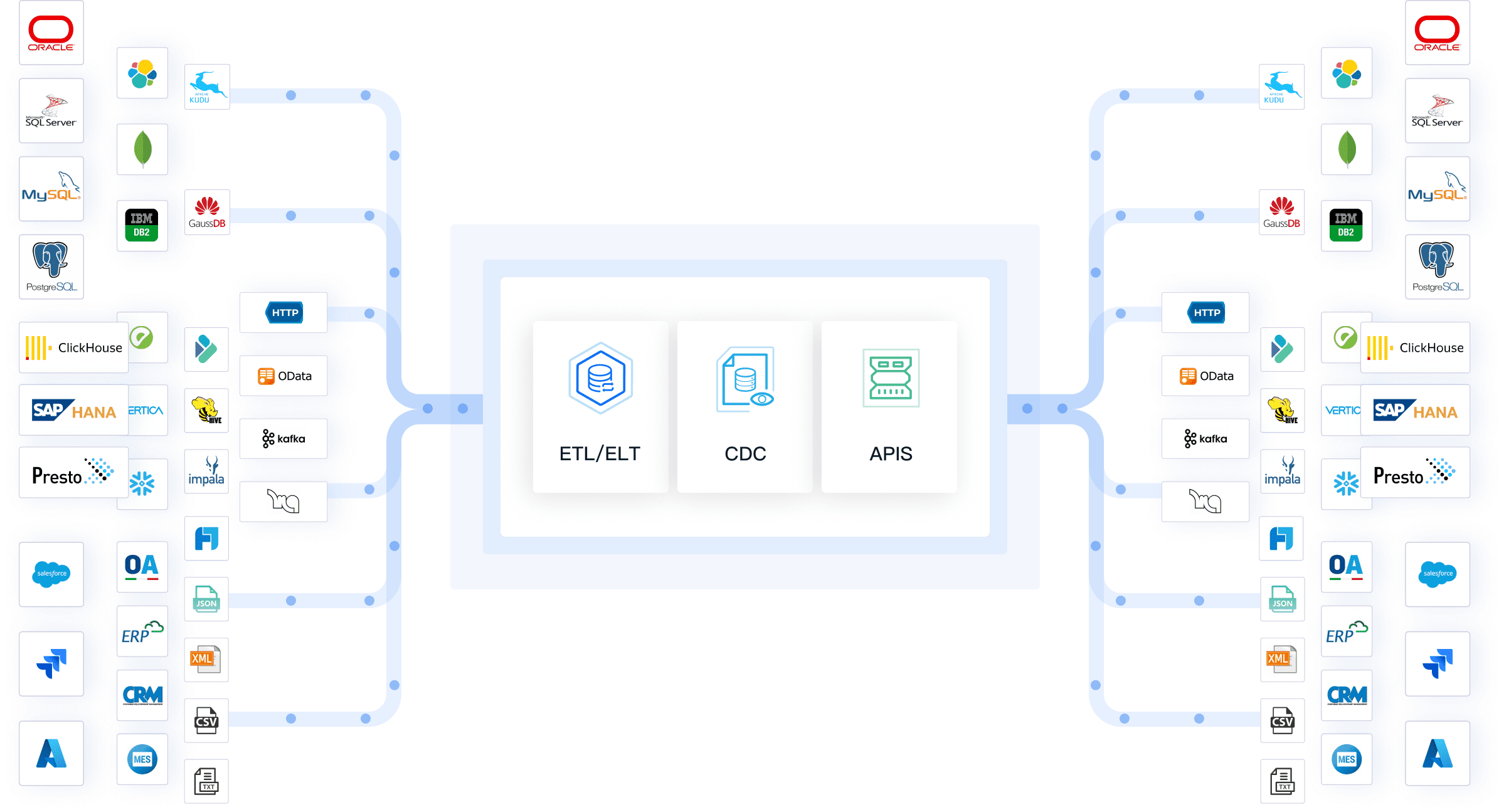

Effective ETL tools must seamlessly connect with various data sources and platforms. Whether you're working with on-premise databases, cloud systems, APIs, or streaming platforms, the tool should support diverse connectivity options. This flexibility ensures smooth data flow across your organization's ecosystem.

For example, some ETL tools offer compatibility with popular analytics tools and data warehouses, enabling holistic data analysis. A comparative evaluation of open-source ETL tools reveals that those with robust integration capabilities often outperform their counterparts. By choosing a tool with extensive connectivity, you streamline your data integration platforms and enhance overall efficiency.

When evaluating ETL tools, understanding their cost and licensing options is essential. These tools often come with different pricing models, which can significantly impact your budget. You should consider the following factors to make an informed decision:

For example, some open-source ETL tools offer free licensing but may require additional investment in support and customization. On the other hand, enterprise-grade ETL tools often include robust support and scalability features, justifying their higher price tags. By carefully analyzing these aspects, you can choose a cost-effective solution that aligns with your data integration needs.

The availability of support and community resources plays a crucial role in the success of your ETL implementation. A strong support system ensures that you can resolve issues quickly and keep your data pipelines running smoothly.

Many ETL tools provide professional support through dedicated teams or service-level agreements (SLAs). This type of support is particularly valuable for businesses with complex data integration requirements. Additionally, tools with active user communities offer a wealth of shared knowledge. Forums, blogs, and online tutorials can help you troubleshoot problems and discover best practices.

Popular open-source ETL tools often rely on community-driven support. While this can be cost-effective, it may not always provide the immediate assistance you need. In contrast, enterprise ETL tools typically include 24/7 support and detailed documentation, ensuring a smoother experience.

When choosing an ETL tool, evaluate the quality of its support and the size of its user community. Tools with extensive resources, such as step-by-step guides and video tutorials, can reduce your reliance on external help. For instance, FineDataLink offers a low-code platform with drag-and-drop functionality and comprehensive documentation, making it easier for users to build and manage data pipelines. By prioritizing tools with robust support, you can enhance your team's productivity and ensure the long-term success of your data integration efforts.

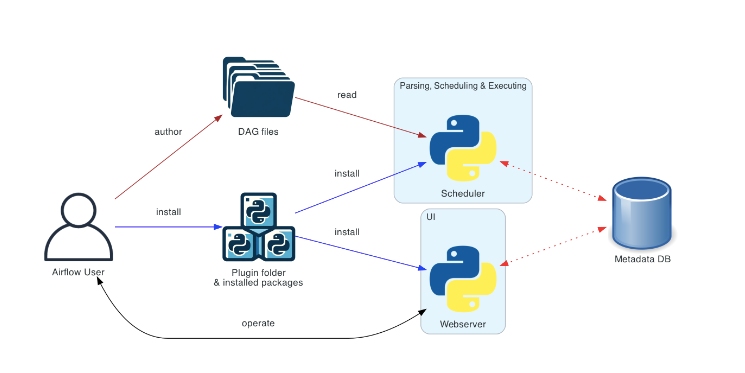

Apache Airflow stands out as one of the most popular open-source ETL tools for orchestrating complex workflows. Its flexibility allows you to design, schedule, and monitor workflows as directed acyclic graphs (DAGs). This tool is ideal for managing data pipelines that require high customization and scalability.
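To illustrate what modeling a workflow as a DAG buys you, the sketch below uses Python's standard-library `graphlib` rather than Airflow itself, so it runs without any installation. The task names are hypothetical. In Airflow, the same dependencies would be declared between operators inside a DAG object, and the scheduler would work out the run order.

```python
from graphlib import TopologicalSorter

# Each key is a task; its value is the set of tasks it depends on.
# Task names are illustrative only.
dependencies = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "load": {"transform"},
}


def run_order(deps):
    """Return one valid execution order; raises graphlib.CycleError on a cycle."""
    return list(TopologicalSorter(deps).static_order())


order = run_order(dependencies)
print(order)
```

The "acyclic" part matters: if `load` also fed back into `extract_orders`, no valid order would exist and the sorter would raise an error instead of scheduling anything.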

Airflow's robust performance metrics validate its capabilities. For example, it ensures data consistency through row count checks, NULL checks, and data type validation. These features help you maintain data quality across your pipelines. Additionally, its ability to monitor random data points over time enables anomaly detection, ensuring smooth data processing.
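The three checks named above (row counts, NULLs, and data types) are simple to express. The following is a plain-Python sketch of the idea, not Airflow's own operators; the `check_batch` function and the sample batch are assumptions made for illustration. In practice, a function like this could run as one task in a pipeline and fail the run when problems are found.

```python
def check_batch(rows, expected_count, required, types):
    """Return a list of human-readable problems found in a batch of dict rows."""
    problems = []
    # Row count check: did we receive as many rows as the source reported?
    if len(rows) != expected_count:
        problems.append(f"row count {len(rows)} != expected {expected_count}")
    for i, row in enumerate(rows):
        # NULL check on required columns.
        for column in required:
            if row.get(column) is None:
                problems.append(f"row {i}: {column} is NULL")
        # Data type validation on non-NULL values.
        for column, expected_type in types.items():
            value = row.get(column)
            if value is not None and not isinstance(value, expected_type):
                actual = type(value).__name__
                problems.append(
                    f"row {i}: {column} is {actual}, expected {expected_type.__name__}"
                )
    return problems


# Hypothetical batch with one NULL amount and one mistyped id.
batch = [
    {"id": 1, "amount": 9.99},
    {"id": 2, "amount": None},
    {"id": "3", "amount": 4.5},
]
issues = check_batch(
    batch,
    expected_count=3,
    required=["id", "amount"],
    types={"id": int, "amount": float},
)
for issue in issues:
    print(issue)
```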

You can also benefit from Airflow's active community support. The tool's open-source nature means you have access to a wealth of shared knowledge, including forums, blogs, and tutorials. This makes it easier to troubleshoot issues and optimize your workflows.

Tip: Apache Airflow excels in environments where you need to manage recurring schedules and complex dependencies. Its performance testing capabilities help establish baselines and track improvements over time.

Talend Data Integration is a versatile ETL tool that simplifies data integration tasks with its user-friendly interface and powerful features. It supports both ETL and ELT processes, giving you the flexibility to perform transformations either in-pipeline or in-destination.
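The difference between in-pipeline (ETL) and in-destination (ELT) transformation is easiest to see side by side. The sketch below uses Python's built-in `sqlite3` as a stand-in destination; it is not Talend code, and the table names and sample rows are hypothetical.

```python
import sqlite3

# Hypothetical raw extract: names in lowercase, amounts as strings.
raw = [("alice", "10.50"), ("bob", "3.25")]

conn = sqlite3.connect(":memory:")

# ETL: transform in the pipeline, then load the finished rows.
conn.execute("CREATE TABLE sales_etl (customer TEXT, amount REAL)")
transformed = [(name.upper(), float(amount)) for name, amount in raw]
conn.executemany("INSERT INTO sales_etl VALUES (?, ?)", transformed)

# ELT: load the raw rows first, then transform inside the destination with SQL.
conn.execute("CREATE TABLE sales_raw (customer TEXT, amount TEXT)")
conn.executemany("INSERT INTO sales_raw VALUES (?, ?)", raw)
conn.execute(
    "CREATE TABLE sales_elt AS "
    "SELECT UPPER(customer) AS customer, CAST(amount AS REAL) AS amount "
    "FROM sales_raw"
)

query = "SELECT customer, amount FROM {} ORDER BY customer"
etl_rows = conn.execute(query.format("sales_etl")).fetchall()
elt_rows = conn.execute(query.format("sales_elt")).fetchall()
print(etl_rows)
print(elt_rows)
```

Both paths end with the same rows. The trade-off is where the work happens: ETL spends pipeline compute and loads only clean data, while ELT keeps the raw copy in the destination and relies on its SQL engine for the transformation.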

One of Talend's key strengths lies in its ability to handle schema evolution automatically. This reduces manual intervention and ensures your data pipelines adapt to changing data structures. Talend also supports multi-destination loading, allowing you to load data into multiple systems simultaneously.

When compared to competitors like Informatica and Microsoft SQL Server Integration Services (SSIS), Talend offers a balance between cost and functionality. While some alternatives like Hevo Data and Stitch provide cheaper options, Talend's comprehensive feature set justifies its pricing for businesses with complex data integration needs.

Note: Talend's extensive library of connectors and compliance with regulations like GDPR make it a reliable choice for organizations prioritizing data security and governance.

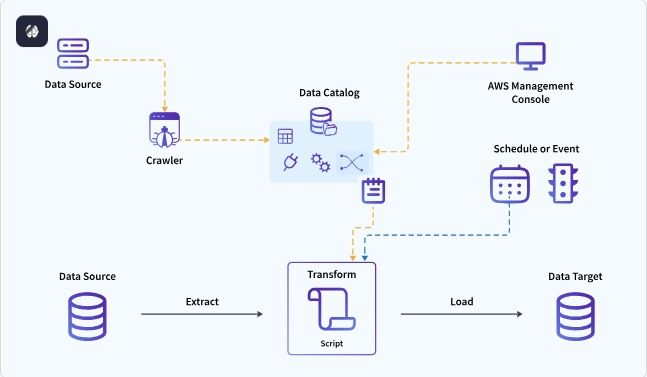

AWS Glue is a cloud-based ETL tool designed to simplify data integration and processing in the AWS ecosystem. Its serverless architecture eliminates the need for infrastructure management, allowing you to focus on building and optimizing your data pipelines.

AWS Glue's scalability and performance make it one of the top ETL tools for 2025. It has been shown to reduce reporting times by 50%, enabling faster access to critical insights. The tool also improves operational efficiency by allowing teams to query and visualize data from multiple sources seamlessly.

Future updates to AWS Glue are expected to enhance its job orchestration tools and improve processing times for large-scale data jobs. These advancements will make it even easier for you to manage complex ETL workflows.

Tip: AWS Glue is an excellent choice if you're already using AWS services. Its seamless integration with the AWS ecosystem ensures a smooth data flow across your applications.

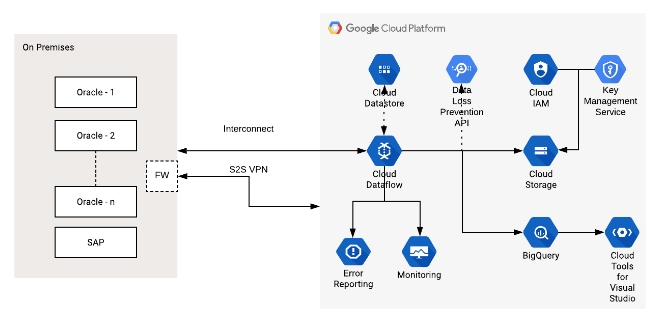

Google Cloud Dataflow is a fully managed, cloud-based ETL tool designed for real-time and batch data processing. It excels in automating the execution of data pipelines, making it a top choice for businesses seeking scalability and efficiency. With its serverless architecture, you can focus on building robust data workflows without worrying about infrastructure management.

One of the standout features of Google Cloud Dataflow is its ability to handle both streaming and batch data processing. This dual capability ensures that your data pipelines can process real-time updates while managing historical data efficiently. For example, you can use it to analyze live customer interactions while simultaneously running reports on past trends.

The tool integrates seamlessly with other Google Cloud services, such as BigQuery and Cloud Storage, enabling a cohesive data ecosystem. Its support for the Apache Beam SDK further enhances its flexibility, allowing you to write pipelines in languages such as Java and Python. This makes it an excellent choice for teams with diverse technical expertise.

Tip: If your organization relies heavily on Google Cloud services, Google Cloud Dataflow can streamline your data integration and processing tasks, ensuring a unified and efficient workflow.

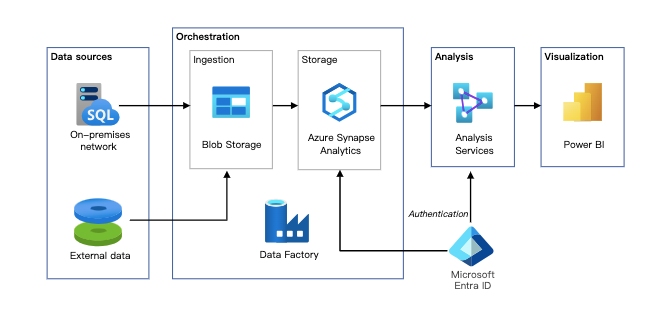

Microsoft Azure Data Factory (ADF) is a cloud-based ETL tool that simplifies data integration and transformation across various platforms. Its pay-as-you-go pricing model ensures cost-effectiveness, making it accessible for businesses of all sizes. You only pay for what you use, eliminating upfront costs and licensing fees.

ADF's user-friendly interface features drag-and-drop functionality, allowing even non-technical users to design and manage data pipelines. This ease of use reduces the learning curve and accelerates deployment. Additionally, its scalability ensures that your pipelines can handle growing data streams without additional expenses.

| Feature | Description |
|---|---|
| Cloud-Based | Eliminates the need for costly on-premise infrastructure, operating entirely in the Azure cloud. |
| Scalability | Adapts to growing data streams without additional costs, handling data of any size efficiently. |
| Ease of Use | User-friendly interface with drag-and-drop functionality, accessible to non-coders. |
| Cost-Effectiveness | Pay-as-you-go pricing model ensures you only pay for what you use, making it competitive. |

Microsoft manages the Azure Integration Runtime, which simplifies deployment and ensures efficient data movement across regions. This fully managed approach reduces complexity and enhances performance monitoring. ADF also supports a wide range of data sources, including on-premise databases, cloud platforms, and APIs, making it a versatile choice for data integration.

Note: ADF's combination of scalability, cost-effectiveness, and ease of use makes it one of the top ETL tools for businesses looking to optimize their data pipelines.

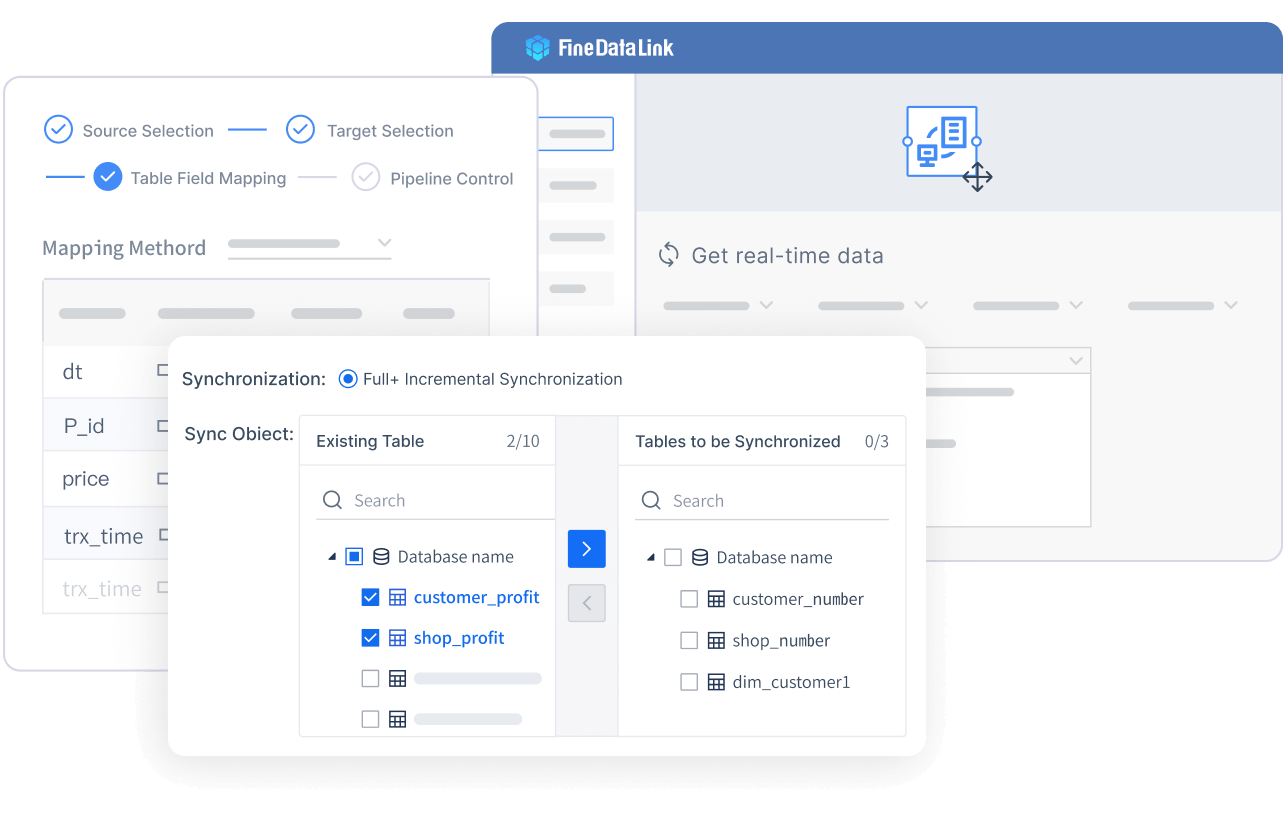

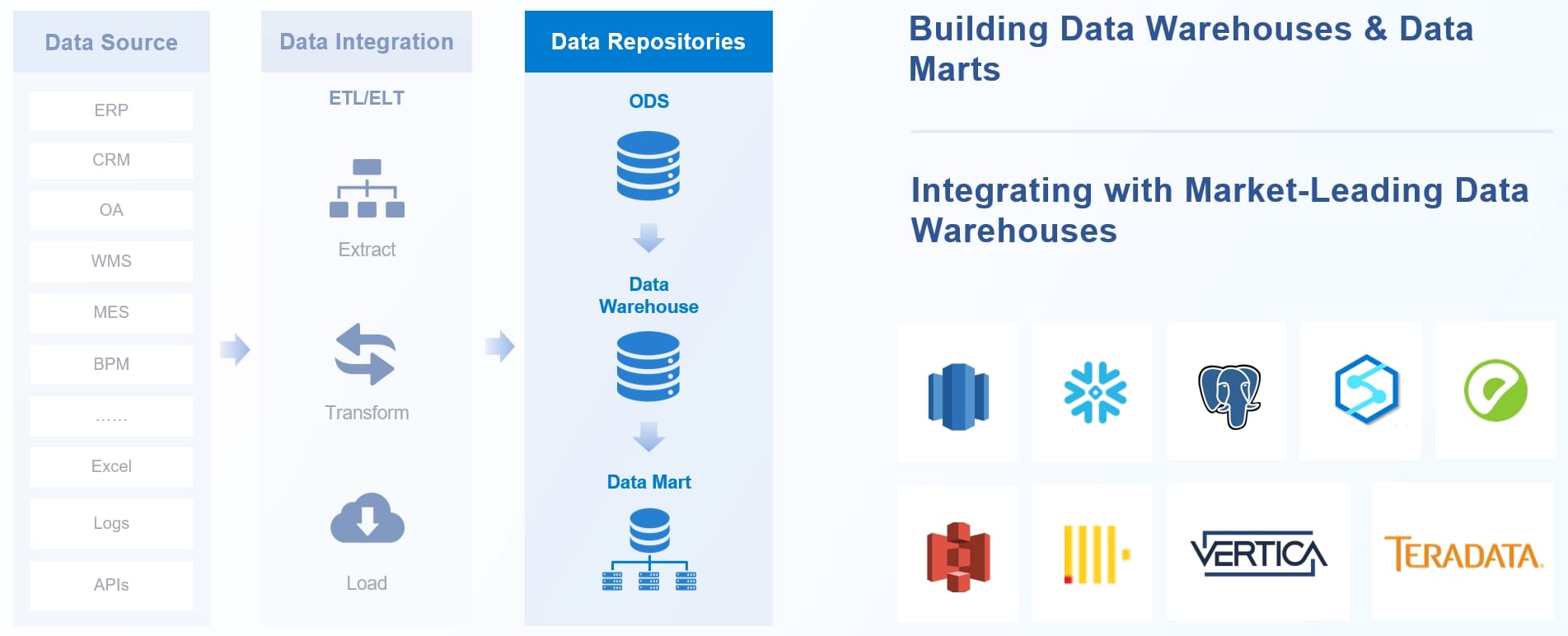

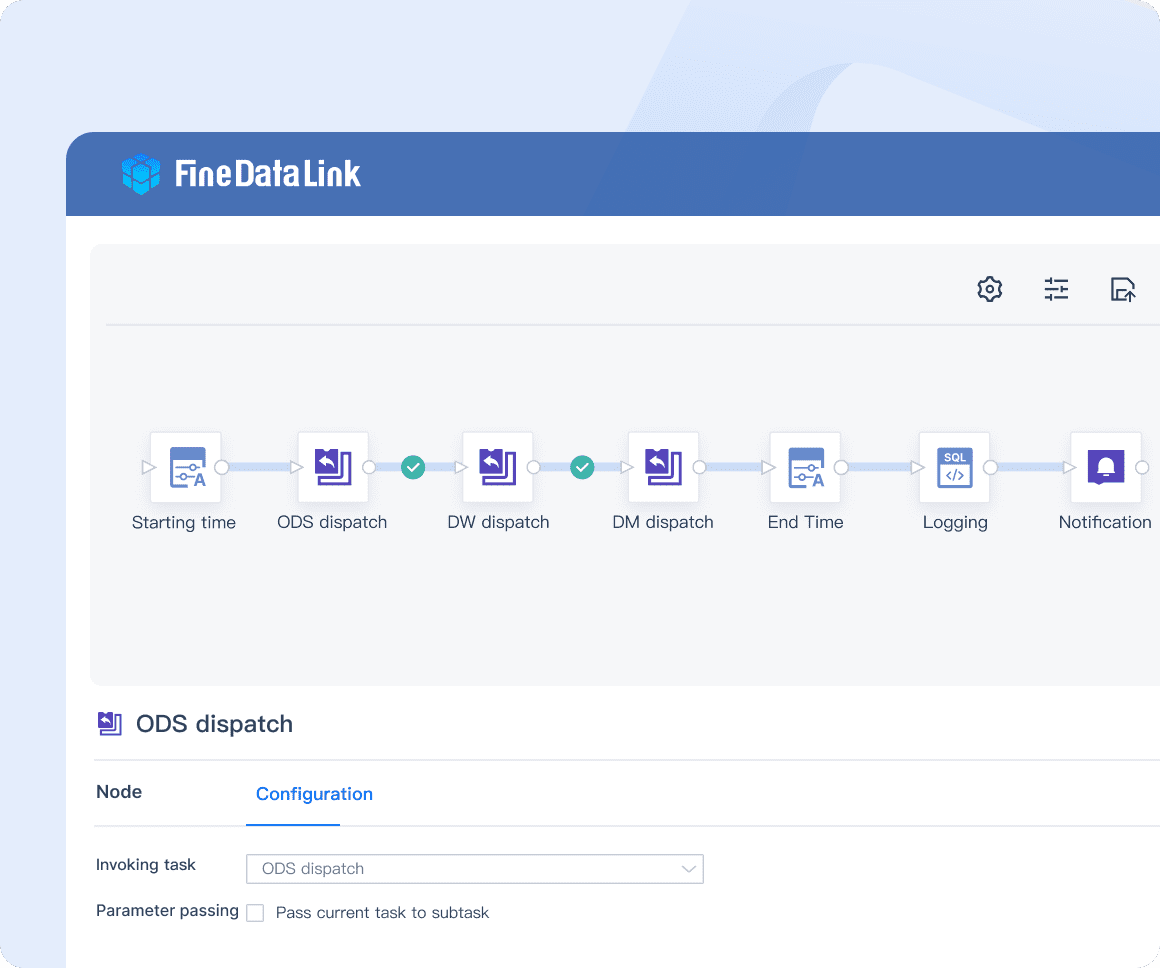

FineDataLink is an enterprise-level data integration platform designed to address the challenges of modern data workflows. It combines real-time data synchronization, advanced ETL/ELT capabilities, and API integration into a single, low-code solution. This makes it an ideal choice for businesses aiming to streamline their data pipelines and enhance operational efficiency.

One of FineDataLink's core strengths is its ability to synchronize data across multiple tables in real time with minimal latency. This feature is particularly useful for database migration, backup, and building real-time data warehouses. Additionally, its ETL/ELT functions allow you to preprocess data efficiently, ensuring high-quality outputs for analytics and reporting.

The platform's low-code interface simplifies complex data integration tasks. With drag-and-drop functionality and detailed documentation, you can build and manage data pipelines without extensive coding knowledge. FineDataLink also supports over 100 common data sources, enabling seamless integration across diverse systems.

Key features of FineDataLink include:

Callout: FineDataLink's visual and modern interface makes it 10,000 times more user-friendly than many other tools. Its cost-effective pricing and extensive connectivity options make it a standout choice for businesses dealing with complex data integration needs.

If you're looking for a comprehensive solution to build both offline and real-time data warehouses, FineDataLink offers the tools and flexibility you need. Its focus on automation and efficiency ensures that your data pipelines remain robust and scalable, even as your business evolves.

Understanding your business needs is the first step in selecting the right ETL tool. Start by identifying the specific challenges your organization faces with data integration. For example, do you need to process real-time data, or are you focused on batch processing? Clearly defining your requirements ensures that the tool you choose aligns with your goals.

A study comparing ETL tools highlights the importance of matching capabilities to business impact. Tools optimized for ETL processes can significantly enhance efficiency. For instance, a major retailer improved sales by over 5% by enhancing customer data quality. Similarly, a healthcare provider reduced processing times by 50% through better data accuracy.

Tip: Create a list of must-have features, such as real-time synchronization or API integration, to guide your decision-making process.

Your team's technical expertise plays a crucial role in determining the best ETL tool for your organization. If your team includes experienced developers, open-source ETL tools like Apache Airflow may be a good fit. These tools offer flexibility but often require coding knowledge.

For teams with limited technical skills, low-code platforms like FineDataLink simplify data integration. These tools feature drag-and-drop interfaces and detailed documentation, making them accessible to non-technical users. By choosing a tool that matches your team's skill level, you can reduce training time and improve productivity.

Note: Consult with your team to understand their comfort level with different tools. This ensures a smoother implementation process.

Budget is a critical factor when selecting an ETL tool. Costs can vary widely depending on the tool's features and your organization's needs. For small projects, open-source ETL tools may offer a cost-effective solution. However, these tools often require additional investment in support and customization.

For larger projects, enterprise-grade tools provide robust features but come with higher costs. For example, initial implementation for a medium-sized project can range from $15,000 to $30,000, with annual costs reaching $20,000. A detailed cost analysis helps you balance affordability with functionality.

| Category | Cost Range |
|---|---|
| Licensing | $0 (open source) to ~$50,000–$75,000 (annual) |
| Infrastructure | Pay-per-use (~$10,000/year) or ~$25,000 (server setup) |
| Total First-Year Cost | ~$18,000–$190,000 |

Callout: Consider long-term costs, including maintenance and scalability, to ensure your chosen tool remains viable as your business grows.

Testing ETL tools through free trials or demos is a smart way to evaluate their capabilities before committing to a purchase. Many vendors offer trial periods that allow you to explore the tool’s features, interface, and performance under real-world conditions. Taking advantage of these trials helps you identify whether the tool aligns with your business needs.

When testing a tool, focus on key aspects like ease of use, scalability, and integration capabilities. For example, try creating a small data pipeline to assess how intuitive the interface feels. If the tool offers drag-and-drop functionality, see how quickly you can build and deploy a workflow. Additionally, test its ability to connect with your existing data sources and platforms.
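A small reference pipeline gives you something to compare a trial tool against. The sketch below is one possible baseline in plain Python using only the standard library; the CSV content, the `orders` table, and the `extract`, `transform`, and `load` functions are all hypothetical. Rebuilding the same flow in the tool under evaluation shows how much effort its interface actually saves.

```python
import csv
import io
import sqlite3

# Hypothetical source data; order 2 has a missing amount.
CSV_TEXT = """order_id,customer,amount
1,alice,10.50
2,bob,
3,carol,7.00
"""


def extract(text):
    """Read CSV text into a list of dicts keyed by the header row."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Drop rows with no amount, cast types, and title-case customer names."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # skip incomplete records
        cleaned.append(
            (int(row["order_id"]), row["customer"].title(), float(row["amount"]))
        )
    return cleaned


def load(rows, conn):
    """Write the cleaned rows to the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(CSV_TEXT)), conn)
loaded = conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall()
print(loaded)
```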

Tip: Use the trial period to simulate your most common data integration scenarios. This will give you a clear picture of how the tool performs in your specific environment.

Some tools, like FineDataLink, provide detailed documentation and step-by-step guides during the trial phase. These resources can help you understand the tool’s full potential and reduce the learning curve for your team. By thoroughly testing multiple tools, you can make an informed decision that minimizes risks and maximizes value for your organization.

Choosing an ETL tool isn’t just about solving immediate challenges. You need to ensure the tool aligns with your long-term data goals. Start by identifying how the tool can support your organization’s growth and evolving data needs. For instance, if you plan to expand your data sources or adopt real-time analytics, the tool must offer scalability and advanced features.

Long-term alignment also impacts your organization’s performance and financial outcomes. Tools that enhance data quality and accessibility can drive significant benefits. The table below highlights key metrics to consider:

| Metric | Description |
|---|---|
| Revenue Impact | Evaluates how data-driven insights help increase revenue streams or create new sales opportunities. |
| Reduced Downtime | Estimates how data insights reduce operational disruptions, improving efficiency and lowering costs. |
| Customer Retention | Shows how data can enhance customer loyalty and retention by identifying at-risk consumers. |

Callout: Tools like FineDataLink, with real-time synchronization and API integration, can help you achieve these metrics by ensuring seamless data flow and accessibility.

By aligning your ETL tool with long-term goals, you can future-proof your data strategy. This approach not only improves operational efficiency but also positions your organization for sustained success in a data-driven world.

Choosing the right ETL tool can transform how your organization handles data. Each tool offers unique strengths tailored to different needs:

As businesses adopt cloud technologies and real-time processing, the demand for scalable ETL tools continues to grow. Aligning your choice with your business goals ensures smoother workflows and better analytics. Evaluate tools based on scalability, ease of use, and integration capabilities to make an informed decision.

Tip: Tools like FineDataLink combine real-time synchronization and low-code interfaces, making them ideal for modern data integration needs.

The Author

Howard

Data Management Engineer & Data Research Expert at FanRuan
