An ETL (extract, transform, load) data pipeline extracts data from source systems, transforms it into a consistent format, and loads it into a target such as a data warehouse. You rely on these pipelines to streamline data processing, making it more efficient and less error-prone. They play a crucial role in maintaining data quality, enabling informed decisions based on unified datasets. With tools like FineDataLink, you can automate data movement, reducing manual intervention and human errors. FineBI further enhances your ability to analyze and visualize data, empowering you to make strategic decisions with confidence.

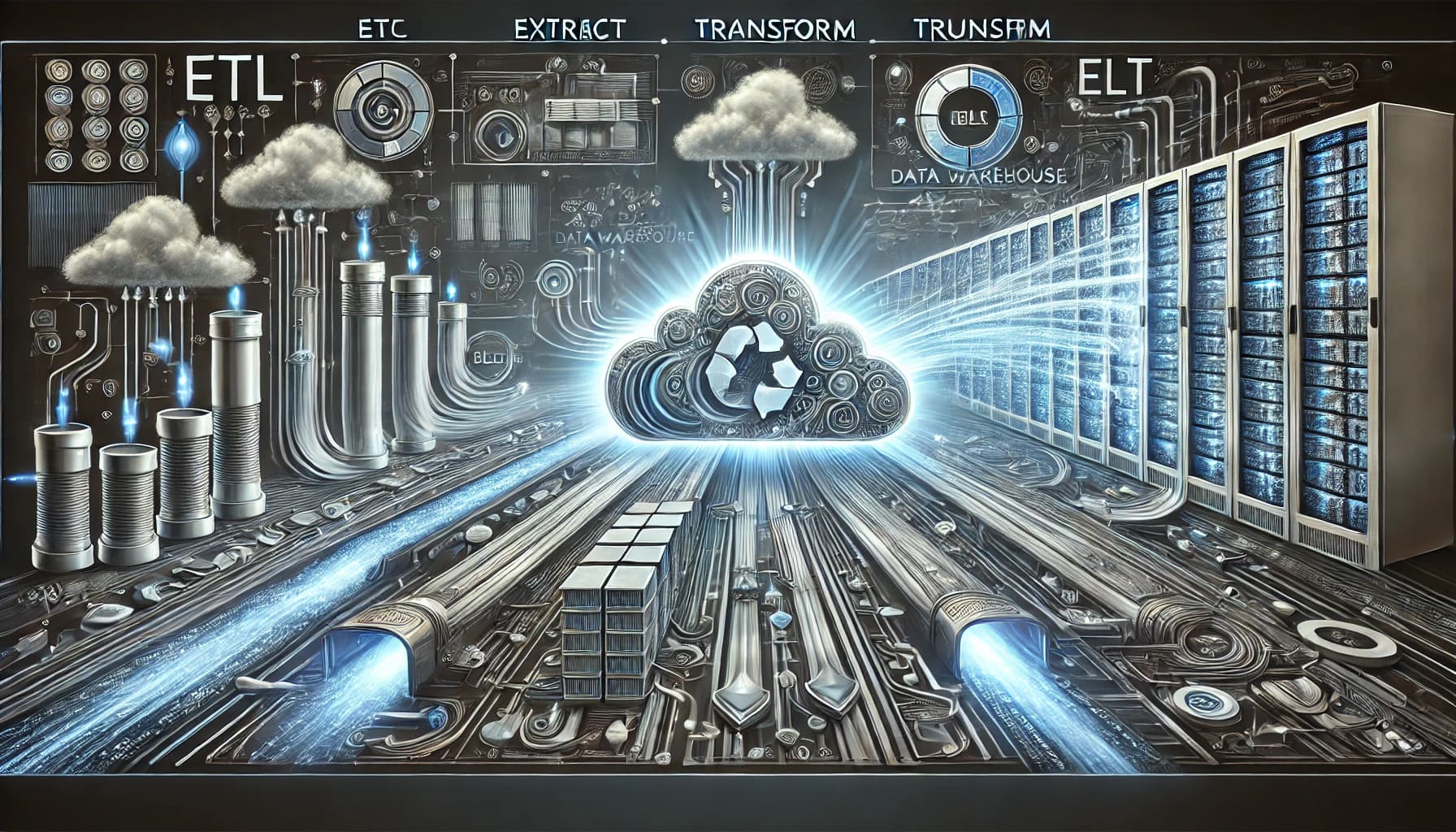

Understanding how an ETL data pipeline operates is crucial for effective data management. This process involves three main phases: Extract, Transform, and Load. Each phase plays a vital role in ensuring that your data is ready for analysis and decision-making.

In the extract phase, you gather data from various sources. These sources can include databases, cloud services, or even flat files. The goal is to collect all relevant data needed for analysis. You rely on ETL tools to automate this process, ensuring that data extraction is efficient and accurate. By doing so, you minimize errors and save time.
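To make the extract phase concrete, here is a minimal sketch that pulls rows from two kinds of sources, a database and a flat file. It assumes an in-memory SQLite table named `orders` and an inline CSV string as stand-ins for real systems; the function names are hypothetical and do not come from any particular ETL tool.

```python
import csv
import io
import sqlite3


def extract_from_database(conn: sqlite3.Connection, query: str) -> list[dict]:
    """Run a query and return each row as a dict keyed by column name."""
    cursor = conn.execute(query)
    columns = [desc[0] for desc in cursor.description]
    return [dict(zip(columns, row)) for row in cursor.fetchall()]


def extract_from_csv(text: str) -> list[dict]:
    """Parse CSV text (for example, a flat-file export) into a list of dicts."""
    return list(csv.DictReader(io.StringIO(text)))


# Stand-in sources: an in-memory database table and an inline CSV export.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 19.5), (2, 5.0)])

db_rows = extract_from_database(conn, "SELECT id, amount FROM orders ORDER BY id")
csv_rows = extract_from_csv("id,amount\n3,7.25\n")
print(db_rows + csv_rows)
```

Note that the CSV values arrive as strings while the database values keep their types. Reconciling differences like this is exactly what the transform phase is for.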

Once you have extracted the data, the next step is transformation. During this phase, you convert the raw data into a format suitable for analysis. This involves cleaning, filtering, and applying business rules to the data. You might also aggregate or summarize data to make it more meaningful. The transformation phase ensures that your data is consistent and reliable, which is essential for generating accurate insights.
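The cleaning, filtering, business-rule, and aggregation steps described above can be sketched in plain Python. The sample rows, the field names, and the rule "only completed orders count toward revenue" are all hypothetical and stand in for whatever rules your business defines.

```python
from collections import defaultdict

# Hypothetical raw rows as they might arrive from extraction.
raw = [
    {"region": " north ", "amount": "120.50", "status": "complete"},
    {"region": "South", "amount": "", "status": "complete"},       # missing amount
    {"region": "north", "amount": "80", "status": "cancelled"},    # excluded by rule
    {"region": "SOUTH", "amount": "40.25", "status": "complete"},
]


def clean(row):
    """Normalize text fields and convert the amount; return None if the row is unusable."""
    if not row["amount"].strip():
        return None
    return {
        "region": row["region"].strip().title(),
        "amount": float(row["amount"]),
        "status": row["status"].strip().lower(),
    }


def transform(rows):
    # Cleaning: drop rows that cannot be repaired.
    cleaned = [r for r in (clean(row) for row in rows) if r is not None]
    # Filtering / business rule (assumed): only completed orders count toward revenue.
    completed = [r for r in cleaned if r["status"] == "complete"]
    # Aggregation: total revenue per region.
    totals = defaultdict(float)
    for r in completed:
        totals[r["region"]] += r["amount"]
    return dict(totals)


print(transform(raw))
```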

The final phase is loading the transformed data into a data warehouse or another centralized repository. This step allows you to store and manage your data efficiently. By having a single source of truth, you can easily access and analyze your data whenever needed. The load phase completes the ETL process, enabling you to leverage your data for business intelligence, analytics, and machine learning.
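A minimal load step might look like the sketch below. It uses an in-memory SQLite database as a stand-in for a real data warehouse, and the table name `revenue_by_region` is hypothetical. The load runs inside one transaction, and `INSERT OR REPLACE` on a primary key means rerunning the same load updates rows instead of duplicating them.

```python
import sqlite3


def load(conn: sqlite3.Connection, rows: list[tuple[str, float]]) -> None:
    """Load (region, revenue) rows into a warehouse table in a single transaction."""
    with conn:  # commits on success, rolls back if any statement fails
        conn.execute(
            "CREATE TABLE IF NOT EXISTS revenue_by_region ("
            "region TEXT PRIMARY KEY, revenue REAL NOT NULL)"
        )
        conn.executemany(
            "INSERT OR REPLACE INTO revenue_by_region (region, revenue) VALUES (?, ?)",
            rows,
        )


warehouse = sqlite3.connect(":memory:")  # stand-in for a real warehouse connection
load(warehouse, [("North", 120.5), ("South", 40.25)])
print(warehouse.execute(
    "SELECT region, revenue FROM revenue_by_region ORDER BY region"
).fetchall())
```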

"ETL is a widely used data integration technique that involves three core elements: Extract, Transform, and Load."

By understanding each phase of the ETL process, you can effectively manage your data and gain valuable insights. This knowledge empowers you to make informed decisions and drive strategic growth.

You can achieve seamless data integration with an ETL data pipeline. It automates the process of extracting, transforming, and loading data from various sources into a centralized data warehouse. This automation reduces manual intervention and minimizes human errors. By integrating data from multiple sources, you create a unified dataset that provides a comprehensive view of your business operations. This unified approach enables you to make informed decisions based on accurate and consistent data.
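To show what "a unified dataset" means in practice, the sketch below merges customer records from two hypothetical sources (a CRM and a billing system) on a shared `customer_id` key. The source names and fields are assumptions for illustration. When two sources supply the same field, the later source wins, which is one simple conflict rule among many you could choose.

```python
# Hypothetical records from two separate systems that share a customer_id key.
crm = [{"customer_id": 1, "name": "Acme"}, {"customer_id": 2, "name": "Globex"}]
billing = [{"customer_id": 1, "balance": 250.0}, {"customer_id": 3, "balance": 75.0}]


def unify(*sources):
    """Merge records from several sources into one record per customer_id."""
    merged = {}
    for source in sources:
        for record in source:
            # Later sources overwrite earlier ones for any field they share.
            merged.setdefault(record["customer_id"], {}).update(record)
    return [merged[key] for key in sorted(merged)]


unified = unify(crm, billing)
print(unified)
```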

Maintaining high data quality becomes easier with an ETL data pipeline. The transformation phase of the ETL process involves cleaning and filtering data, ensuring that only relevant and accurate information gets loaded into your data warehouse. You can apply business rules to standardize data formats and remove duplicates, enhancing the reliability of your datasets. High-quality data is crucial for generating meaningful insights and supporting effective decision-making.
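Standardizing formats and removing duplicates can be sketched as follows. The accepted date formats and the choice of email address as the deduplication key are assumptions for this example. Note that a format like `05/03/2024` is ambiguous in general; here it is assumed to be day/month/year.

```python
from datetime import datetime

# Assumed date formats seen across the sources (day/month order for the slash format).
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%b %d, %Y")


def standardize_date(value: str) -> str:
    """Convert any accepted date format to ISO 8601 (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"Unrecognized date: {value!r}")


def standardize_and_dedupe(rows):
    """Normalize emails and dates, keeping the first record seen for each email."""
    seen = set()
    result = []
    for row in rows:
        email = row["email"].strip().lower()
        if email in seen:
            continue  # duplicate customer: skip it
        seen.add(email)
        result.append({"email": email, "signup_date": standardize_date(row["signup_date"])})
    return result


rows = [
    {"email": "Ana@Example.com", "signup_date": "2024-03-05"},
    {"email": "ana@example.com ", "signup_date": "05/03/2024"},
    {"email": "bo@example.com", "signup_date": "Mar 07, 2024"},
]
print(standardize_and_dedupe(rows))
```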

An ETL data pipeline significantly boosts efficiency in data processing. By automating the extraction, transformation, and loading stages, you streamline the data management workflow. This automation allows you to schedule data updates and ensure consistent and reliable data transfers. You save time and resources by eliminating manual data handling tasks. The efficiency gained through ETL automation enables you to focus on analyzing data and deriving actionable insights, rather than getting bogged down by data processing tasks.

"ETL automation drives efficiency in data extraction, transformation, and loading stages by using connectors, applying complex transformations, and loading data without disruption."

By leveraging the benefits of an ETL data pipeline, you enhance data integration, improve data quality, and increase efficiency. These advantages empower you to harness the full potential of your data, driving strategic growth and informed decision-making.

Creating an ETL data pipeline involves several critical steps. Each step ensures that your data pipeline functions efficiently and effectively. Let's explore these steps in detail.

You begin by planning and designing your ETL data pipeline. This phase involves understanding your data sources and identifying the data you need to extract. You also define the transformations required to convert raw data into a usable format. Consider the destination where you will load the data, such as a data warehouse or a database.
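One way to make the planning phase concrete is to write the design down as a small, checkable record of sources, transformations, and destination before building anything. The `PipelineSpec` class below is a hypothetical sketch, not a feature of any ETL tool; it simply captures the three decisions this step describes and flags obvious gaps.

```python
from dataclasses import dataclass


@dataclass
class PipelineSpec:
    """Hypothetical design record for an ETL pipeline."""
    name: str
    sources: list[str]          # where data is extracted from
    transformations: list[str]  # ordered transformation steps
    destination: str            # where the transformed data is loaded

    def validate(self) -> list[str]:
        """Return a list of design problems; an empty list means the plan is complete."""
        problems = []
        if not self.sources:
            problems.append("no data sources defined")
        if not self.transformations:
            problems.append("no transformations defined")
        if not self.destination:
            problems.append("no load destination defined")
        if self.destination in self.sources:
            problems.append("destination must differ from the sources")
        return problems


spec = PipelineSpec(
    name="daily_sales",
    sources=["orders_db.orders", "exports/returns.csv"],
    transformations=["drop rows without amount", "normalize region names", "sum revenue by region"],
    destination="warehouse.revenue_by_region",
)
print(spec.validate())
```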

"Effective planning and design lay the foundation for a successful ETL data pipeline."

Once you have a solid plan, you move on to implementation. This step involves setting up the tools and technologies needed to build your pipeline. You might use ETL tools like FineDataLink, which offers a low-code platform for easy data integration.

"Implementation is where your ETL pipeline comes to life, transforming your data into valuable insights."

After implementing your ETL pipeline, you deploy it into a production environment. This phase involves monitoring and maintaining the pipeline to ensure it runs smoothly. Regular maintenance helps you address any issues that arise and optimize performance.
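Monitoring can start very simply: record how long each step takes, how many rows it produced, and whether it failed. The wrapper below is a hypothetical sketch using only Python's standard `logging` and `time` modules. It retries a failing step a fixed number of times and warns when a step returns fewer rows than expected; a production setup would typically add backoff between retries and send alerts somewhere other than a log.

```python
import logging
import time

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("etl")


def run_with_monitoring(step_name, step, *, min_rows=1, retries=2):
    """Run one pipeline step, retrying on failure and logging basic health metrics."""
    for attempt in range(1, retries + 2):
        start = time.perf_counter()
        try:
            rows = step()
        except Exception:
            log.exception("%s failed on attempt %d", step_name, attempt)
            continue
        elapsed = time.perf_counter() - start
        log.info("%s produced %d rows in %.3fs", step_name, len(rows), elapsed)
        if len(rows) < min_rows:
            log.warning("%s returned fewer rows than expected (%d < %d)",
                        step_name, len(rows), min_rows)
        return rows
    raise RuntimeError(f"{step_name} failed after {retries + 1} attempts")


# Usage: a hypothetical extract step that fails once, then succeeds.
calls = {"n": 0}


def flaky_extract():
    calls["n"] += 1
    if calls["n"] == 1:
        raise ConnectionError("source temporarily unavailable")
    return [{"id": 1}, {"id": 2}]


rows = run_with_monitoring("extract_orders", flaky_extract)
```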

"Deployment and maintenance ensure that your ETL pipeline remains robust and reliable over time."

By following these steps, you can build an ETL data pipeline that meets your data management needs. Proper planning, implementation, and maintenance empower you to harness the full potential of your data, driving informed decision-making and strategic growth.

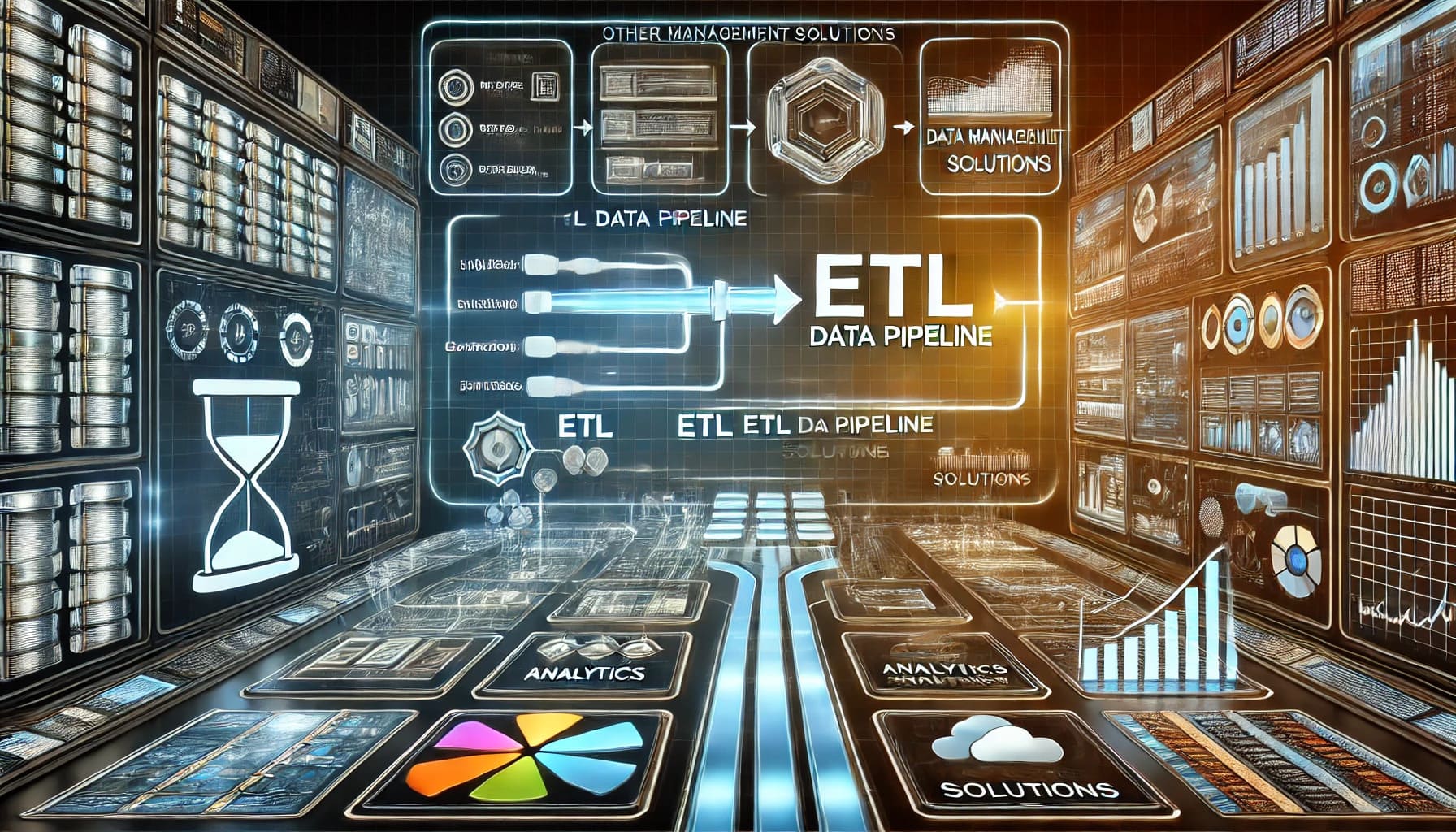

When exploring data management solutions, you may encounter various options. Understanding the differences between ETL and other solutions helps you choose the right approach for your needs.

ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) are two distinct data processing methods. Both involve extracting data from sources, but they differ in the sequence of operations.

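ETL process: data is extracted from the sources, transformed (cleaned, filtered, and restructured) in a separate processing step, and only then loaded into the data warehouse.

ELT process: data is extracted and loaded into the target warehouse in its raw form, and the transformations run afterwards inside the warehouse using its own processing power.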

"ETL requires data transformation before loading, while ELT transforms data after loading."

Choosing between ETL and ELT depends on your infrastructure and data processing needs. ETL suits scenarios where data quality is paramount before analysis. ELT takes advantage of the computational power of cloud-based data warehouses, which makes it well suited to handling large datasets.
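The difference in ordering is easiest to see side by side. In this minimal sketch, an in-memory SQLite database stands in for the warehouse and the sales rows are hypothetical. The ETL version aggregates in Python and loads only the result; the ELT version loads the raw rows first and then aggregates with SQL inside the database. Both produce the same summary, but ELT keeps the raw table available for re-transformation later.

```python
import sqlite3

# Hypothetical raw rows: (region, amount as text, as it might arrive from a CSV).
RAW_ROWS = [("north", "120.50"), ("south", "40.25"), ("north", "9.50")]
SUMMARY_QUERY = "SELECT region, total FROM sales_summary ORDER BY region"


def run_etl(conn: sqlite3.Connection) -> list[tuple]:
    # ETL: transform in the pipeline first, then load only the finished result.
    totals: dict[str, float] = {}
    for region, amount in RAW_ROWS:
        totals[region] = totals.get(region, 0.0) + float(amount)
    conn.execute("CREATE TABLE sales_summary (region TEXT, total REAL)")
    conn.executemany("INSERT INTO sales_summary VALUES (?, ?)", sorted(totals.items()))
    return conn.execute(SUMMARY_QUERY).fetchall()


def run_elt(conn: sqlite3.Connection) -> list[tuple]:
    # ELT: load the raw rows untouched, then transform with SQL inside the database.
    conn.execute("CREATE TABLE raw_sales (region TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_sales VALUES (?, ?)", RAW_ROWS)
    conn.execute(
        "CREATE TABLE sales_summary AS "
        "SELECT region, SUM(CAST(amount AS REAL)) AS total "
        "FROM raw_sales GROUP BY region"
    )
    return conn.execute(SUMMARY_QUERY).fetchall()


etl_result = run_etl(sqlite3.connect(":memory:"))
elt_result = run_elt(sqlite3.connect(":memory:"))
print(etl_result, elt_result)
```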

While ETL is a type of data pipeline, not all data pipelines follow the ETL process. Understanding this distinction helps you select the right tool for your data strategy.

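ETL data pipelines: always follow the extract, transform, load sequence and are typically used to prepare structured data for analysis in a warehouse.

General data pipelines: move data between systems and may or may not transform it along the way; they can carry diverse data types and follow patterns other than ETL, such as ELT or plain replication.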

"ETL plays a critical role in data integration by ensuring structured data for analysis."

When deciding between ETL and other data pipeline solutions, consider your data types, processing requirements, and desired outcomes. ETL remains a robust choice for structured data preparation, while general data pipelines offer versatility for diverse data needs.

You find ETL data pipelines in various industries, each benefiting from their unique capabilities. In the manufacturing sector, these pipelines pull data from production machines, supply chain systems, and ERP systems. This integration allows you to optimize production schedules and reduce delivery times. By analyzing data from multiple sources, you can identify bottlenecks and streamline operations.

In the healthcare industry, ETL data pipelines play a crucial role in managing patient records and clinical data. You can extract data from electronic health records (EHRs) and transform it into a standardized format. This process ensures that healthcare providers have access to accurate and up-to-date information, improving patient care and treatment outcomes.

Retail businesses also rely on ETL data pipelines to manage inventory and customer data. By integrating data from point-of-sale systems and online platforms, you gain insights into customer preferences and purchasing patterns. This information helps you tailor marketing strategies and optimize stock levels, enhancing customer satisfaction and boosting sales.
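As a small illustration of the retail case, the sketch below combines unit sales from a point-of-sale feed and an online feed, then compares them with on-hand inventory to flag products that may need restocking. The SKUs, quantities, and reorder threshold are all hypothetical.

```python
from collections import Counter

# Hypothetical (sku, quantity) sales from two channels, plus current stock levels.
pos_sales = [("SKU-1", 3), ("SKU-2", 1)]
online_sales = [("SKU-1", 2), ("SKU-3", 4)]
inventory = {"SKU-1": 6, "SKU-2": 10, "SKU-3": 5}
REORDER_THRESHOLD = 2  # assumed minimum stock before reordering


def units_sold(*channels):
    """Total units sold per SKU across all sales channels."""
    totals = Counter()
    for channel in channels:
        for sku, qty in channel:
            totals[sku] += qty
    return totals


def needs_reorder(stock, sold, threshold):
    """SKUs whose remaining stock after sales is at or below the threshold."""
    return sorted(sku for sku, on_hand in stock.items() if on_hand - sold.get(sku, 0) <= threshold)


sold = units_sold(pos_sales, online_sales)
print(needs_reorder(inventory, sold, REORDER_THRESHOLD))
```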

"ETL data pipelines enable seamless data integration across various industries, driving efficiency and informed decision-making."

Consider a case study from the financial sector. A leading bank implemented an ETL data pipeline to manage transaction data from multiple branches. By transforming and loading this data into a centralized system, the bank improved fraud detection and compliance reporting. The pipeline enabled real-time monitoring of transactions, allowing the bank to respond swiftly to suspicious activities.

In another example, a global logistics company used an ETL data pipeline to integrate data from its fleet management systems. By analyzing vehicle performance and route data, the company optimized delivery routes and reduced fuel consumption. This approach not only lowered operational costs but also minimized the environmental impact of its operations.

A telecommunications company faced challenges in managing customer data from various sources. By deploying an ETL data pipeline, the company consolidated customer information into a single database. This integration improved customer service by providing agents with a comprehensive view of customer interactions and preferences.

"Real-world case studies demonstrate the transformative power of ETL data pipelines in enhancing operational efficiency and decision-making."

By exploring these industry applications and case studies, you can see how ETL data pipelines drive innovation and efficiency across different sectors. These examples highlight the versatility and impact of ETL solutions in solving complex data challenges.

FanRuan plays a pivotal role in enhancing your data management capabilities through its innovative solutions. By leveraging FanRuan's tools, you can streamline your data processes and gain valuable insights.

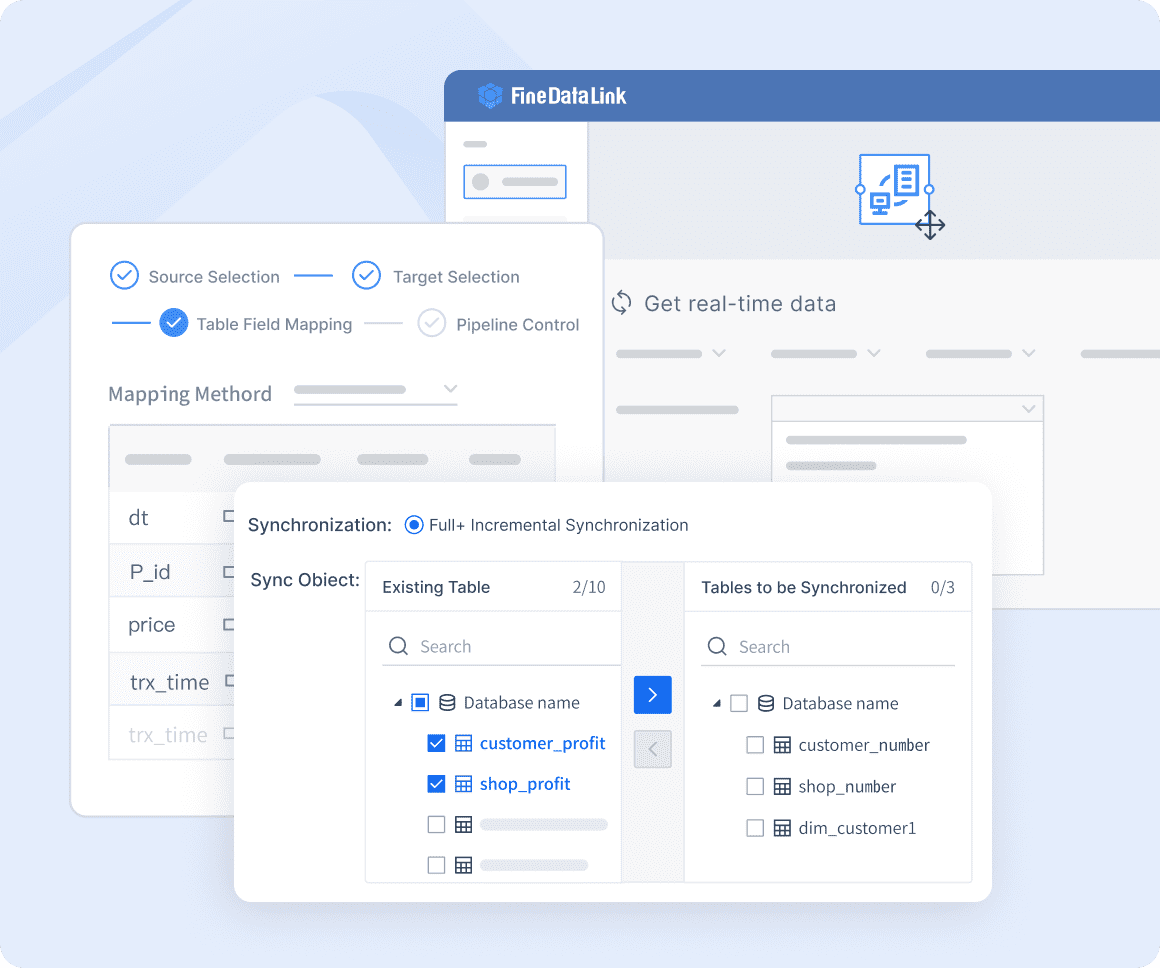

FineDataLink stands out as a comprehensive platform designed to simplify complex data integration tasks. You can use it to automate the extraction, transformation, and loading of data, ensuring seamless data flow across various systems. The platform's low-code approach allows you to build efficient ETL processes without extensive coding knowledge. With drag-and-drop functionality, you can easily design data workflows that meet your specific needs.

"FineDataLink empowers you to automate data movement, reducing manual intervention and human errors."

By utilizing FineDataLink, you can enhance your data integration processes, ensuring that your data is accurate and ready for analysis.

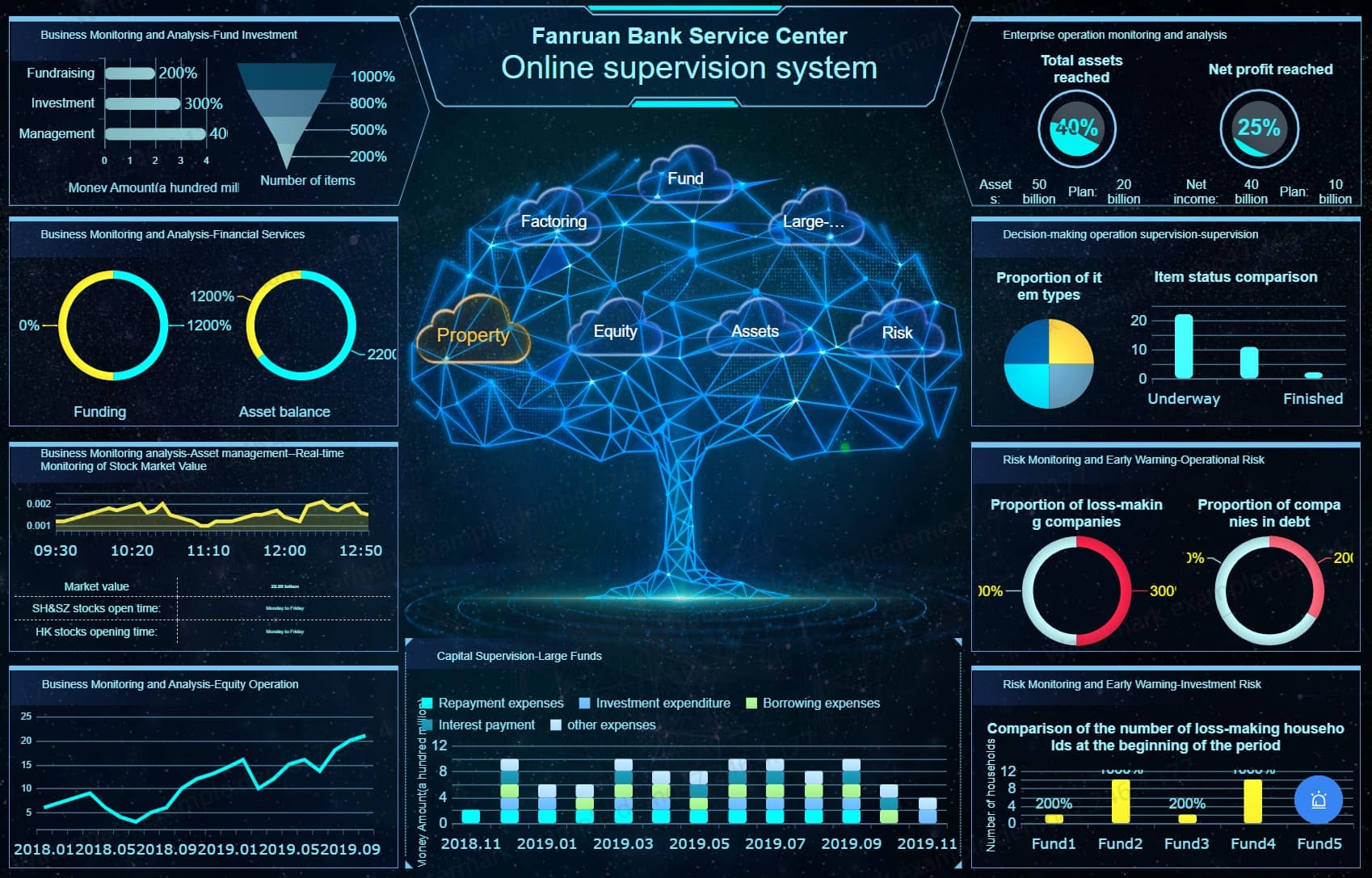

FineBI complements your ETL efforts by providing a powerful platform for data analysis and visualization. It enables you to transform raw data into actionable insights, facilitating informed decision-making. With FineBI, you can connect to various data sources and create interactive dashboards that offer a comprehensive view of your business operations.

"FineBI transforms raw data into insightful visualizations, empowering you to make strategic decisions with confidence."

By integrating FineBI into your data strategy, you can unlock the full potential of your data, driving business growth and innovation.

When you embark on implementing an ETL data pipeline, several key considerations come into play. These factors ensure that your pipeline operates efficiently and securely, providing reliable data for analysis.

Selecting the appropriate tools forms the backbone of a successful ETL data pipeline. You need tools that align with your specific data needs and infrastructure. Consider the following when choosing ETL tools:

"Extraction, transformation, and loading (ETL) processes are the foundation of every organization's data management strategy." — ProjectPro

By carefully selecting the right tools, you lay a strong foundation for your ETL data pipeline, enhancing its reliability and efficiency.

Data security remains a top priority when implementing an ETL data pipeline. Protecting sensitive information from unauthorized access and breaches is crucial. Here are some strategies to ensure data security:

"The reliability and efficiency of these processes will enhance by implementing a set of best practices for managing ETL data pipelines." — ProjectPro

By prioritizing data security, you protect your organization's valuable information, ensuring that your ETL data pipeline remains robust and trustworthy.
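One common protective measure is to pseudonymize sensitive fields during the transform step, so raw personal data never reaches the warehouse. The sketch below uses a keyed hash (HMAC-SHA-256 from Python's standard library); the field list is hypothetical, and the random key is for demonstration only. With a stable key loaded from a secret manager, the same input always maps to the same pseudonym, so analysts can still join and count on it. This complements access controls and encryption rather than replacing them.

```python
import hashlib
import hmac
import os

# Hypothetical set of fields treated as personal data in this example.
SENSITIVE_FIELDS = {"email", "phone"}


def pseudonymize(value: str, key: bytes) -> str:
    """Replace a value with its keyed SHA-256 digest (hex) so the raw value is not stored."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def protect_row(row: dict, key: bytes) -> dict:
    """Pseudonymize sensitive fields and pass all other fields through unchanged."""
    return {
        field: pseudonymize(str(value), key) if field in SENSITIVE_FIELDS else value
        for field, value in row.items()
    }


# Demo key only: in practice, load a stable key from a secret manager or environment variable.
key = os.urandom(32)
row = {"customer_id": 42, "email": "ana@example.com", "phone": "555-0100", "country": "PT"}
safe = protect_row(row, key)
print(safe)
```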


When you explore ETL data pipelines, you might have several questions. Here are some frequently asked questions to help you understand ETL pipelines better.

1. What is an ETL data pipeline?

An ETL data pipeline is a process that extracts data from various sources, transforms it into a structured format, and loads it into a data warehouse. This process ensures that your data is ready for analysis and decision-making.

2. Why is ETL important in data management?

ETL plays a crucial role in data management by ensuring data quality and integration. It helps you clean, validate, and standardize data before loading it into a centralized repository. This process enhances data accuracy and reliability, enabling informed decisions.

Survey Results: ETL pipelines significantly improve data quality and integration, leading to better decision-making and regulatory compliance.

3. How does ETL differ from ELT?

ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) differ in the sequence of operations. In ETL, you transform data before loading it into a data warehouse. In ELT, you load data first and then transform it using the warehouse's processing power. ETL suits scenarios where data quality is paramount, while ELT leverages modern data warehouses' scalability.

4. What are the benefits of using an ETL data pipeline?

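ETL data pipelines offer several benefits, including seamless integration of data from multiple sources, higher data quality through cleaning and standardization, and greater efficiency through automated processing.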

5. Can ETL pipelines handle real-time data?

Traditional ETL pipelines focus on batch processing. However, modern ETL tools like FineDataLink support real-time data synchronization, allowing you to keep your data up-to-date across multiple platforms.
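A common way to move beyond full batch reloads is incremental synchronization with a watermark: each run copies only the rows changed since the last run. The sketch below is a hypothetical, in-memory illustration of that general technique; it is not how FineDataLink implements real-time sync. It assumes every source row carries a reliable `updated_at` timestamp, and it does not capture deletions, which log-based change data capture handles.

```python
from datetime import datetime


def sync_changes(source_rows, target, watermark):
    """Upsert rows modified after `watermark` into `target` (keyed by id); return the new watermark."""
    changed = [row for row in source_rows if row["updated_at"] > watermark]
    for row in changed:
        target[row["id"]] = dict(row)
    return max((row["updated_at"] for row in changed), default=watermark)


# Hypothetical source table and target store.
source = [
    {"id": 1, "status": "new", "updated_at": datetime(2025, 1, 1, 9, 0)},
    {"id": 2, "status": "new", "updated_at": datetime(2025, 1, 1, 9, 5)},
]
target = {}

watermark = sync_changes(source, target, datetime.min)  # first run copies everything
source[0] = {"id": 1, "status": "shipped", "updated_at": datetime(2025, 1, 1, 9, 10)}
watermark = sync_changes(source, target, watermark)     # next run picks up only order 1
print(target[1]["status"], watermark)
```

Running the sync on a short interval keeps the target close to current; the shorter the interval, the closer this approaches real-time behavior.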

6. What tools can I use to build an ETL data pipeline?

You can use various tools to build an ETL data pipeline. FineDataLink offers a low-code platform with drag-and-drop functionality, making it easy to design and manage data workflows. Choose tools that align with your data needs and infrastructure.

7. How do I ensure data security in an ETL pipeline?

To ensure data security, you should:

The Author

Howard

Data Management Engineer & Data Research Expert at FanRuan
