Data validation is the cornerstone of maintaining database integrity. It checks that data is accurate and reliable before it is stored or used, which gives decision-makers a sound foundation. By catching errors and discrepancies early, validation helps ensure that the information in a database is trustworthy and usable. This article explains why data validation matters for database quality, the main types of validation, the techniques and tools used to implement it, and where the practice is heading.

In database management, data validation is the process of checking data against specific rules or criteria to verify that it is correct and complete. For example, a rule might require every order record to carry a customer ID and a non-negative amount. Applied consistently, these checks keep errors and discrepancies out of the database and keep the information in it reliable.

Data validation therefore does more than keep data accurate. It supports data quality and integrity, regulatory compliance, informed decision-making, error prevention, efficient operations, and customer satisfaction, which makes it an essential part of any data management strategy in a data-driven business.

Organizations can take several approaches to data validation. Understanding how the main types differ is the first step toward building validation processes that protect data integrity and quality.

Syntactic data validation checks that data adheres to an expected format. By defining the structure an input should have, such as a date written as YYYY-MM-DD or a five-digit postal code, organizations can check each entry against a predetermined pattern and reject entries that do not match, minimizing errors and inconsistencies in the database.
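Format checks of this kind are usually written as patterns. The sketch below assumes a hypothetical record with `email`, `signup_date`, and `zip_code` fields; the patterns are deliberately simple illustrations, not production-grade rules (the email check, for instance, only tests the rough shape of an address).

```python
import re
from datetime import datetime

# Deliberately simple, hypothetical patterns; real rules depend on the data.
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[A-Za-z]{2,}")
ISO_DATE_PATTERN = re.compile(r"[0-9]{4}-[0-9]{2}-[0-9]{2}")
US_ZIP_PATTERN = re.compile(r"[0-9]{5}(-[0-9]{4})?")


def is_valid_email(value: str) -> bool:
    """Shape check only (local@domain.tld); this is not full RFC 5322 validation."""
    return EMAIL_PATTERN.fullmatch(value) is not None


def is_valid_iso_date(value: str) -> bool:
    """YYYY-MM-DD layout that is also a real calendar date (rejects 2024-02-30)."""
    if ISO_DATE_PATTERN.fullmatch(value) is None:
        return False
    try:
        datetime.strptime(value, "%Y-%m-%d")
    except ValueError:
        return False
    return True


def is_valid_zip(value: str) -> bool:
    """US ZIP code: five digits, optionally followed by a hyphen and four digits."""
    return US_ZIP_PATTERN.fullmatch(value) is not None


def format_errors(record: dict) -> list[str]:
    """Return one message per field whose value does not match its expected format."""
    checks = {
        "email": is_valid_email,
        "signup_date": is_valid_iso_date,
        "zip_code": is_valid_zip,
    }
    return [
        f"{field}: unexpected format {record.get(field)!r}"
        for field, check in checks.items()
        if not check(str(record.get(field, "")))
    ]
```

The date check combines a pattern with a calendar parse because a pattern alone would accept impossible dates such as February 30.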

Syntax rules extend format checks to the structure of a record as a whole: which fields are required, what data type each field holds, and how long values may be. Enforcing these rules consistently across datasets ensures that every entry meets the same predefined criteria, which keeps information management uniform and reliable.
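Structural rules like these are often expressed as a schema. The following is a minimal sketch, assuming a hypothetical `FieldRule` type and a hypothetical customer schema; dedicated schema libraries offer richer versions of the same idea.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FieldRule:
    """Structural rule for one field in a hypothetical schema."""
    name: str
    type_: type
    required: bool = True
    max_length: Optional[int] = None


# Hypothetical schema for a customer table.
CUSTOMER_SCHEMA = [
    FieldRule("customer_id", int),
    FieldRule("name", str, max_length=100),
    FieldRule("country_code", str, max_length=2),
    FieldRule("notes", str, required=False, max_length=500),
]


def structure_errors(record: dict, schema: list[FieldRule]) -> list[str]:
    """Check presence, type, and length for each field, then flag unknown fields."""
    errors = []
    for rule in schema:
        value = record.get(rule.name)
        if value is None:
            if rule.required:
                errors.append(f"{rule.name}: missing required field")
            continue
        # bool is a subclass of int in Python, so reject it explicitly for int fields.
        wrong_type = not isinstance(value, rule.type_) or (
            rule.type_ is int and isinstance(value, bool)
        )
        if wrong_type:
            errors.append(
                f"{rule.name}: expected {rule.type_.__name__}, got {type(value).__name__}"
            )
            continue
        if rule.max_length is not None and len(value) > rule.max_length:
            errors.append(f"{rule.name}: longer than {rule.max_length} characters")
    known = {rule.name for rule in schema}
    for extra in sorted(set(record) - known):
        errors.append(f"{extra}: not defined in the schema")
    return errors
```

Reporting every problem in a record, rather than stopping at the first, lets whoever fixes the data correct it in one pass.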

Semantic data validation checks whether data makes sense, that is, whether the logical relationships and dependencies between data elements hold. A record can be syntactically valid and still contradict itself, for example an order whose ship date falls before its order date. Checking logical consistency surfaces such contradictions before they distort decisions based on the data.
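Semantic checks compare fields with each other rather than one at a time. The sketch below assumes a hypothetical order record with `order_date`, `ship_date`, `delivery_date`, and `status` fields; `today` is passed in explicitly so the check is deterministic and easy to test.

```python
from datetime import date
from typing import Optional


def consistency_errors(order: dict, today: date) -> list[str]:
    """Cross-field checks for a hypothetical order record.

    Each field may be well-formed on its own; these rules check that the
    fields make sense together.
    """
    errors = []
    ordered: Optional[date] = order.get("order_date")
    shipped: Optional[date] = order.get("ship_date")
    delivered: Optional[date] = order.get("delivery_date")

    if ordered and ordered > today:
        errors.append("order_date is in the future")
    if ordered and shipped and shipped < ordered:
        errors.append("ship_date is before order_date")
    if shipped and delivered and delivered < shipped:
        errors.append("delivery_date is before ship_date")
    if order.get("status") == "delivered" and delivered is None:
        errors.append("status is 'delivered' but delivery_date is empty")
    if delivered and not shipped:
        errors.append("delivery_date is set but ship_date is empty")
    return errors
```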

Business-rule validation applies organization-specific logic to the data. By encoding rules that reflect their own operational needs, such as discount limits, credit limits, or regional restrictions, organizations can tailor validation to how they actually work. Checking data against business rules helps ensure that it complies with industry standards, regulatory requirements, and internal policies, and stays aligned with strategic goals.
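Business rules are specific to each organization, so the policy values below (a 20% discount cap without approval, a credit limit, and a regional restriction on hazardous goods) are purely hypothetical. The sketch shows the general shape: the rules live in one place and each violation produces a readable message. Money is handled with `Decimal` to avoid floating-point rounding.

```python
from decimal import Decimal

# Hypothetical policy values; real rules come from the organization's own policies.
MAX_DISCOUNT_WITHOUT_APPROVAL = Decimal("0.20")
RESTRICTED_CATEGORIES_BY_REGION = {"EU": {"hazmat"}}


def order_total(order: dict) -> Decimal:
    """Sum of quantity * unit_price over all lines, after the order-level discount."""
    subtotal = sum(
        (line["quantity"] * line["unit_price"] for line in order["lines"]), Decimal("0")
    )
    return subtotal * (1 - order["discount_rate"])


def business_rule_errors(order: dict, credit_limit: Decimal) -> list[str]:
    """Return one message per violated (hypothetical) business rule."""
    errors = []
    if order["discount_rate"] > MAX_DISCOUNT_WITHOUT_APPROVAL and not order.get(
        "manager_approved", False
    ):
        cap = int(MAX_DISCOUNT_WITHOUT_APPROVAL * 100)
        errors.append(f"discount above {cap}% requires manager approval")
    if order_total(order) > credit_limit:
        errors.append("order total exceeds the customer's credit limit")
    restricted = RESTRICTED_CATEGORIES_BY_REGION.get(order["region"], set())
    for line in order["lines"]:
        if line["category"] in restricted:
            errors.append(
                f"{line['sku']}: category '{line['category']}' cannot ship to {order['region']}"
            )
    return errors
```

Keeping policy values in named constants (or, in practice, in configuration) means a policy change does not require rewriting the checks themselves.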

As organizations continue to treat data validation as central to database integrity, understanding the difference between syntactic and semantic validation becomes essential. A comprehensive validation strategy combines syntactic checks with semantic evaluations to protect the database against inaccuracies, errors, and inconsistencies.

Organizations have many techniques and tools available for validating data. Robust validation processes uphold data integrity and quality, which in turn supports informed decision-making and streamlined operations.

Manual validation relies on people to verify that data entries are correct and complete. Reviewers inspect datasets, identify errors, and correct inconsistencies by hand. Manual validation takes time and effort, but human judgment can catch nuanced issues that automated tools may overlook.

Automated validation, by contrast, uses software tools and algorithms to validate data quickly and consistently. Automating validation streamlines error detection, reduces manual intervention, and improves overall data quality. Automated tools can run checks rapidly across large datasets, confirming that data meets predefined standards with minimal human involvement.
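At its core, an automated validator applies a set of rules to every row and summarizes the results. The sketch below assumes a hypothetical rule set for payment rows (`id`, `amount`, `currency`). It makes a single pass over any iterable, so it can consume a generator that reads from a file or database cursor without loading the whole dataset into memory.

```python
from collections import defaultdict
from collections.abc import Callable, Iterable

# Hypothetical rule set: a name plus a predicate that returns True when a row passes.
RULES: list[tuple[str, Callable[[dict], bool]]] = [
    ("id_present", lambda row: row.get("id") is not None),
    ("amount_non_negative",
     lambda row: isinstance(row.get("amount"), (int, float)) and row["amount"] >= 0),
    ("currency_known", lambda row: row.get("currency") in {"USD", "EUR", "CNY"}),
]


def run_validation(rows: Iterable[dict], rules=RULES, max_examples: int = 5) -> dict:
    """Apply every rule to every row in a single pass.

    Returns the number of rows checked, a failure count per rule, and up to
    `max_examples` failing row indices per rule for follow-up.
    """
    failures = {name: 0 for name, _ in rules}
    examples: dict[str, list[int]] = defaultdict(list)
    rows_checked = 0
    for index, row in enumerate(rows):
        rows_checked += 1
        for name, passes in rules:
            if not passes(row):
                failures[name] += 1
                if len(examples[name]) < max_examples:
                    examples[name].append(index)
    return {"rows_checked": rows_checked, "failures": failures, "examples": dict(examples)}
```

Capping the number of stored examples keeps the report small even when a rule fails on millions of rows, while still pointing a reviewer at concrete cases.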

Data validation tools make validation processes more efficient. They speed up error identification, streamline validation tasks, and improve overall data quality. Popular validation tools include:

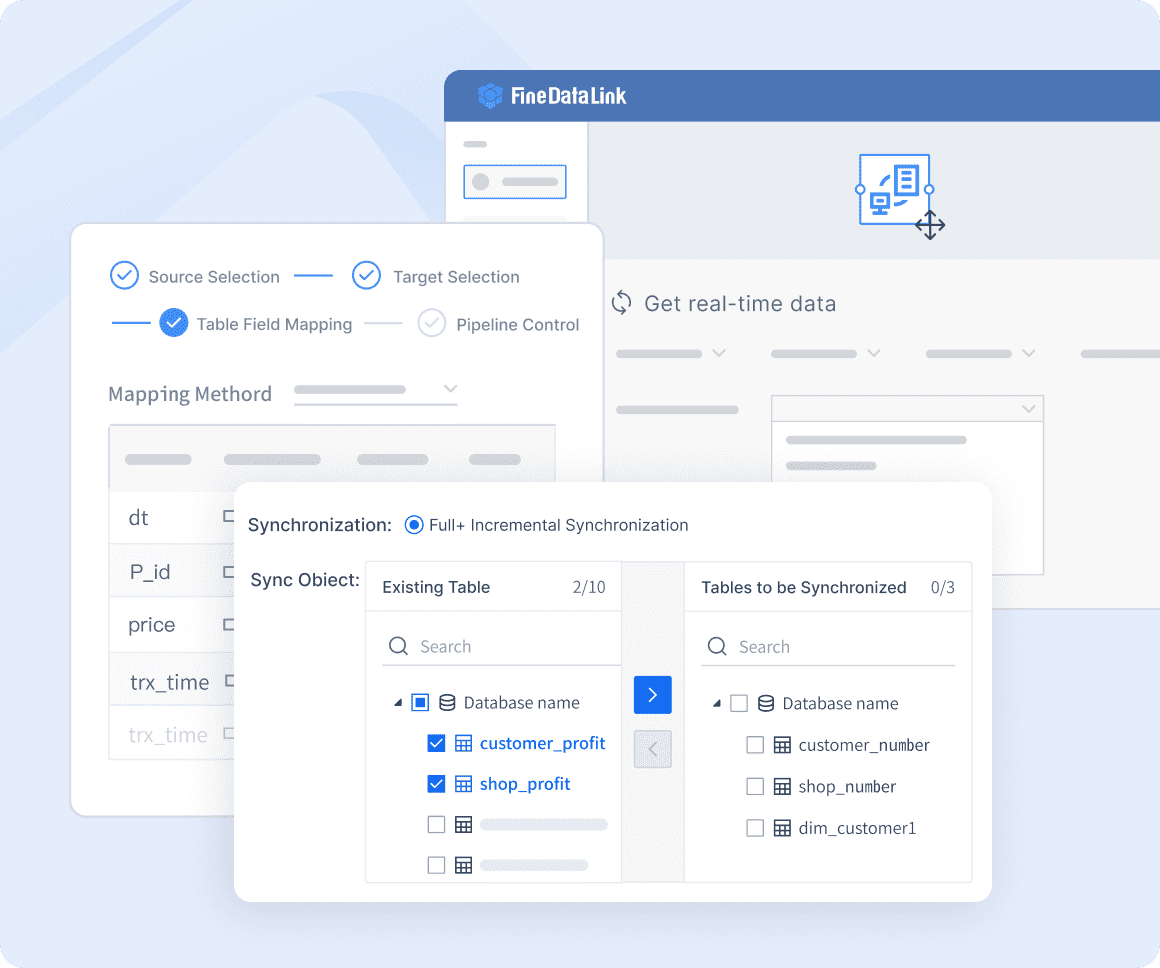

FineDataLink: A modern, scalable data integration solution that addresses the challenges of data integration, data quality, and data analytics through three core functions: real-time data synchronization, ETL/ELT, and APIs.

FineDataLink can synchronize data across multiple tables in real time with minimal latency, typically measured in milliseconds. This makes it suitable for tasks such as database migration, backup, and building real-time data warehouses.

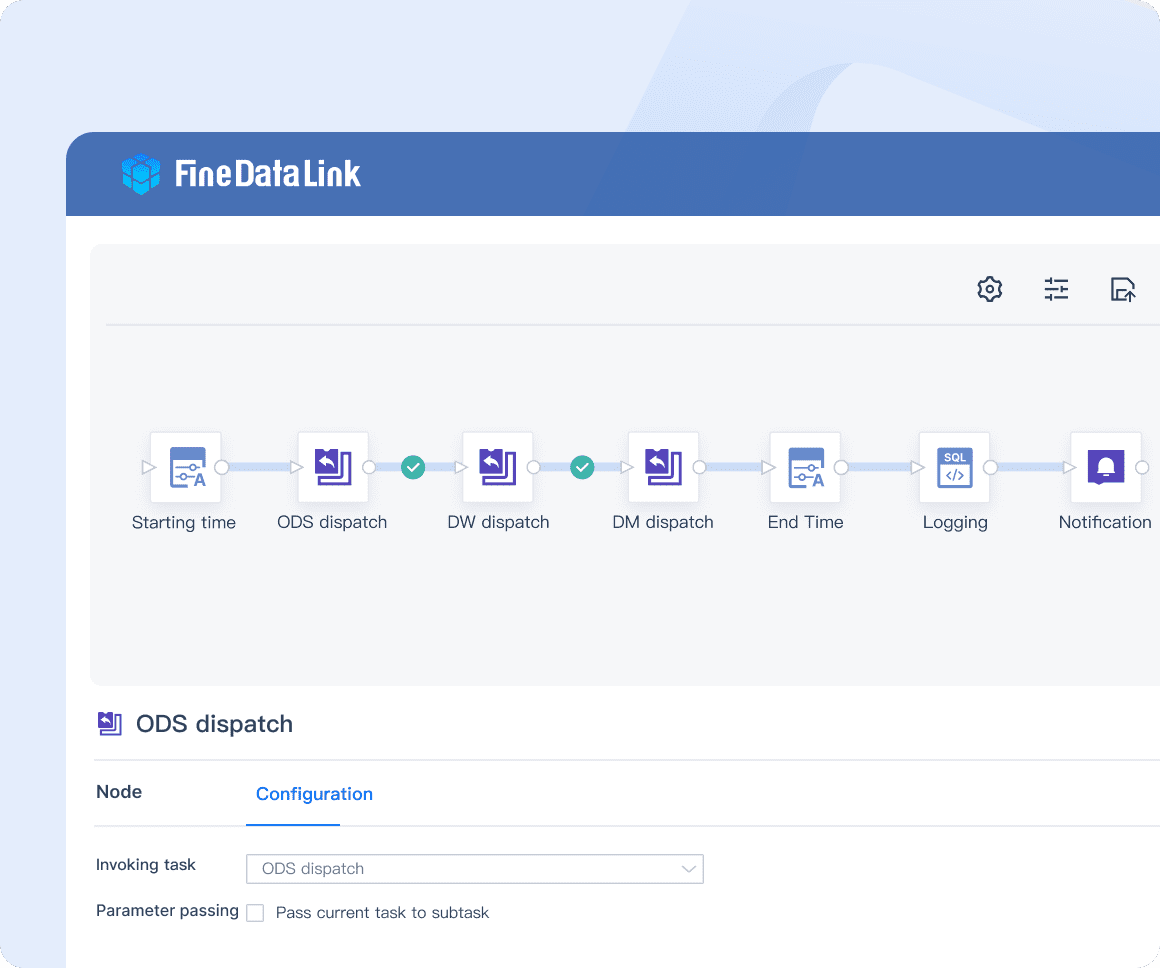

Scheduled data computation and synchronization is another core function. It can be used for data preprocessing and as an ETL tool for building data warehouses.

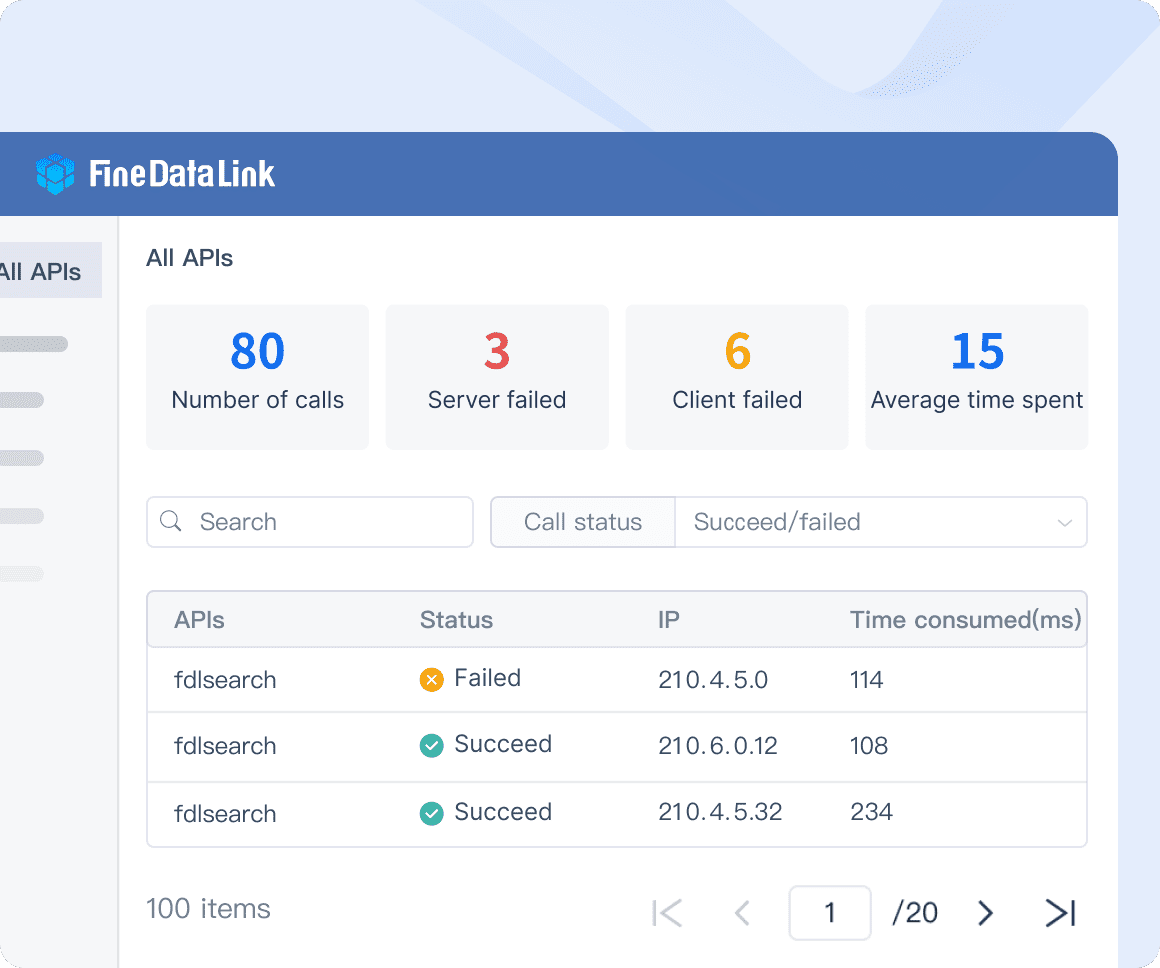

An API can be developed and deployed within five minutes, without writing code. This makes the feature well suited to sharing data between systems, particularly SaaS applications.

Data Validator Pro: A comprehensive tool that provides customizable validation rules for different data types.

Validation Master: An intuitive platform that automates the validation process for seamless error detection.

Data Integrity Checker: A robust tool that ensures data consistency and accuracy through advanced validation algorithms.

By incorporating these tools into their workflows, organizations can detect errors faster, save time, and strengthen the integrity of their databases.

In some cases, organizations may require tailored validation solutions to meet specific business needs or industry requirements. Custom validation solutions are designed to align with unique data structures, formats, or regulations that standard tools may not accommodate. These bespoke solutions offer flexibility in defining validation rules, handling complex datasets, and addressing niche challenges within an organization's data ecosystem.
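One common way to build such a custom framework is a registry that lets teams attach their own rules to specific datasets. The sketch below is a minimal version under stated assumptions: the `rule` decorator, the `parts` dataset, and the part-number scheme (`PN-` plus six digits plus a check digit equal to their sum modulo 10) are all hypothetical, chosen to illustrate a niche in-house format that a general-purpose tool would not know about.

```python
import re
from collections.abc import Callable
from typing import Optional

# A check returns an error message, or None when the row passes.
Check = Callable[[dict], Optional[str]]

# Hypothetical registry: dataset name -> ordered list of (rule name, check).
_REGISTRY: dict[str, list[tuple[str, Check]]] = {}


def rule(dataset: str, name: str) -> Callable[[Check], Check]:
    """Decorator that registers a custom check for one dataset."""
    def register(check: Check) -> Check:
        _REGISTRY.setdefault(dataset, []).append((name, check))
        return check
    return register


def validate(dataset: str, row: dict) -> list[str]:
    """Run every check registered for the dataset, in registration order."""
    errors = []
    for name, check in _REGISTRY.get(dataset, []):
        message = check(row)
        if message is not None:
            errors.append(f"{name}: {message}")
    return errors


# Hypothetical in-house scheme: "PN-" + 6 digits + a check digit equal to
# the sum of those 6 digits modulo 10.
PART_NUMBER = re.compile(r"PN-[0-9]{7}")


@rule("parts", "part_number_check_digit")
def check_part_number(row: dict) -> Optional[str]:
    part_number = row.get("part_number", "")
    if not isinstance(part_number, str) or PART_NUMBER.fullmatch(part_number) is None:
        return "expected 'PN-' followed by 7 digits"
    digits = [int(ch) for ch in part_number[3:]]
    if sum(digits[:6]) % 10 != digits[6]:
        return "check digit mismatch"
    return None


@rule("parts", "weight_required_for_physical_items")
def check_weight(row: dict) -> Optional[str]:
    weight = row.get("weight_kg")
    if row.get("category") != "software" and (weight is None or weight <= 0):
        return "physical parts need a positive weight_kg"
    return None
```

New rules are added by writing a function and decorating it; the validation entry point does not change.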

When considering custom validation solutions, organizations should work with experienced developers or vendors who specialize in building tailored data validation frameworks. Investing in solutions built to their exact specifications lets businesses optimize validation for efficiency and accuracy. Enterprises such as FanRuan, with more than a decade of experience in the data field, are well placed to provide them.

As businesses continue to prioritize accurate and reliable data management, combining effective validation techniques with capable validation tools remains essential. Pairing manual expertise with automated efficiency, and adding custom solutions where necessary, lets organizations protect their databases against errors while maintaining high standards of data integrity.

As data management continues to evolve, data validation will remain central to keeping information assets accurate and reliable. By adopting emerging trends and best practices in validation, businesses can protect their databases against errors and discrepancies and foster a culture of data-driven decision-making and operational excellence.

Two prominent trends are reshaping how organizations approach data quality assurance. The first is the use of AI and machine learning. These technologies can automate validation, streamline error detection, and improve overall data accuracy: instead of relying only on hand-written rules, models trained on historical data can flag records that deviate from learned patterns, reducing manual intervention and making data management workflows more efficient.
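The article does not name a specific model, so the sketch below does not attempt one. As a hedged point of comparison, it uses a simple statistical technique rather than machine learning: the modified z-score of Iglewicz and Hoaglin, based on the median absolute deviation (MAD). Like a learned model, it derives what counts as "normal" from the data itself instead of from a hand-written range; ML approaches such as isolation forests generalize the same idea to many columns at once.

```python
from statistics import median

# Constant from Iglewicz and Hoaglin's modified z-score; 3.5 is their suggested cutoff.
SCALE = 0.6745


def robust_outliers(values: list[float], threshold: float = 3.5) -> list[int]:
    """Return indices of values whose modified z-score exceeds `threshold`.

    The center (median) and spread (MAD) are both estimated from the data,
    so a few extreme values barely move them.
    """
    if not values:
        return []
    center = median(values)
    mad = median([abs(v - center) for v in values])
    if mad == 0:
        # More than half the values equal the median; the score is undefined,
        # so this simple sketch declines to flag anything.
        return []
    return [i for i, v in enumerate(values) if abs(SCALE * (v - center) / mad) > threshold]
```

For example, in a column of daily order counts around 100 with a single entry of 5000, only the 5000 is flagged. The median and MAD are used instead of the mean and standard deviation because a single extreme value can inflate the standard deviation enough to hide itself.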

Real-time validation marks a further shift in how organizations verify the integrity and consistency of their datasets. Data is checked the moment it arrives, so errors are detected and corrected immediately rather than discovered later in batch runs. This lets businesses address issues proactively and keep data quality current, so that their databases stay accurate, reliable, and responsive to changing operational requirements.
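A minimal sketch of validation at the point of ingestion, assuming a hypothetical temperature-sensor feed: each record is checked as soon as it is read, valid records are passed on immediately, and invalid ones are held in a quarantine list for review instead of reaching the database. The `IngestValidator` class, the field names, and the -40 to 125 °C range are all illustrative assumptions.

```python
from collections.abc import Callable, Iterable, Iterator
from dataclasses import dataclass, field


@dataclass
class IngestValidator:
    """Validate each record as it arrives; pass good records on, quarantine bad ones."""
    check: Callable[[dict], list[str]]
    quarantined: list[tuple[dict, list[str]]] = field(default_factory=list)

    def process(self, stream: Iterable[dict]) -> Iterator[dict]:
        for record in stream:
            errors = self.check(record)
            if errors:
                # Hold the record for review instead of letting it reach the database.
                self.quarantined.append((record, errors))
            else:
                yield record


def check_reading(reading: dict) -> list[str]:
    """Hypothetical rules for a temperature sensor feed."""
    errors = []
    if not reading.get("sensor_id"):
        errors.append("missing sensor_id")
    temp = reading.get("temp_c")
    # -40..125 °C is an assumed rated range for the hypothetical sensor.
    if not isinstance(temp, (int, float)) or not -40 <= temp <= 125:
        errors.append("temp_c outside the sensor's rated range")
    return errors
```

In practice, `stream` would be a message-queue consumer, a change-data-capture feed, or a similar source. Because `process` is a generator, each record is validated as soon as it is consumed rather than in a later batch.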

Data validation is critical for keeping information accurate and reliable. By checking data against specific criteria, organizations can prevent errors, maintain quality, and identify important outliers. Validated data is the basis for sound analytics, giving businesses accurate results and a trustworthy foundation for decisions. Advanced technologies such as AI and machine learning will further improve error detection and streamline data management workflows.

The next step is to choose capable tools from reputable, professional vendors. Selecting and implementing validation tools carefully, and pairing them with best practices for data validation and management, lets businesses make full use of these solutions as their data requirements evolve. With these factors in mind, FineDataLink is a strong option.


The Author

Howard

Data Management Engineer & Data Research Expert at FanRuan
