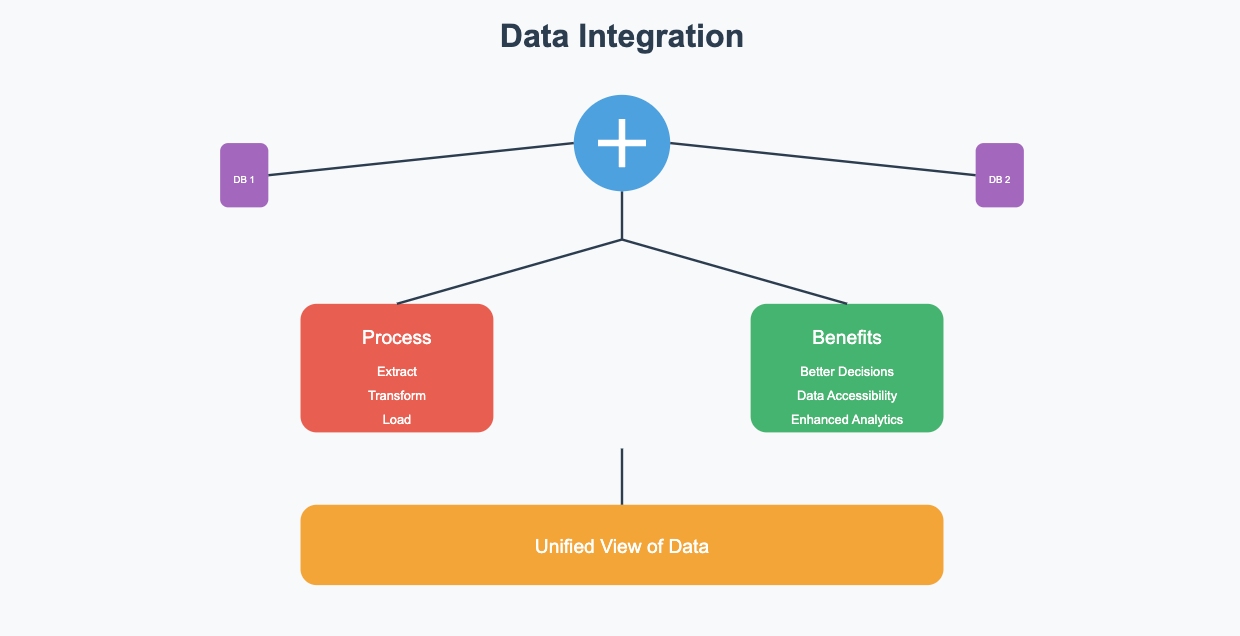

Data integration is the process of merging data from various sources to create a unified view. Its significance lies in improving decision-making, enhancing data accessibility, and supporting analytics initiatives. This blog provides an in-depth exploration of data integration, covering its definition, historical context, processes, tools, benefits, challenges, and future trends. By understanding the essence of data integration, readers can grasp its pivotal role in modern data-driven environments.

From a technical perspective, data integration is the process of combining data from different sources to provide a unified view or to enable comprehensive analysis. This perspective focuses on the mechanisms involved in merging data from diverse sources.

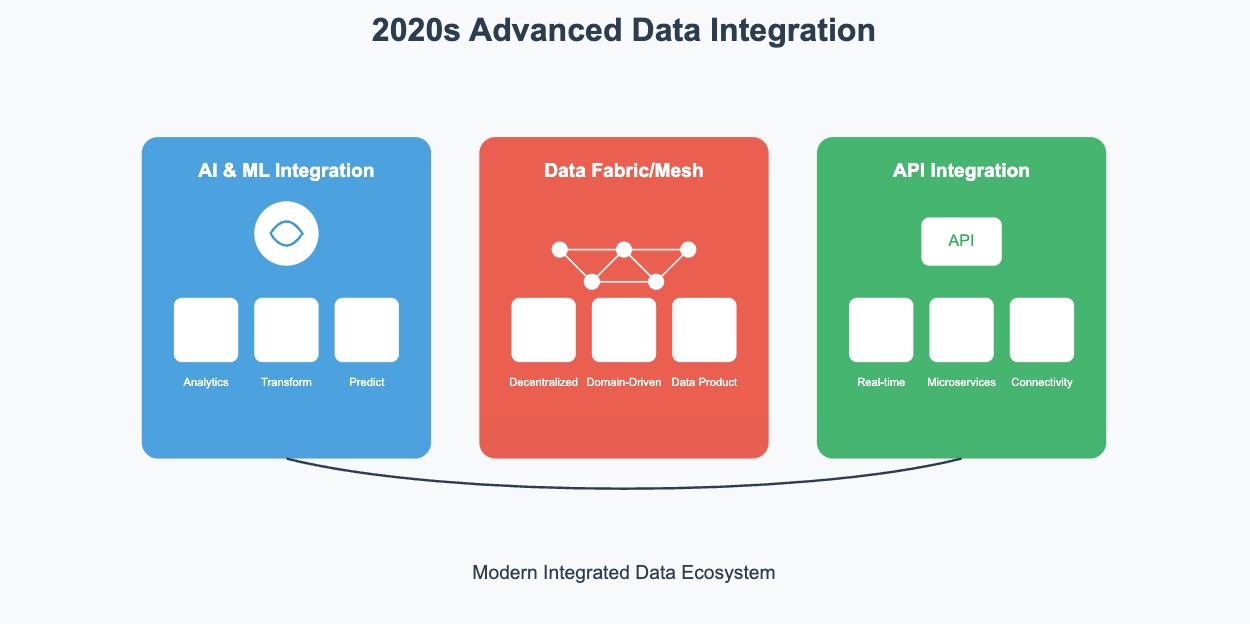

In recent years, cloud-based integration platforms like AWS Glue and Azure Data Factory have become pivotal for real-time data analytics, offering seamless scalability and reduced infrastructure management. These platforms not only support data from traditional databases but also integrate unstructured data sources, such as social media or sensor data, enabling businesses to analyze complex data streams in real time. Furthermore, modern architectures like Data Mesh and Data Fabric are gaining traction for their ability to decentralize data management, promoting domain-oriented ownership and providing more agility in data access and integration across large organizations.

This process, often facilitated by cutting-edge software tools, ensures that information flows seamlessly across systems. In the e-commerce industry, companies use data integration to combine customer data from various touchpoints (website, mobile app, CRM systems) to deliver personalized shopping experiences and targeted marketing campaigns. Additionally, financial institutions integrate data from multiple sources such as transaction systems, external financial reports, and market data to provide better risk management and fraud detection capabilities.

On the other hand, from a business standpoint, data integration embodies the harmonization of disparate datasets to create a unified and coherent narrative for organizational decision-making.

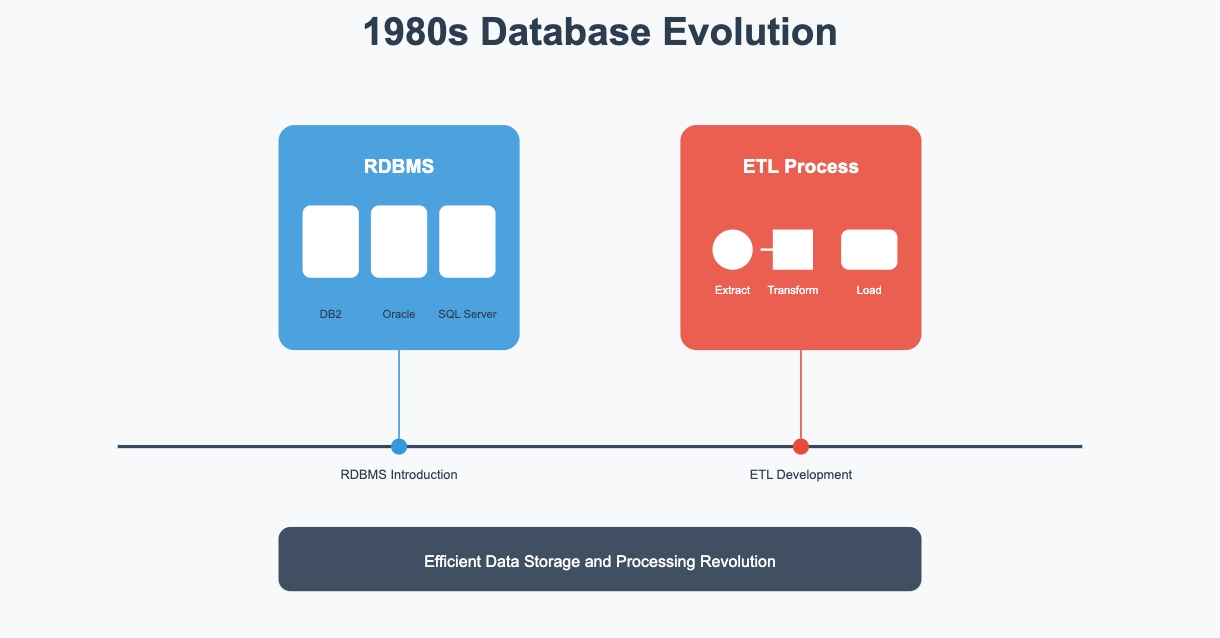

The historical context of data integration reflects the evolution of technology and business needs over several decades. This history is marked by key developments that have shaped how organizations manage and utilize data today.

The evolution of data integration reflects the ongoing advancements in technology and the increasing complexity of business data needs. From the early days of isolated data silos and batch processing to the sophisticated, real-time, and AI-driven integration solutions of today, data integration has become a critical component of modern data management strategies. This evolution has enabled organizations to harness the full potential of their data, driving better insights, efficiency, and innovation.

In contemporary times, data integration stands as a linchpin in driving operational efficiency and informed decision-making across industries. Modern applications harness its power to synchronize information streams from multiple sources, fostering a cohesive ecosystem for analysis and interpretation. For instance, in healthcare, data integration plays a crucial role in unifying patient records from disparate systems to enhance care coordination and clinical outcomes.

Data integration involves several key processes that work together to combine data from various sources into a unified, consistent, and accessible format. These processes ensure that the integrated data is accurate, reliable, and useful for analysis, reporting, and decision-making. Here are the primary processes of data integration:

Effective data integration begins with the meticulous process of data collection. Businesses employ various methods to gather information from disparate sources, ensuring a comprehensive dataset for analysis and decision-making. The diverse data sources utilized range from structured databases to unstructured sources like social media feeds and IoT devices. By amalgamating these varied inputs, organizations can derive valuable insights and drive strategic initiatives.
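As a rough illustration of the collection step, the sketch below reads records from two differently formatted inputs and tags each record with where it came from. The sample data, the source names (`crm`, `mobile_app`), and the helper functions are hypothetical stand-ins, not part of any particular product.

```python
import csv
import io
import json

# Hypothetical sample inputs standing in for real sources (a CRM export and app events).
CRM_CSV = "customer_id,email\n1,ana@example.com\n2,bo@example.com\n"
APP_EVENTS_JSON = '[{"customer_id": 1, "event": "login"}, {"customer_id": 2, "event": "purchase"}]'


def collect_csv(text, source):
    """Read CSV rows into dicts and tag each row with its source name."""
    return [dict(row, _source=source) for row in csv.DictReader(io.StringIO(text))]


def collect_json(text, source):
    """Read a JSON array of objects and tag each object with its source name."""
    return [dict(item, _source=source) for item in json.loads(text)]


# One combined list of records, each still carrying its origin.
records = collect_csv(CRM_CSV, "crm") + collect_json(APP_EVENTS_JSON, "mobile_app")
```

Note that the CSV reader yields every value as a string while the JSON values keep their types, which is one reason a later mapping and cleansing step is usually needed.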

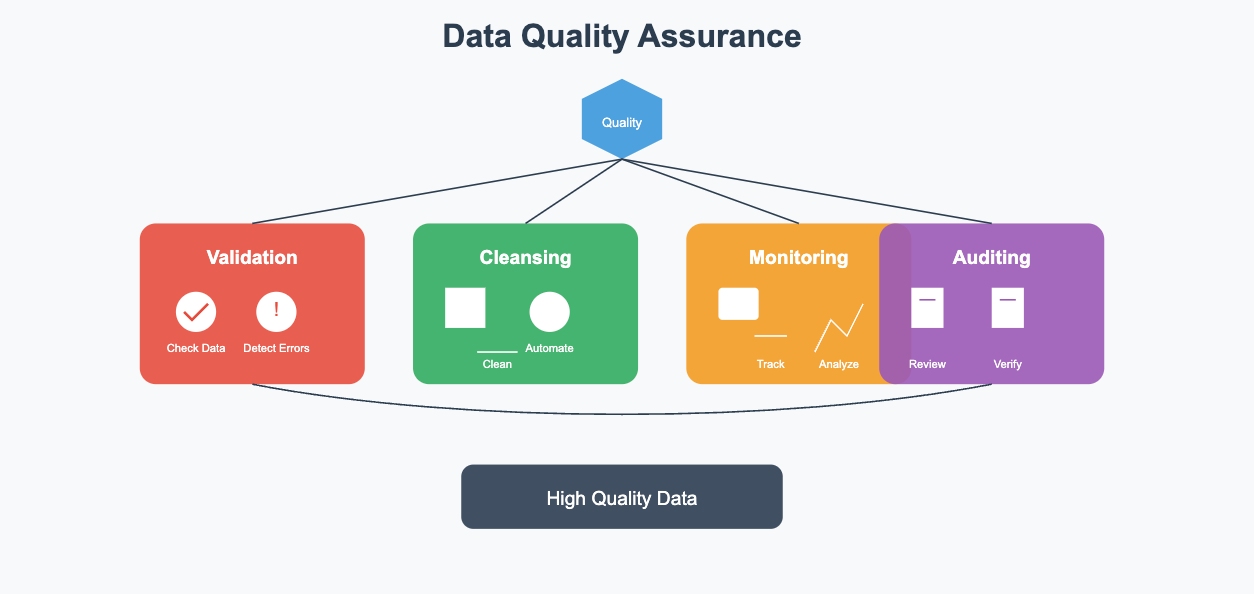

The subsequent phase in the data integration journey involves data mapping, a critical process that establishes relationships between different datasets. This step lays the foundation for aligning information across systems, enabling seamless data flow and interoperability. Additionally, data cleansing plays a pivotal role in enhancing data quality by identifying and rectifying inconsistencies or errors within the datasets. Through meticulous transformation processes, businesses ensure that their integrated data is accurate, reliable, and conducive to informed decision-making.
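A minimal sketch of mapping and cleansing might look like the following. The field names, the `FIELD_MAP` table, and the cleansing rules are assumptions chosen for illustration; real pipelines typically define these per source and per business rule.

```python
# Hypothetical mapping from each source's field names to a shared target schema.
FIELD_MAP = {
    "web": {"user_email": "email", "fullName": "name"},
    "crm": {"Email": "email", "Name": "name"},
}


def map_record(record, source):
    """Rename source fields to their target names; unmapped fields are dropped."""
    mapping = FIELD_MAP[source]
    return {target: record[src] for src, target in mapping.items() if src in record}


def cleanse(record):
    """Trim whitespace, lowercase emails, and turn empty strings into None."""
    cleaned = {}
    for key, value in record.items():
        if isinstance(value, str):
            value = value.strip()
            if key == "email":
                value = value.lower()
            if value == "":
                value = None
        cleaned[key] = value
    return cleaned


raw = {"user_email": "  Ana@Example.COM ", "fullName": "Ana Diaz", "session": "x1"}
clean = cleanse(map_record(raw, "web"))
```

Keeping the mapping in a plain table rather than in code makes it easier to review and to extend when a new source is added.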

For example, a leading retail company utilized machine learning algorithms during the data transformation phase to automatically clean and categorize customer purchase data. This not only improved the accuracy of their product recommendations but also reduced the manual labor involved in data preprocessing by 40%. This illustrates the growing role of AI in data transformation, as it allows businesses to process large datasets more efficiently and derive actionable insights more quickly.

Once data has been ingested and transformed, the focus shifts towards combining data from various sources into a unified repository. This consolidation phase aims to create a cohesive dataset that provides a holistic view of organizational information. By merging disparate datasets and systems, businesses can generate actionable insights and facilitate cross-functional collaboration. Furthermore, the process of creating unified views enables stakeholders to access consolidated data effortlessly, fostering enhanced operational efficiency and strategic alignment.
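One simple way to picture consolidation is a key-based merge, sketched below. The `consolidate` function, the `customer_id` key, and the rule that earlier sources win on conflicts are illustrative assumptions; production systems often apply more elaborate survivorship rules.

```python
def consolidate(sources, key):
    """Merge records from several sources into one unified record per key value.

    Earlier sources take precedence: a later source only fills fields that are
    missing or None in the unified record.
    """
    unified = {}
    for records in sources:
        for record in records:
            target = unified.setdefault(record[key], {})
            for field, value in record.items():
                if target.get(field) is None:
                    target[field] = value
    return unified


# Hypothetical inputs: the CRM knows the name, the website knows the email.
crm = [{"customer_id": 1, "name": "Ana Diaz", "email": None}]
web = [
    {"customer_id": 1, "email": "ana@example.com"},
    {"customer_id": 2, "email": "bo@example.com"},
]
view = consolidate([crm, web], key="customer_id")
```

Here `view[1]` ends up with both the name from the CRM and the email from the website, which is the "unified view" in miniature.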

Organizations use several data transfer methods to move information between systems. One prevalent approach uses Application Programming Interfaces (APIs) to establish connections and transmit data in a structured manner; web services built on Representational State Transfer (REST) are a common choice for sharing data across web applications. Another common practice is transferring data files between platforms or servers. Plain File Transfer Protocol (FTP) does not encrypt traffic, so secure alternatives such as SFTP or FTPS are used when files must be moved securely.
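To make the API-based approach concrete, here is a small sketch that builds an authenticated REST request and decodes a JSON response using only the Python standard library. The URL, the `orders` resource, the token, and the stub opener are all hypothetical; the stub replaces the network call so the example runs offline.

```python
import io
import json
from urllib.request import Request, urlopen


def build_request(base_url, resource, token):
    """Build a GET request for a REST resource, authenticated with a bearer token."""
    return Request(
        f"{base_url.rstrip('/')}/{resource}",
        headers={"Accept": "application/json", "Authorization": f"Bearer {token}"},
        method="GET",
    )


def fetch_records(request, opener=urlopen):
    """Send the request and decode the JSON body of the response."""
    with opener(request) as response:
        return json.loads(response.read().decode("utf-8"))


def stub_opener(request):
    """Offline stand-in for urlopen that returns a canned JSON response."""
    return io.BytesIO(b'[{"order_id": 7, "total": 19.5}]')


req = build_request("https://api.example.com/v1/", "orders", token="demo-token")
orders = fetch_records(req, opener=stub_opener)
```

Against a real service, calling `fetch_records(req)` without the `opener` argument would use `urlopen` and perform the actual HTTP request.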

Upholding data quality standards is paramount in the realm of data integration, guaranteeing that information remains accurate, consistent, and reliable throughout the process. Businesses implement robust validation mechanisms to detect anomalies or discrepancies within datasets, ensuring that only high-quality data is integrated into their systems. Automated data cleansing tools play a pivotal role in identifying and rectifying errors, redundancies, or inconsistencies, thereby enhancing the overall integrity of integrated datasets. Regular audits and monitoring procedures are conducted to validate data accuracy and completeness, safeguarding against potential discrepancies or inaccuracies.
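The kind of validation described above can be sketched as a function that separates clean records from rejects and records why each reject failed. The specific rules (a required `customer_id`, no duplicate keys, a loosely well-formed email) and the sample batch are assumptions for illustration only.

```python
import re

# Deliberately loose email pattern: something@something.something, no spaces.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate(records, key="customer_id"):
    """Split records into valid ones and (record, reasons) pairs for rejects."""
    valid, rejected, seen = [], [], set()
    for record in records:
        reasons = []
        if record.get(key) is None:
            reasons.append("missing key")
        elif record[key] in seen:
            reasons.append("duplicate key")
        email = record.get("email")
        if not email or not EMAIL_RE.match(email):
            reasons.append("invalid email")
        if reasons:
            rejected.append((record, reasons))
        else:
            seen.add(record[key])
            valid.append(record)
    return valid, rejected


batch = [
    {"customer_id": 1, "email": "ana@example.com"},
    {"customer_id": 1, "email": "ana.d@example.com"},
    {"customer_id": None, "email": ""},
    {"customer_id": 3, "email": "not-an-email"},
]
valid, rejected = validate(batch)
```

Returning the reasons alongside each rejected record supports the audits and monitoring mentioned above, since the rejects can be logged and reviewed rather than silently dropped.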

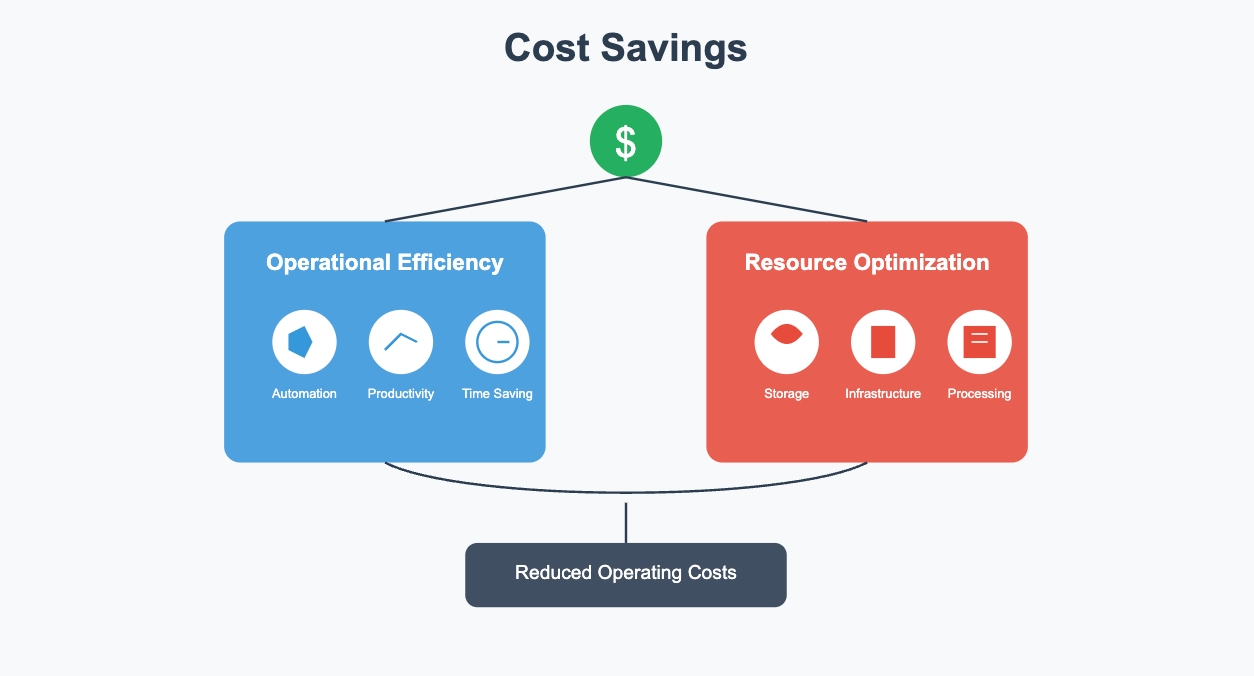

In today's data-driven world, the ability to effectively integrate data from various sources is crucial for organizations seeking to harness the full potential of their information assets. The following sections explore the benefits and challenges of data integration, highlighting how it can improve efficiency, enhance data quality, and provide competitive advantages, while also addressing the complexities that organizations may face. Understanding these aspects shows the strategic importance of data integration in modern business environments.

To address the challenges faced in data integration, modern tools are rapidly evolving, particularly in handling real-time data integration and ensuring data quality. For instance, Talend Data Fabric offers a robust integration platform for the financial sector, enabling seamless data flow across multiple sources and ensuring high-quality data through real-time synchronization and automated data cleansing. Additionally, Apache NiFi, an open-source tool, simplifies the data integration process by enabling stream-based processing across diverse systems, significantly reducing data latency and improving operational efficiency.

For example, a leading global bank utilized Talend Data Fabric to achieve real-time data synchronization across multiple data centers, which enhanced cross-platform decision-making capabilities and reduced financial risks associated with poor data quality.

This integration of emerging tools like Talend and Apache NiFi demonstrates how organizations can now address complex data integration challenges more effectively, ensuring better data accuracy, improved scalability, and faster decision-making.

Numerous tools are available for data integration, each designed to tackle the challenges described above at different stages of the integration process, from extraction to transformation to loading. These tools differ in their functionality, intended use cases, and complexity.

When it comes to data integration, several software tools have gained prominence for their robust capabilities and seamless functionalities. These tools serve as the backbone of data integration processes, enabling organizations to amalgamate data from disparate sources efficiently. Some popular software tools in this domain include:

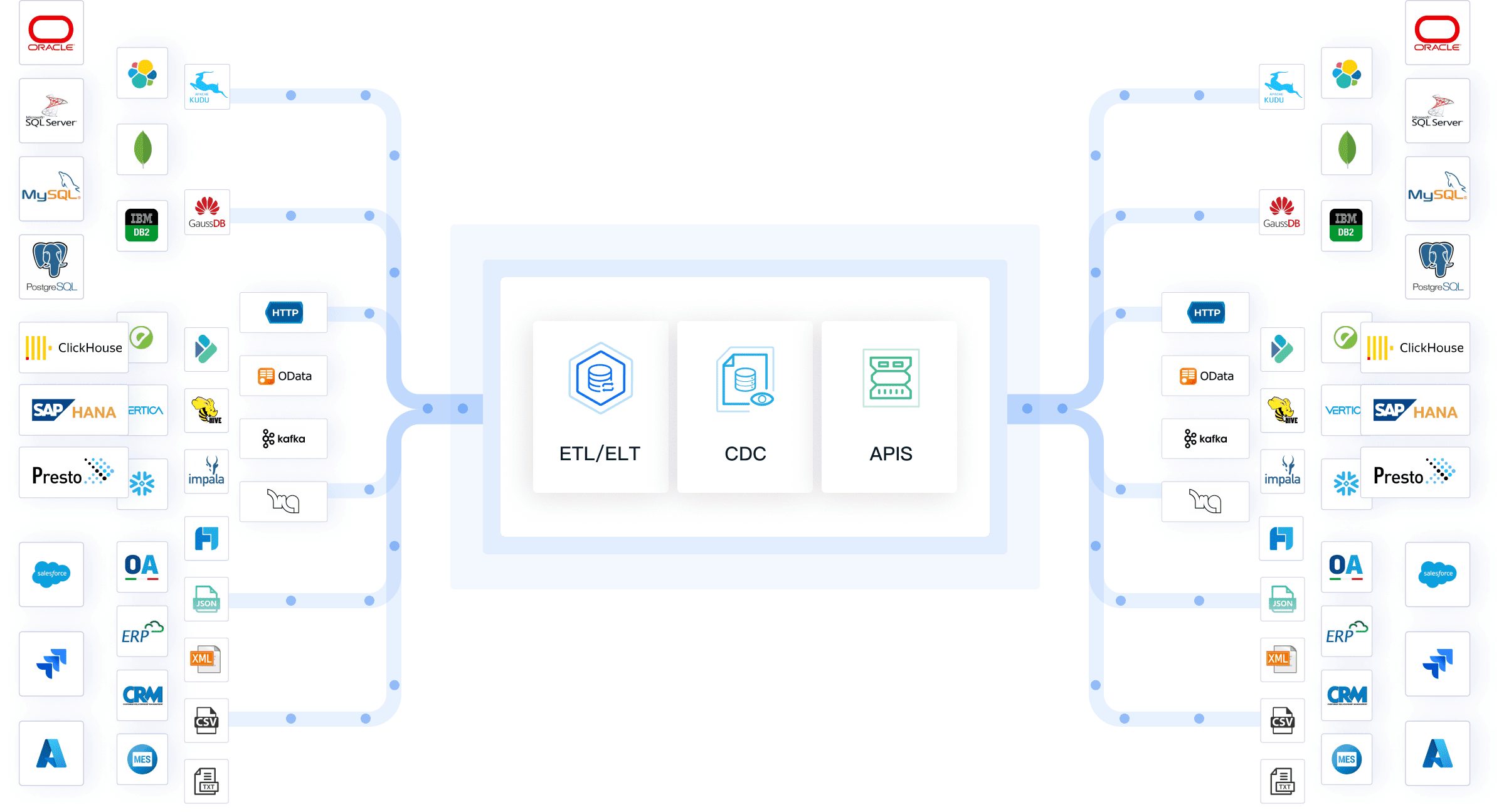

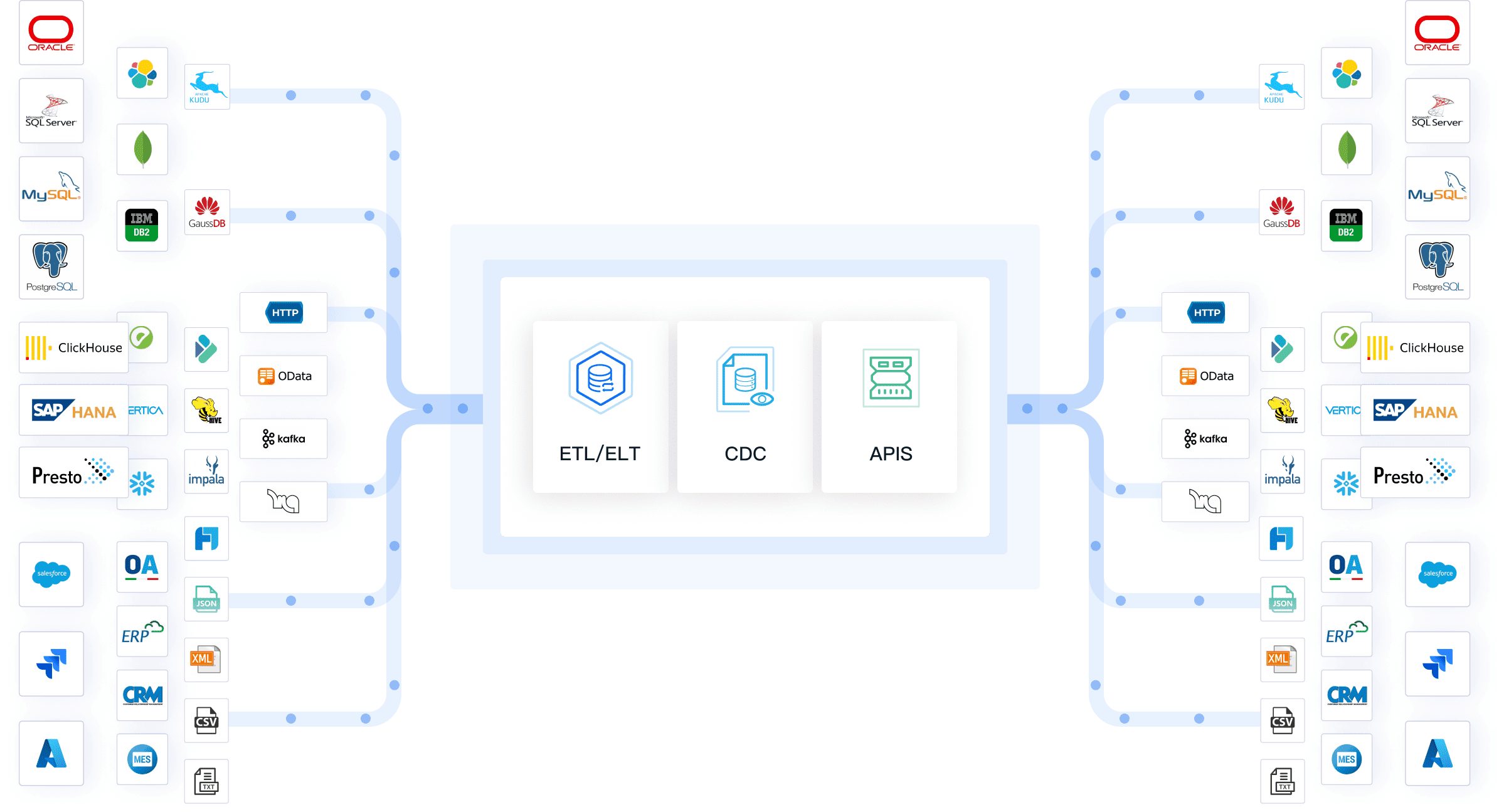

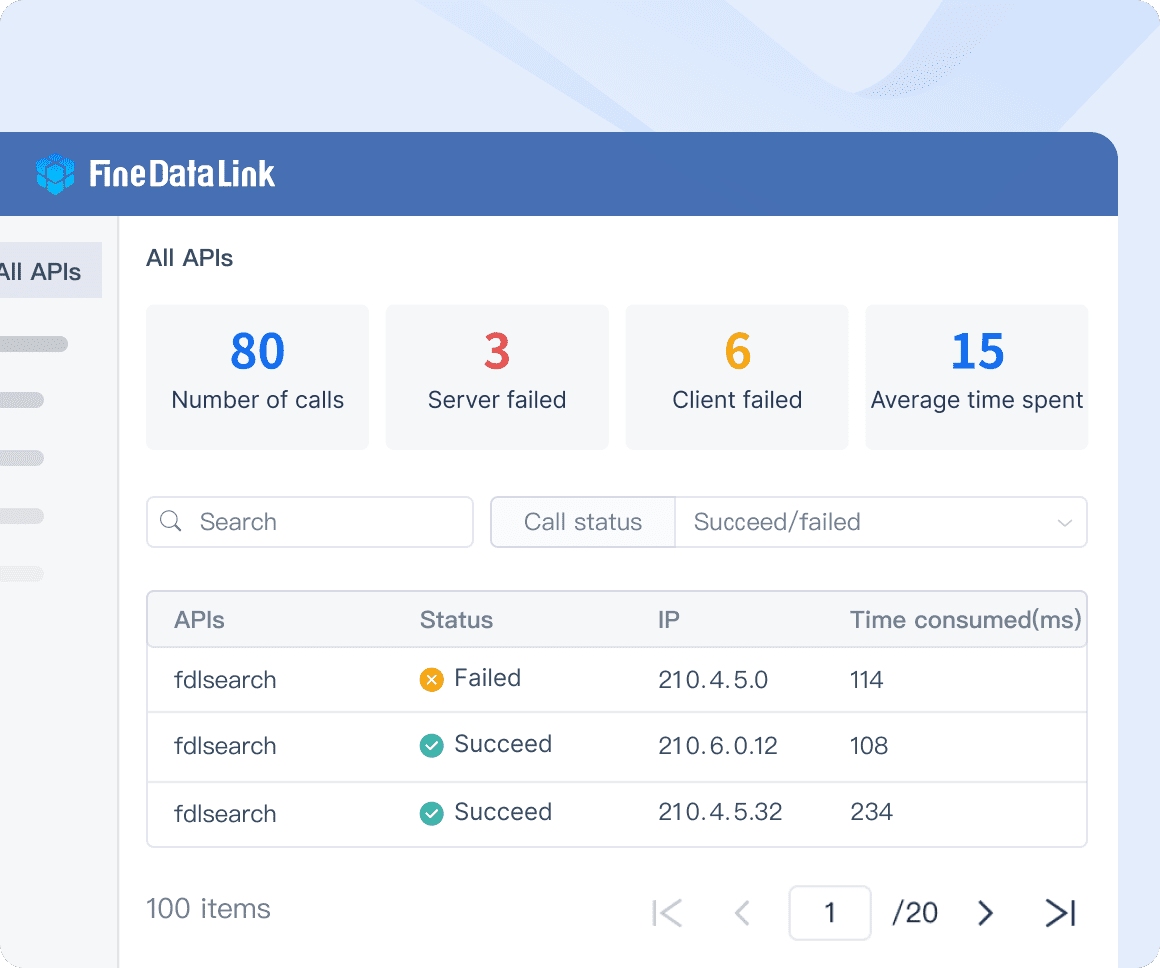

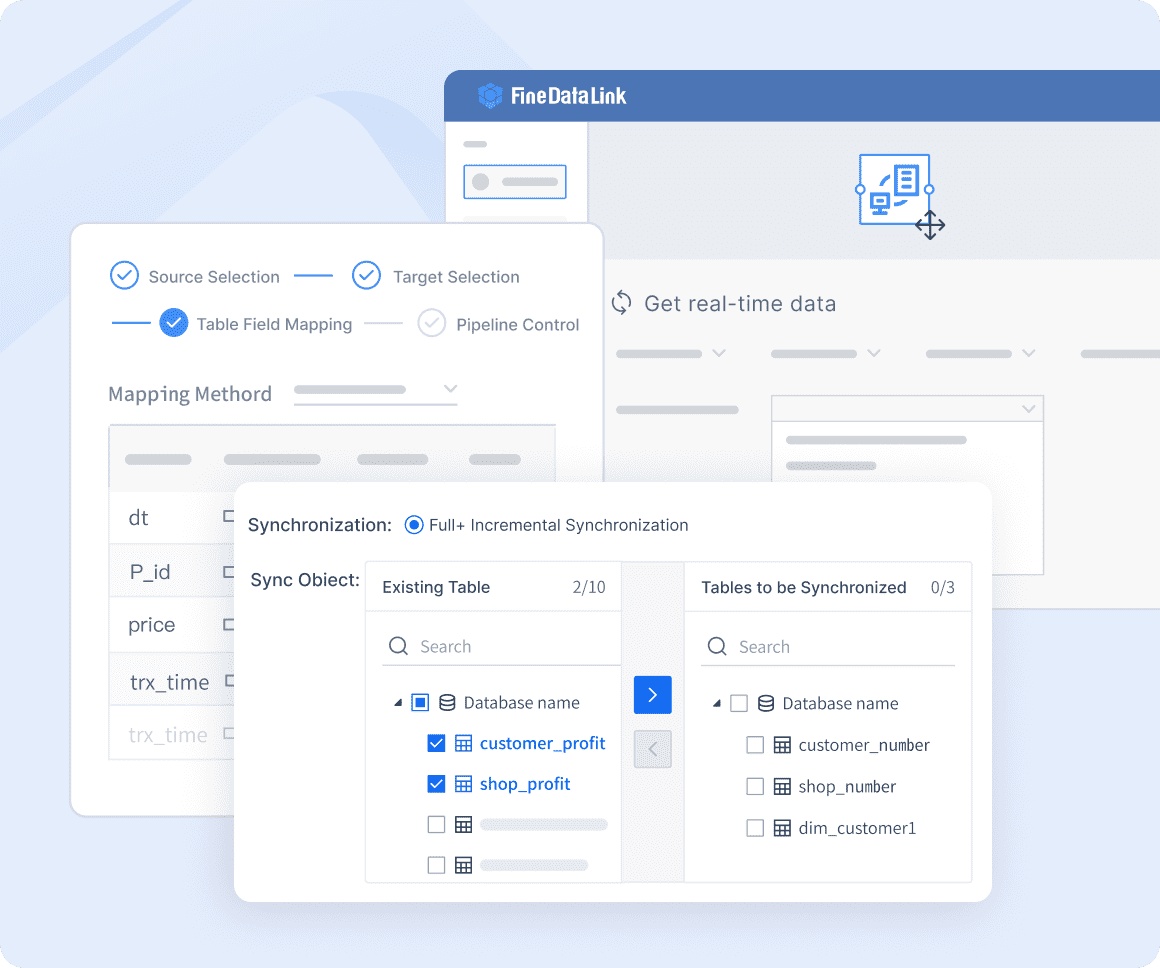

FineDataLink: As a modern and scalable data integration solution, FineDataLink addresses the challenges of data integration, data quality, and data analytics through its three core functions: real-time data synchronization, ETL/ELT, and APIs.

Informatica PowerCenter: A tool known for its comprehensive data integration and transformation features.

Talend Data Fabric: Renowned for its open-source roots and user-friendly interface, making it a preferred choice for many businesses.

IBM InfoSphere DataStage: Recognized for its scalability and ability to handle complex data integration tasks.

Microsoft SQL Server Integration Services (SSIS): A versatile tool that seamlessly integrates with other Microsoft products, simplifying data integration workflows.

These software tools offer a range of features and capabilities that streamline the data integration process and enhance operational efficiency. Take FineDataLink as an example:

Robust Connectivity: FineDataLink provides extensive connectivity options to various data sources, ensuring seamless data ingestion.

Efficient data warehouse construction: The low-code platform streamlines the migration of enterprise data to the data warehouse, relieving computational burdens.

Data Transformation Capabilities: It offers advanced data transformation functionality, facilitating the conversion of raw data into valuable insights.

Application and API integration: Utilize API data accounting capabilities to cut interface development from 2 days to 5 minutes.

How, then, can a data integration tool like FineDataLink solve data integration challenges with its core features?

Cloud-based data integration tools have changed the way organizations approach data management, and several prominent ones have earned acclaim for their innovative solutions and user-centric interfaces.

Open-source data integration tools have emerged as compelling alternatives, and many have gained traction for their versatility and collaborative frameworks.

The landscape of data integration is poised for transformation through the adoption of emerging technologies. Innovations such as Artificial Intelligence (AI) and Machine Learning (ML) are revolutionizing how organizations approach data consolidation tasks. AI-driven algorithms enhance automation capabilities, optimize data mapping processes, and expedite decision-making by identifying correlations within vast datasets.

Looking ahead, the realm of data integration is forecasted to witness substantial advancements driven by technological innovations and evolving business requirements. Predictive analytics tools are projected to play a pivotal role in forecasting trends, mitigating risks, and capitalizing on opportunities within dynamic market landscapes. Additionally, the proliferation of cloud-based solutions is expected to reshape traditional data integration paradigms by offering scalable platforms for seamless information exchange.

By embracing these future trends proactively and addressing current challenges adeptly, organizations can harness the full potential of data integration to drive growth, innovation, and competitive advantage in an increasingly digitized world.

Empowering organizations to make well-informed decisions, data integration greatly enhances data quality by identifying and rectifying errors and inconsistencies from multiple sources. This results in more reliable and accurate data, facilitating effective analysis and providing valuable insights. Moving forward, embracing emerging technologies like Artificial Intelligence (AI) and Machine Learning (ML) will revolutionize data consolidation tasks, optimizing processes and expediting decision-making for a competitive edge in the digital landscape.

In conclusion, by adhering to these guidelines for choosing and deploying data integration tools, alongside adopting best practices for data integration and management, businesses can effectively leverage the potential of these transformative solutions to address their evolving data requirements. Taking these factors into account, FineDataLink may prove to be your optimal solution.

A data analysis framework is a structured approach to analyzing data that defines the steps, methodologies, tools, and techniques used to gather insights from raw data. It provides a systematic way to approach data problems, ensuring consistency and reliability in analysis. A well-defined framework helps improve the accuracy and effectiveness of the analysis by guiding analysts through the correct processes, from data collection to interpretation. Examples of popular data analysis frameworks include CRISP-DM (Cross-Industry Standard Process for Data Mining) and the data science lifecycle.

There are several types of data analysis frameworks, each serving different analytical purposes.

Each framework is suited to different stages of the data analysis process, and selecting the appropriate framework depends on the specific objectives of the analysis.

Organizations can improve data analysis accuracy and effectiveness by carefully selecting and applying the right framework for their objectives.

Data analysis frameworks provide a systematic approach to gathering, processing, and interpreting data, making it easier for decision-makers to base their actions on evidence rather than intuition.

Data analysis frameworks are widely used across various industries to enhance business outcomes.

The Author

Howard

Data Management Engineer & Data Research Expert at FanRuan
