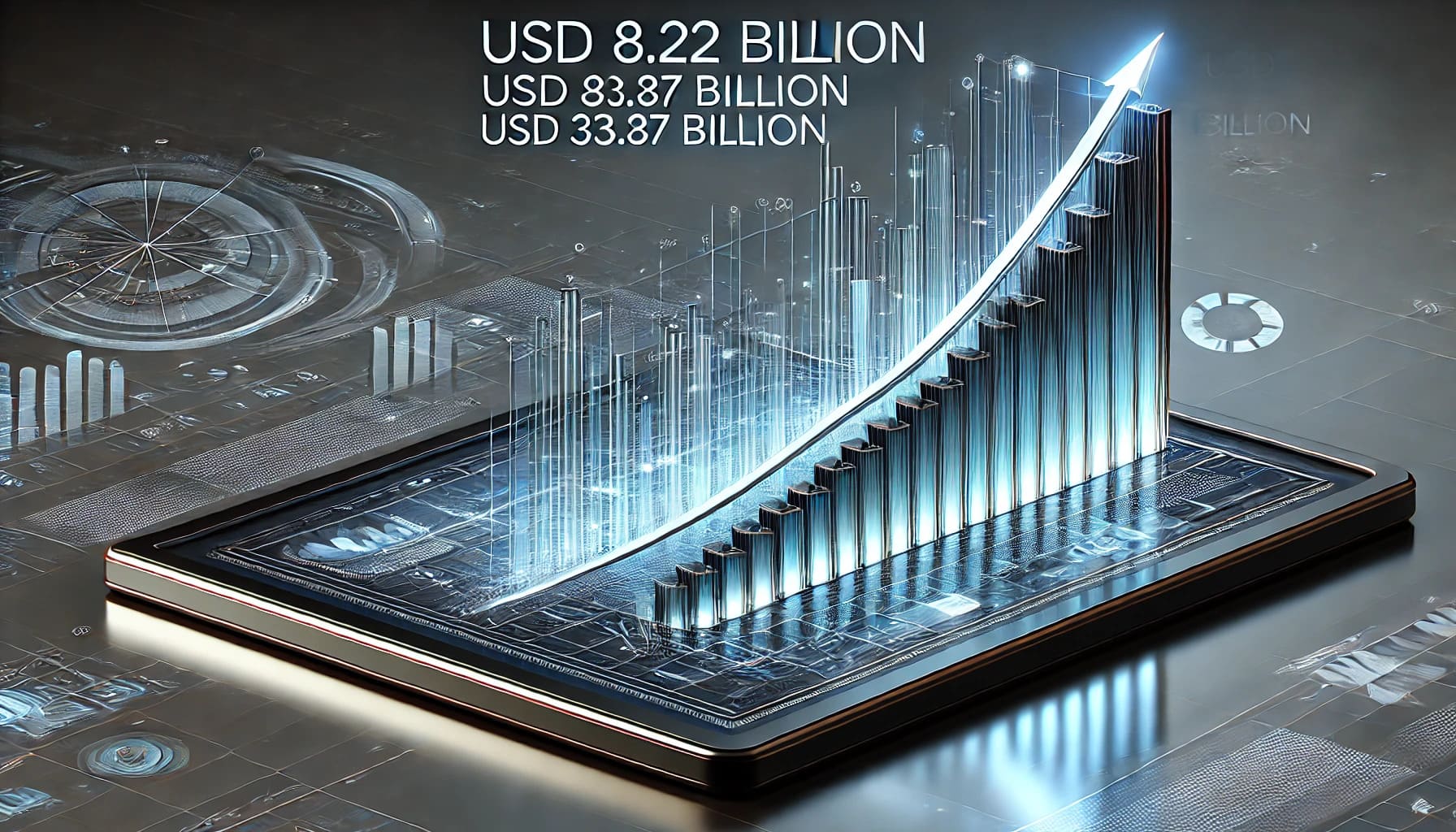

Data pipeline tools play a central role in modern data strategies. They help you manage and streamline the flow of data so that information stays accessible and actionable. The data pipeline market is growing quickly, with projections indicating growth from USD 8.22 billion in 2023 to USD 33.87 billion by 2030, which reflects rising demand for efficient data management solutions. As you navigate this landscape, tools like FineDataLink and FineBI offer features that can strengthen your data strategy. The following are the top 10 data pipeline tools for 2025:

In the realm of data management, data pipeline tools serve as the backbone for efficient data handling. These tools ensure that data flows seamlessly from one point to another, enabling you to harness the full potential of your data assets.

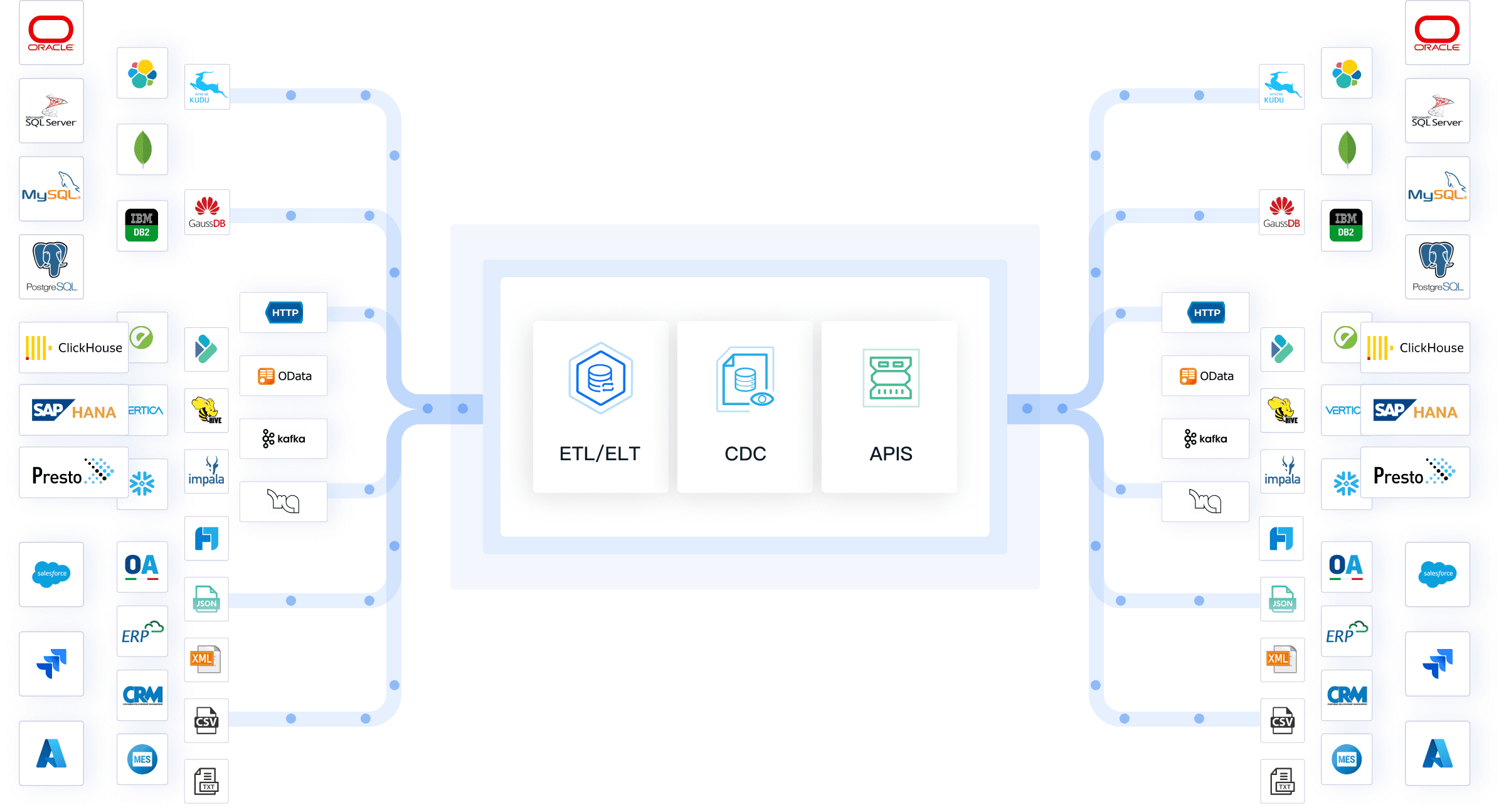

Data pipeline tools are software applications designed to automate the movement and transformation of data between different systems. They facilitate the extraction, transformation, and loading (ETL) of data, ensuring that it reaches its intended destination in a usable format. Unlike traditional ETL tools, data pipeline tools offer a broader scope, managing complex workflows and integrating with various data sources and destinations.
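To make the extract, transform, and load stages concrete, here is a minimal sketch using only the Python standard library. The CSV content, the `orders` table, and the function names are hypothetical and chosen purely for illustration; a real pipeline tool would add scheduling, retries, and monitoring on top of this.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; in practice this would come from a file, API, or database.
RAW_CSV = """order_id,customer,amount
1,alice,19.99
2,bob,
3,carol,5.00
"""

def extract(text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: drop rows with a missing amount and normalise types."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # skip incomplete records
        cleaned.append(
            (int(row["order_id"]), row["customer"].title(), float(row["amount"]))
        )
    return cleaned

def load(rows, conn):
    """Load: write the cleaned rows into a destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

# An in-memory SQLite database stands in for the real destination.
conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute(
    "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
).fetchall()
print(loaded)
```

The row for `bob` is dropped during the transform step because its amount is missing, so only two records reach the destination table.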

Data pipeline tools are crucial for modern data strategies because they streamline data workflows, improve data quality, and facilitate faster data-driven decisions. By automating data processes, these tools reduce manual intervention, minimize errors, and enhance efficiency. They also support real-time data processing, allowing you to make timely decisions based on the most current information available.

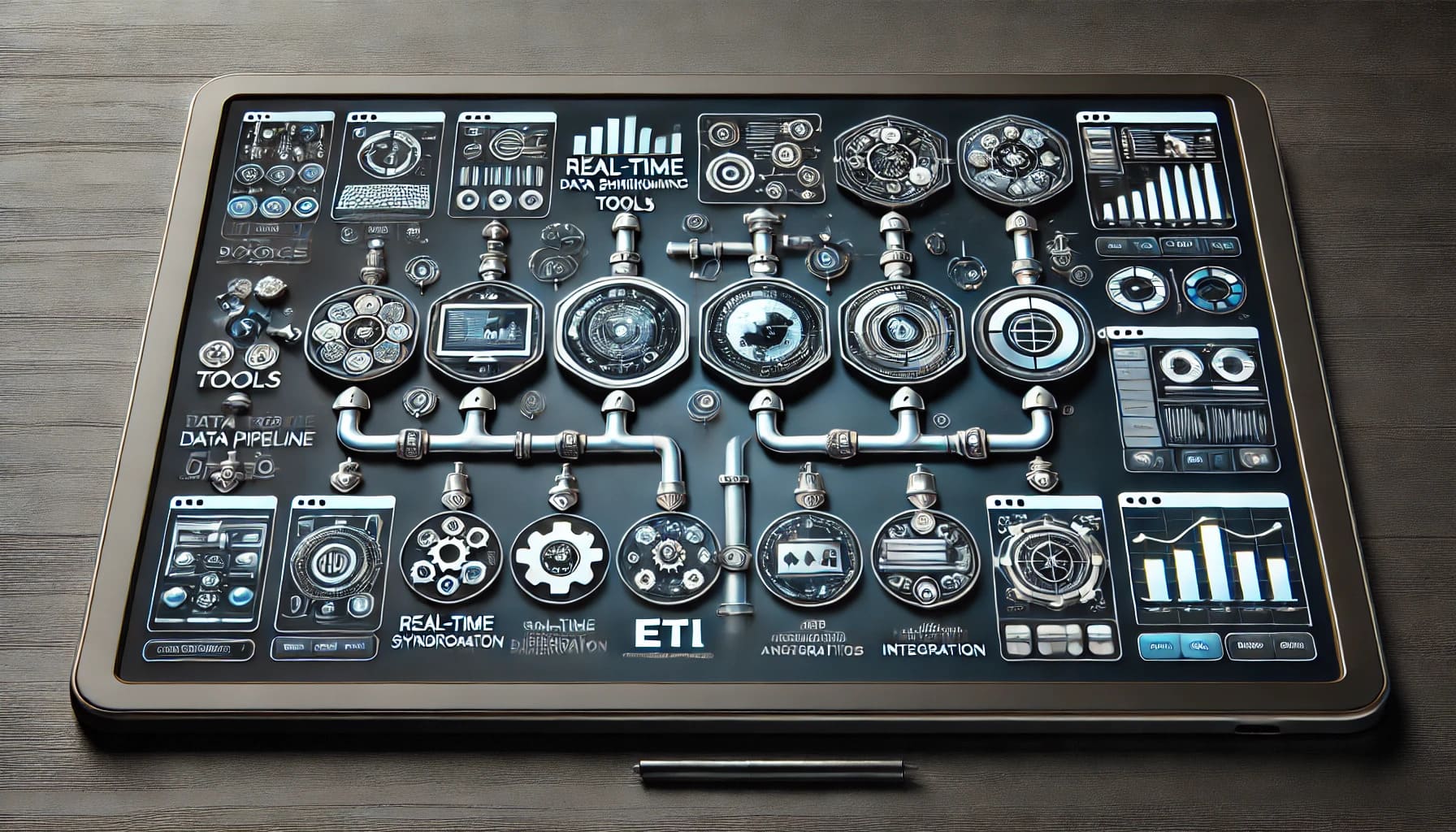

When selecting data pipeline tools, certain features stand out as essential for maximizing their effectiveness.

Scalability is a critical feature to consider. As your data needs grow, your tools must handle increased data volumes without compromising performance. For instance, Google Cloud Dataflow is renowned for its scalability, offering a serverless environment that adapts to your data processing demands.

Integration capabilities determine how well a tool can connect with various data sources and systems. Tools like AWS Glue and Azure Data Factory excel in this area, providing robust integration options for diverse data environments. These tools simplify the process of discovering, preparing, and combining data for analytics and machine learning.

Real-time processing enables you to access and analyze data as it is generated. This feature is vital for businesses that rely on up-to-the-minute insights. Google Cloud Dataflow, for example, offers a fully managed service for stream and batch data processing. Apache Airflow, by contrast, automates and schedules ETL workflows as batch jobs, so it is usually paired with a streaming engine when low-latency processing is required.
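The difference between batch and real-time (streaming) processing can be sketched in a few lines of plain Python. This is an illustration of the idea only, not how any of the tools named above implement it; the function names and the sample readings are assumptions.

```python
from typing import Iterable, Iterator

def batch_average(readings: list[float]) -> float:
    """Batch: wait for the full dataset, then compute the result once."""
    return sum(readings) / len(readings)

def streaming_average(readings: Iterable[float]) -> Iterator[float]:
    """Streaming: emit an updated average after every incoming event."""
    total = 0.0
    count = 0
    for value in readings:
        total += value
        count += 1
        yield total / count

# Hypothetical sensor readings arriving one at a time.
events = [10.0, 20.0, 30.0, 40.0]

batch_result = batch_average(events)
stream_results = list(streaming_average(iter(events)))

print(batch_result)
print(stream_results)
```

The batch function produces a single answer once all data is available, while the streaming version gives a usable answer after each event, which is the property that matters for up-to-the-minute insights.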

By understanding these key aspects of data pipeline tools, you can make informed decisions that align with your data strategy and business objectives.

FineDataLink, developed by FanRuan, is a comprehensive data integration platform. It simplifies complex data integration tasks with its low-code approach. This tool is designed to enhance data quality and streamline data processes, making it an ideal choice for businesses seeking efficient data management solutions.

Website: https://www.fanruan.com/en/finedatalink

Pros:

Cons: May require initial setup time to configure integrations.

Talend is an open-source data integration platform that offers a wide range of capabilities. It helps users connect data from various sources and perform ETL or ELT pipelines efficiently. Talend is known for its powerful data integration, quality, and governance features.

Website: https://www.talend.com/

Pros:

Cons: May have a steeper learning curve for beginners.

Apache Airflow is an open-source platform designed for orchestrating complex data workflows. It is widely used for scheduling and monitoring ETL processes. Airflow defines workflows in code, which gives you a flexible way to handle data transformations.

Website: https://airflow.apache.org/

Pros:

Cons: Requires coding knowledge for performing transformations.

AWS Glue is a fully managed, serverless data integration service offered by Amazon Web Services. It simplifies the process of discovering, preparing, and combining data for analytics, machine learning, and application development. AWS Glue provides a comprehensive set of tools for ETL (Extract, Transform, Load) processes, making it an ideal choice for businesses looking to streamline their data workflows.

Website: https://aws.amazon.com/glue/

Pros:

Cons: May require familiarity with AWS services for optimal use.

Google Cloud Dataflow is a fully managed service for stream and batch data processing. It enables you to develop and execute a wide range of data processing patterns, including ETL, real-time analytics, and machine learning. Google Cloud Dataflow is known for its scalability and flexibility, making it a popular choice for businesses with dynamic data processing needs.

Website: https://cloud.google.com/

Pros:

Cons: May involve a learning curve for users new to Google Cloud.

Microsoft Azure Data Factory is a cloud-based data integration service that allows you to create data-driven workflows for orchestrating data movement and transformation. It provides a platform for building scalable data pipelines, enabling you to integrate data from various sources and transform it into actionable insights.

Website: https://azure.microsoft.com/en-us/products/data-factory

Pros:

Cons: May require initial setup time to configure data connections.

Hevo Data is a no-code data pipeline platform that simplifies the process of integrating and transforming data from various sources. It is designed to automate data workflows, making it easier for businesses to manage their data without extensive technical expertise. Hevo Data supports a wide range of data sources, allowing you to connect and consolidate data effortlessly.

Website: https://hevodata.com/

Pros:

Cons: May have limitations in handling highly complex data transformations.

Rivery is a cloud-based data integration platform that focuses on providing a seamless experience for managing data pipelines. It offers a user-friendly interface that empowers you to build and manage data workflows without the need for extensive coding knowledge. Rivery is known for its flexibility and scalability, making it suitable for businesses of all sizes.

Website: https://rivery.io/

Pros:

Cons: May require initial setup time to configure data models and integrations.

Fivetran is a fully managed data pipeline service that automates data integration. It emphasizes reliable and accurate data by automatically syncing information from various sources to your destination. Because Fivetran handles the complexities of data integration, you can concentrate on analyzing and using your data.

Website: https://www.fivetran.com/

Pros:

Cons: May involve a learning curve for users new to automated data integration platforms.

Stitch Data stands out as a robust ELT (Extract, Load, Transform) tool. It simplifies the process of moving data from various sources to your data warehouse. As part of the Talend family, Stitch Data focuses on providing a seamless experience for data integration. You can rely on it to handle the initial stages of data transformation, ensuring compatibility with your chosen destination. This tool is particularly beneficial for businesses seeking an efficient and straightforward solution for data movement.

Website: https://www.stitchdata.com/

Pros:

Cons: May require additional tools for complex data transformations beyond initial compatibility adjustments.

Stitch Data's focus on ease of use and integration makes it a valuable asset in your data strategy toolkit. By leveraging its capabilities, you can streamline your data workflows and ensure that your data is ready for analysis and decision-making.
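The ELT pattern described above (load raw data first, transform inside the destination) can be sketched with SQLite standing in for a data warehouse. This is a generic illustration, not Stitch Data's API; the table names and sample records are hypothetical.

```python
import sqlite3

# An in-memory SQLite database stands in for the data warehouse.
conn = sqlite3.connect(":memory:")

# Extract + Load: land the raw records untouched (all text, no cleaning).
conn.execute("CREATE TABLE raw_events (user_id TEXT, event TEXT, amount TEXT)")
raw_rows = [
    ("u1", "purchase", "12.50"),
    ("u2", "purchase", "7.25"),
    ("u1", "refund", "-2.50"),
    ("u1", "purchase", "3.00"),
]
conn.executemany("INSERT INTO raw_events VALUES (?, ?, ?)", raw_rows)

# Transform: run SQL inside the destination to build an analysis-ready table.
conn.execute(
    """
    CREATE TABLE user_totals AS
    SELECT user_id, ROUND(SUM(CAST(amount AS REAL)), 2) AS net_amount
    FROM raw_events
    GROUP BY user_id
    """
)

user_totals = conn.execute(
    "SELECT user_id, net_amount FROM user_totals ORDER BY user_id"
).fetchall()
print(user_totals)
```

Keeping the raw table intact means the transformation can be rewritten and rerun later without extracting the data again, which is one common reason teams choose ELT over ETL.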

Selecting the right data pipeline tools is crucial for optimizing your data strategy. This section will guide you through assessing your data strategy and evaluating tool compatibility to ensure you make informed decisions.

Before choosing a data pipeline tool, you need to understand your data strategy. This involves analyzing your data flow and identifying key requirements.

Start by mapping out how data moves within your organization. Identify the sources of your data, such as databases, cloud services, or on-premises systems. Determine how data is processed and where it needs to go. For instance, if you rely on real-time analytics, tools like Google Cloud Dataflow can handle both stream and batch processing efficiently. Understanding your data flow helps you pinpoint the features you need in a data pipeline tool.
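One lightweight way to map a data flow is to write it down as a dependency graph and let Python's standard `graphlib` module work out a valid processing order. The step names below (`crm_db`, `web_events`, and so on) are hypothetical placeholders for your own sources and destinations.

```python
from graphlib import TopologicalSorter

# Hypothetical data flow: each key depends on the steps listed in its set.
data_flow = {
    "crm_db": set(),                    # source
    "web_events": set(),                # source
    "clean_customers": {"crm_db"},
    "sessionize_events": {"web_events"},
    "customer_360": {"clean_customers", "sessionize_events"},
    "dashboard": {"customer_360"},      # final destination
}

# A valid execution order in which every step runs after its dependencies.
run_order = list(TopologicalSorter(data_flow).static_order())

# Steps with no dependencies are the data sources.
sources = sorted(step for step, deps in data_flow.items() if not deps)

print(run_order)
print(sources)
```

Writing the flow down this way also surfaces circular dependencies early, because `TopologicalSorter` raises `graphlib.CycleError` when the graph contains a cycle.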

Once you understand your data flow, identify the specific requirements for your data pipeline. Consider factors like data volume, processing speed, and integration needs. If you need a tool that supports extensive data transformation, AWS Glue offers robust ETL capabilities with seamless integration into the AWS ecosystem. By clearly defining your requirements, you can narrow down the tools that best fit your needs.
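Once requirements are written down, narrowing the field can be as simple as filtering candidates against them. The sketch below uses made-up candidates (`tool_a` to `tool_d`) with invented capabilities; none of the figures describe a real product, and the three criteria are only examples of what you might check.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Candidate:
    name: str
    max_daily_gb: int          # data volume the tool is sized for (assumed figure)
    supports_streaming: bool
    connectors: frozenset

# Hypothetical requirements for the pipeline.
requirements = {
    "daily_gb": 200,
    "needs_streaming": True,
    "connectors": {"postgres", "s3"},
}

# Hypothetical candidates; replace with facts gathered from vendor documentation.
candidates = [
    Candidate("tool_a", 500, True, frozenset({"postgres", "s3", "kafka"})),
    Candidate("tool_b", 100, True, frozenset({"postgres", "s3"})),
    Candidate("tool_c", 1000, False, frozenset({"postgres", "s3", "mysql"})),
    Candidate("tool_d", 300, True, frozenset({"postgres"})),
]

def meets(candidate, req):
    """Return True only if the candidate satisfies every requirement."""
    return (
        candidate.max_daily_gb >= req["daily_gb"]
        and (candidate.supports_streaming or not req["needs_streaming"])
        and req["connectors"] <= candidate.connectors  # all needed connectors present
    )

shortlist = [c.name for c in candidates if meets(c, requirements)]
print(shortlist)
```

Here only `tool_a` passes: `tool_b` is undersized, `tool_c` lacks streaming, and `tool_d` is missing the `s3` connector.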

After assessing your data strategy, evaluate how well potential tools integrate with your existing systems and fit within your budget.

Ensure that the data pipeline tool you choose can integrate smoothly with your current infrastructure. Check if it supports the data sources and destinations you use. Azure Data Factory, for example, connects to a wide range of data stores and supports data transformation using various compute services. A tool that integrates well with your systems will streamline your data workflows and reduce the need for additional resources.

Budget is a critical factor when selecting a data pipeline tool. Consider both the initial costs and ongoing expenses. Some tools, like AWS Data Pipeline, offer a pay-as-you-go pricing model, which can be cost-effective for businesses with fluctuating data needs. Evaluate the total cost of ownership, including licensing fees, maintenance, and potential training costs. Balancing your budget with the tool's capabilities ensures you get the best value for your investment.
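A rough total cost of ownership comparison can be done with simple arithmetic. The function and every figure below are hypothetical assumptions for illustration; substitute quotes and usage estimates from your own evaluation.

```python
def total_cost_of_ownership(
    years,
    license_per_year=0.0,
    usage_per_month=0.0,
    maintenance_per_year=0.0,
    one_time_training=0.0,
):
    """Sum recurring costs over the period, then add one-time costs."""
    recurring_per_year = license_per_year + 12 * usage_per_month + maintenance_per_year
    return years * recurring_per_year + one_time_training

# Hypothetical three-year comparison of two pricing models.
subscription = total_cost_of_ownership(
    3, license_per_year=12000, maintenance_per_year=1500, one_time_training=2000
)
pay_as_you_go = total_cost_of_ownership(
    3, usage_per_month=700, maintenance_per_year=3000, one_time_training=4000
)

print(subscription)
print(pay_as_you_go)
```

With these made-up numbers the pay-as-you-go option comes out cheaper over three years, but the result flips quickly if monthly usage grows, which is why it helps to rerun the calculation for several usage scenarios.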

By carefully assessing your data strategy and evaluating tool compatibility, you can choose the right data pipeline tools that align with your business objectives and enhance your data management processes.

Choosing the right data pipeline tools is crucial for optimizing your data strategy. These tools streamline data workflows, improve data quality, and facilitate faster decisions. You should assess your specific needs and explore the tools discussed, such as Stitch Data, which offers a user-friendly interface and extensive integration options. As you look to the future, anticipate trends like enhanced automation and real-time processing capabilities. By staying informed and adaptable, you can ensure your data strategy remains robust and effective in the evolving landscape of data management.

The Author

Howard

Data Management Engineer & Data Research Expert at FanRuan
