Selecting the best data lake providers for enterprise demands careful evaluation of both features and pricing. Enterprises typically compare solutions using criteria such as distributed storage systems, data processing and analytics tools, governance and security capabilities, integration and ETL options, and visualization support. The following table outlines these key criteria:

| Criteria | Description |

|---|---|

| Distributed storage systems | Scalable, fault-tolerant storage using technologies like Hadoop HDFS or Amazon S3. |

| Data processing and analytics tools | Solutions for cleaning, transforming, and aggregating data, such as Apache Spark. |

| Data governance and security tools | Access control and quality assurance tools, including Apache Ranger and AWS IAM. |

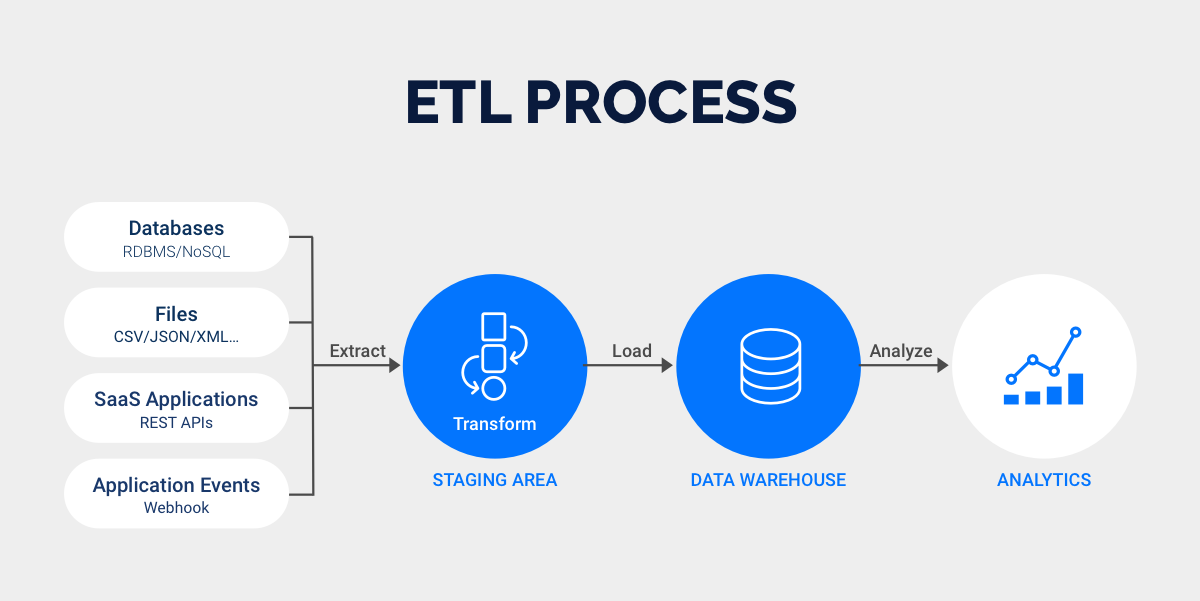

| Data integration and ETL tools | Platforms for extracting, transforming, and loading data, such as Apache NiFi. |

| Data visualization and reporting tools | Dashboard and reporting solutions like Tableau. |

Comparing capabilities and cost structures helps IT decision-makers identify the optimal provider for their business needs. Six of the best data lake providers for enterprise are:

- FineDataLink

- AWS

- Azure Synapse Analytics

- Google Cloud

- Snowflake

- Apache Iceberg

The Best Data Lake Providers For Enterprise

Provider list

Selecting the best data lake providers for enterprise requires a comprehensive review of the most frequently recommended platforms in recent industry reports and search results. Enterprises often consider a range of solutions to meet their data management needs. The following providers consistently appear in top recommendations:

- FineDataLink

- Amazon Web Services (AWS) Data Lake

- Microsoft Azure Data Lake Storage

- Google Cloud Platform (BigLake)

- Snowflake Cloud Data Platform

- Apache Iceberg

- Cloudera Data Platform (CDP)

- Databricks Unified Analytics Platform

- IBM Db2

- Oracle Database

These platforms offer robust capabilities for distributed storage, analytics, and integration, making them strong candidates among the best data lake providers for enterprise.

Key features

The best data lake providers for enterprise deliver a set of core features that support scalability, flexibility, and advanced analytics. Providers differentiate themselves through unique selling points and technical strengths. The following table summarizes standout features for leading platforms:

| Platform | Unique Selling Points |
|---|---|
| FineDataLink | Low-code integration, real-time data synchronization, advanced ETL/ELT, drag-and-drop interface, API integration, supports 100+ data sources |
| AWS Data Lake | AI integration (SageMaker), robust security (encryption at rest/in transit), simplified setup (Lake Formation) |
| Azure Data Lake Storage | Comprehensive AI support, effortless scalability, cost-effective storage options |
| Google Cloud BigLake | Multi-cloud support, strong AI/ML integration, unified data management |
| Snowflake | Multi-cloud flexibility, independent scaling of storage/compute, advanced analytics |
| Apache Iceberg | Open table format, high performance for large-scale analytics, compatibility with multiple engines |

Most leading providers offer elastic storage, schema-on-read, multi-tenancy, and integrated analytics. These features enable organizations to store and analyze data efficiently, supporting both structured and unstructured formats.

Pricing models

Pricing models play a critical role when evaluating the best data lake providers for enterprise. Providers offer several approaches to cost structure and flexibility. The following table outlines common pricing models:

| Pricing Model | Cost Structure Description | Flexibility Characteristics |
|---|---|---|
| Subscription Pricing | Stable and predictable costs, suitable for steady workloads | May lack dynamic scaling for fluctuating workloads |
| Tiered Pricing | Structured in levels, allows companies to start small and upgrade as needed | Flexible but may require monitoring as usage grows |
| Flat-Rate Pricing | Fixed cost for predefined resources, minimizes unexpected expenses | Less cost-effective for variable demand, may pay for unused resources |

FineDataLink provides affordable pricing with options for free trials and demos, making it accessible for organizations seeking cost-effective solutions. AWS, Azure, and Google Cloud typically use tiered or pay-as-you-go models, allowing enterprises to scale resources based on demand. Snowflake offers consumption-based pricing, enabling independent scaling of compute and storage. Apache Iceberg, as an open-source solution, does not charge licensing fees but may incur infrastructure costs.

Enterprises should assess their workload patterns and growth projections to select the most suitable pricing model among the best data lake providers for enterprise.
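
To make the trade-off between these pricing models concrete, the following minimal sketch compares the monthly cost of one workload under subscription, tiered, and flat-rate pricing. Every fee, tier boundary, and function name here is a hypothetical placeholder chosen for illustration, not a quote from any provider.

```python
from __future__ import annotations

import math

# All prices are hypothetical placeholders for illustration, not vendor quotes.
SUBSCRIPTION_FEE = 5_000.0   # fixed monthly fee, independent of usage
FLAT_RATE_FEE = 4_000.0      # monthly fee per block of predefined capacity
FLAT_RATE_BLOCK_TB = 100.0   # capacity included in one flat-rate block
TIERS = [                    # (upper bound in TB, USD per TB within that tier)
    (50.0, 60.0),
    (200.0, 45.0),
    (math.inf, 30.0),
]


def tiered_cost(usage_tb: float) -> float:
    """Charge each slice of usage at the rate of the tier it falls into."""
    cost, lower = 0.0, 0.0
    for upper, rate in TIERS:
        if usage_tb <= lower:
            break
        cost += (min(usage_tb, upper) - lower) * rate
        lower = upper
    return cost


def monthly_costs(usage_tb: float) -> dict[str, float]:
    """Return the monthly cost of the same workload under each pricing model."""
    blocks = max(1, math.ceil(usage_tb / FLAT_RATE_BLOCK_TB))
    return {
        "subscription": SUBSCRIPTION_FEE,
        "tiered": tiered_cost(usage_tb),
        "flat_rate": blocks * FLAT_RATE_FEE,  # unused capacity is still paid for
    }


def cheapest_model(usage_tb: float) -> str:
    """Name the pricing model with the lowest monthly cost for this usage."""
    costs = monthly_costs(usage_tb)
    return min(costs, key=costs.get)


if __name__ == "__main__":
    for usage in (20, 100, 150):
        print(usage, "TB ->", cheapest_model(usage), monthly_costs(usage))
```

With these placeholder numbers, a small workload favors tiered pricing, a workload that exactly fills one capacity block favors flat-rate, and a workload just past a block boundary favors the subscription. The crossover points depend entirely on the assumed rates.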

FineDataLink as The Best Data Lake Providers For Enterprise

Features

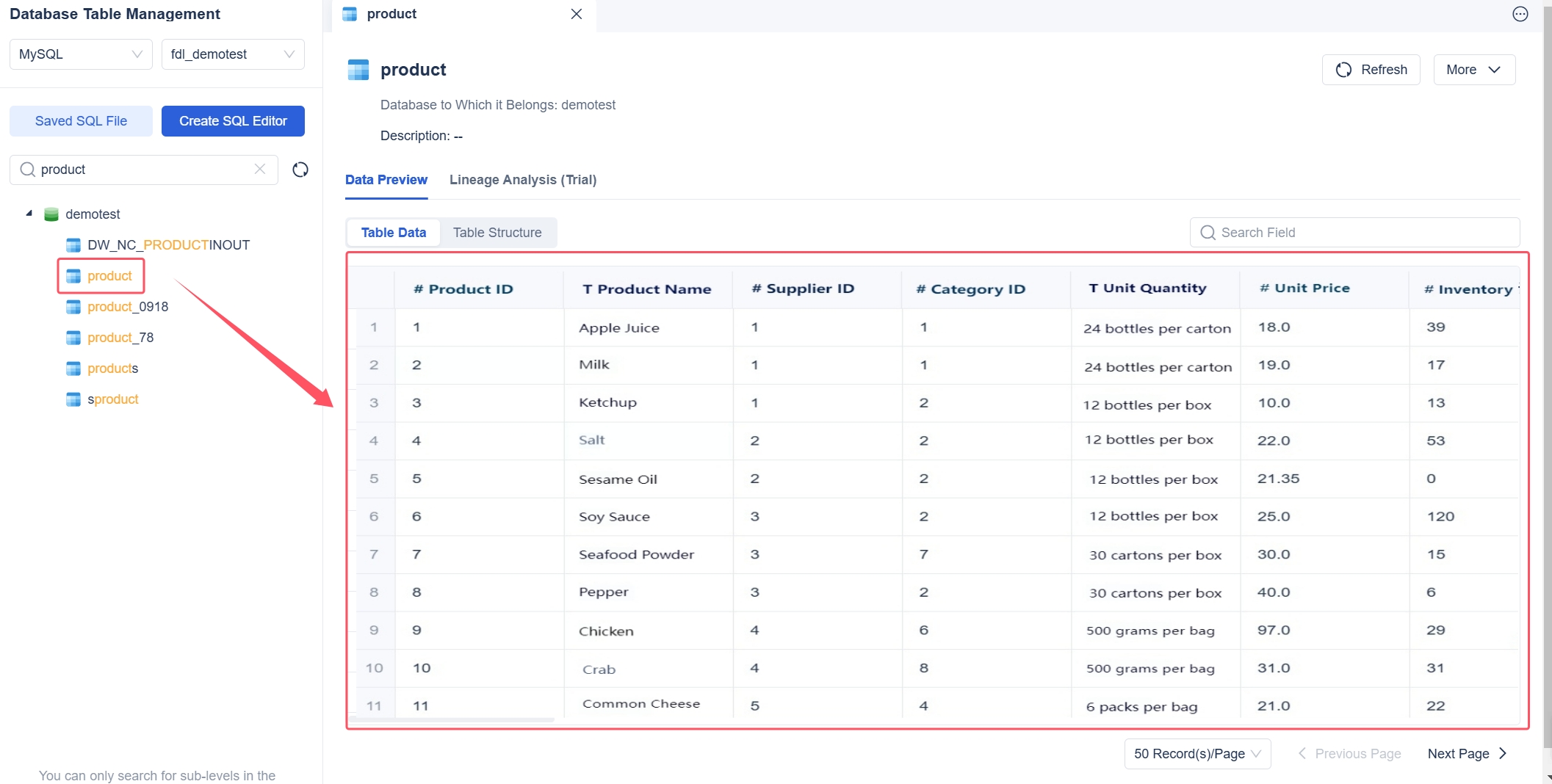

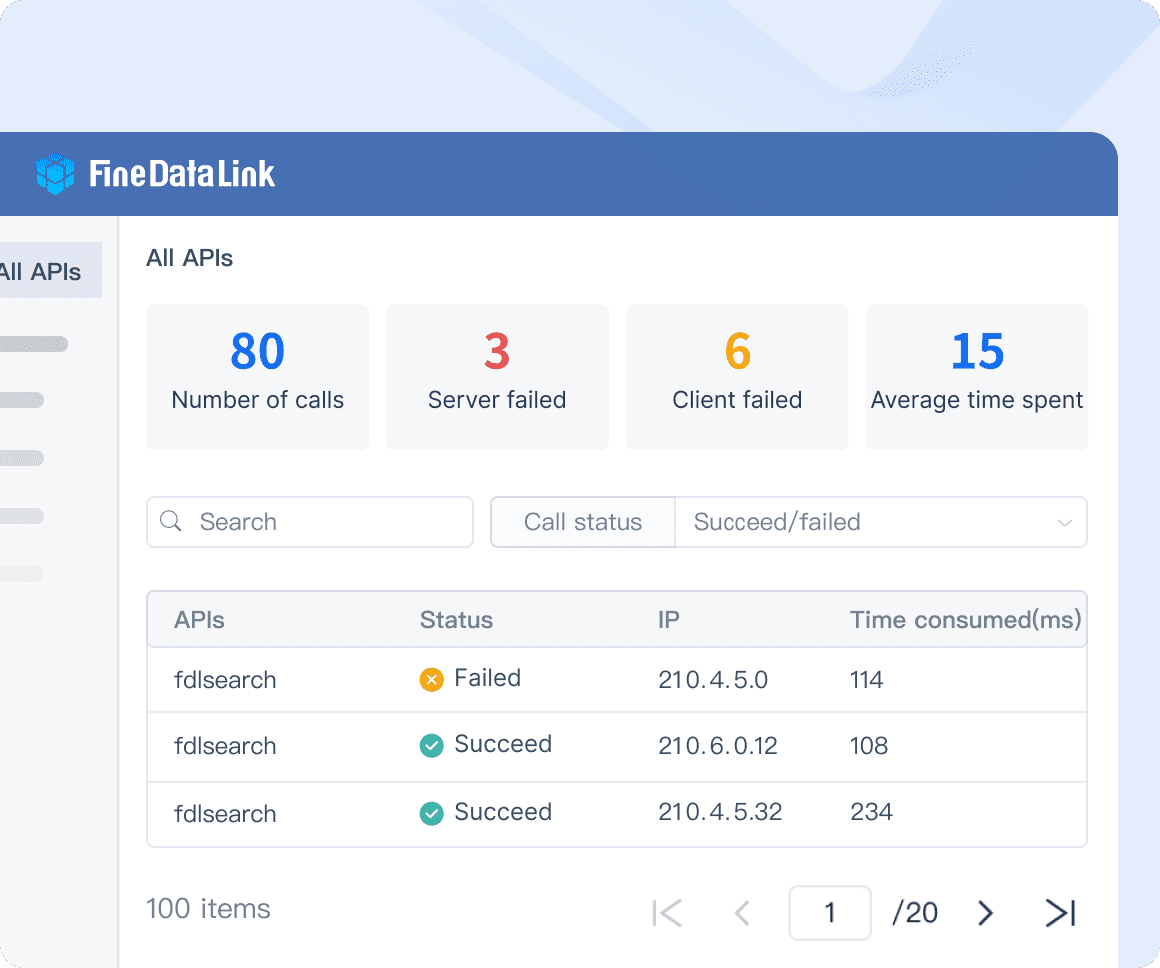

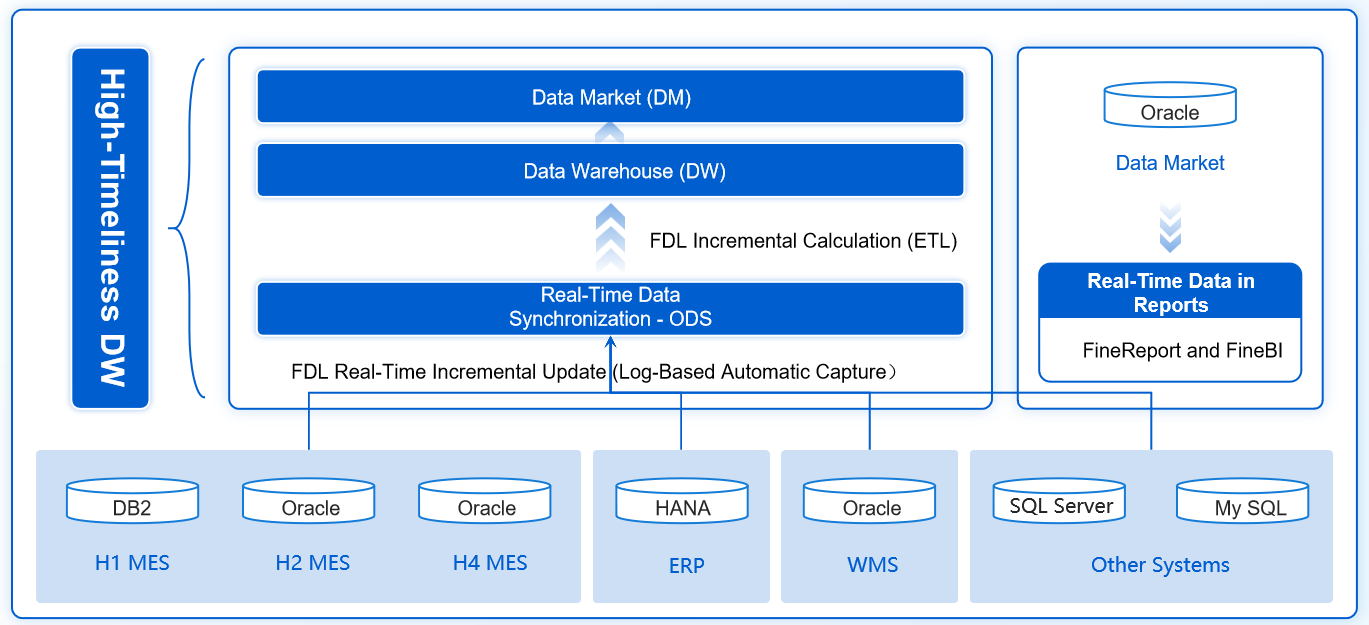

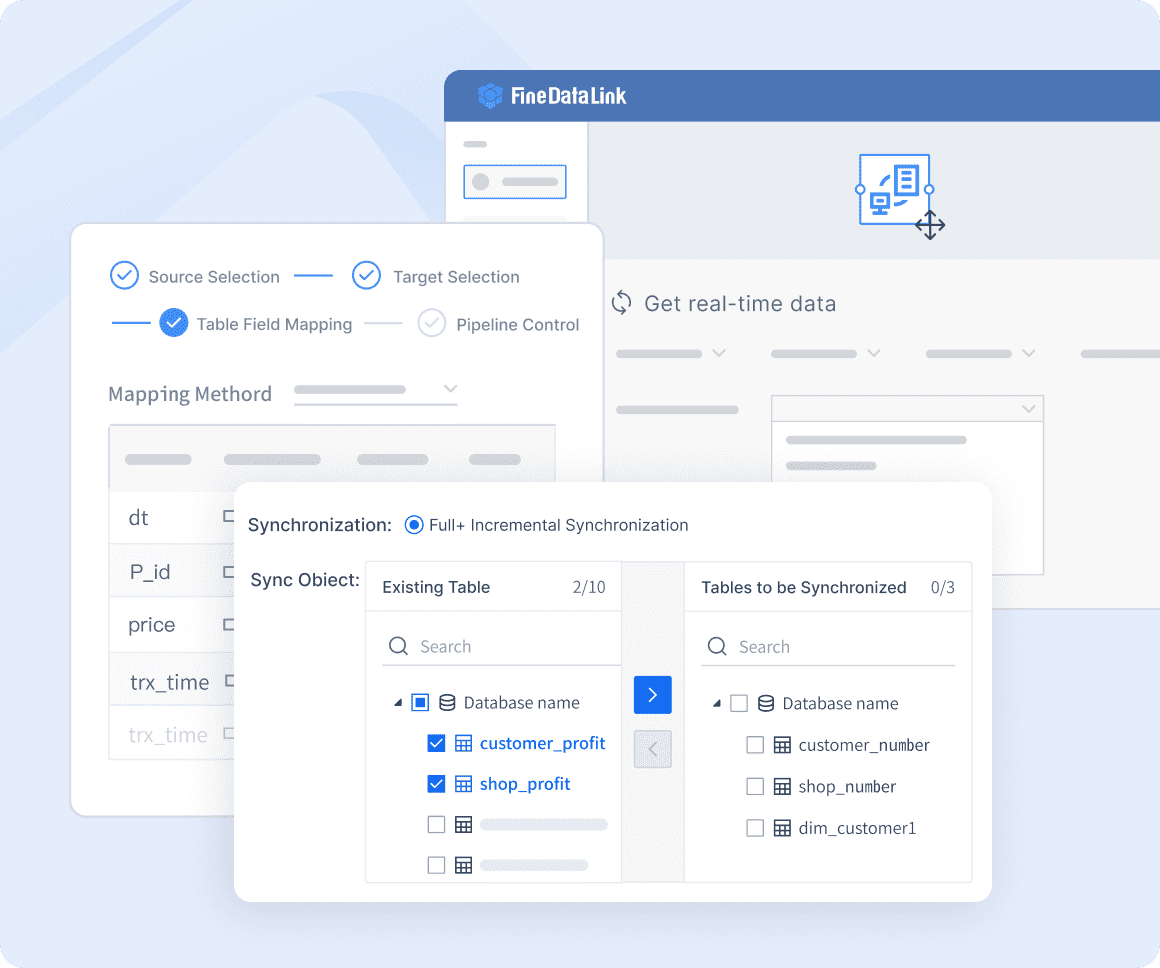

FineDataLink stands out among the best data lake providers for enterprise due to its comprehensive feature set. The platform offers a low-code environment that simplifies complex data integration tasks. Users benefit from real-time data synchronization, which enables seamless updates across multiple tables with minimal latency. The drag-and-drop interface allows data engineers and business intelligence teams to build data pipelines efficiently. FineDataLink supports advanced ETL and ELT processes, making it suitable for both offline and real-time data warehouse construction. The platform integrates with over 100 common data sources, including SaaS applications and cloud environments. API integration capabilities allow organizations to share data between systems quickly, often within minutes. Detailed documentation and step-by-step instructional videos further enhance usability for technical and non-technical users.

| Feature | Description |
|---|---|
| Low-code platform | Simplifies integration with visual tools and minimal coding |
| Real-time sync | Enables instant data updates across systems |
| ETL/ELT support | Facilitates data transformation and warehouse development |
| API integration | Allows rapid data sharing between applications |
| 100+ data sources | Connects to diverse databases and SaaS platforms |
| User-friendly interface | Drag-and-drop design and comprehensive documentation |

Pricing

FineDataLink provides flexible and affordable pricing options for enterprises. Organizations can request a free trial or demo to evaluate the platform before committing. The pricing structure is designed to accommodate businesses of various sizes, ensuring cost-effectiveness for both small teams and large enterprises. FineDataLink does not charge for unused resources, which helps companies manage budgets efficiently. The platform’s transparent pricing model allows IT decision-makers to forecast expenses with confidence. Enterprises seeking a scalable solution often find FineDataLink’s pricing competitive compared to other providers in the market.

Tip: Enterprises can contact FineDataLink directly for a customized quote based on specific integration and data volume requirements.

Use cases

FineDataLink addresses several common enterprise challenges in data management and analytics. Data engineers use the platform to eliminate data silos and automate ETL workflows. Business intelligence teams leverage real-time synchronization to build responsive dashboards and reports. Application developers integrate APIs to connect legacy systems with modern SaaS platforms. Organizations deploy FineDataLink for database migration, backup, and governance, ensuring high data quality and compliance. The platform supports both offline and real-time data warehouse construction, making it suitable for industries such as finance, retail, and healthcare.

- Real-time data warehouse construction for business analytics

- Automated ETL/ELT workflows for efficient data processing

- API integration for seamless connectivity between applications

- Data governance and quality assurance for compliance

- Database migration and backup for operational resilience

FineDataLink's versatility and ease of use position it as a top choice among the best data lake providers for enterprise.

AWS as The Best Data Lake Providers For Enterprise

Features

AWS stands out as one of the best data lake providers for enterprise environments. The platform delivers a robust set of features that support large-scale data management and analytics. AWS Data Lakes scale effortlessly, handling data volumes from terabytes to petabytes. Enterprises benefit from cost-effective storage and analysis through services such as Amazon S3 and Amazon Athena. The platform supports structured, unstructured, and semi-structured data, offering flexibility for diverse business needs. AWS Lake Formation and AWS Glue provide strict governance policies, protecting sensitive information and controlling access. Security remains a priority, with encryption and access controls ensuring compliance with industry standards. Integration with other AWS services, including Amazon Redshift, SageMaker, and QuickSight, enhances analytics and visualization capabilities.

| Feature | Description |
|---|---|
| Scalability | AWS Data Lakes can effortlessly scale to accommodate any size and type of data, from terabytes to petabytes. |
| Cost-Effectiveness | Utilizing services like Amazon S3 and Amazon Athena allows for storage and analysis at a lower cost than traditional data warehouses. |
| Flexibility | Supports various data types, including structured, unstructured, and semi-structured data, allowing for comprehensive data storage. |
| Data Governance | AWS Lake Formation and AWS Glue enable strict governance policies to protect sensitive data and control access. |
| Security | Offers robust security features such as encryption and access controls to safeguard data and ensure compliance. |
| Integration with AWS Services | Seamless integration with services like Amazon Redshift, SageMaker, and QuickSight enhances analytics and visualization capabilities. |

Pricing

AWS uses a pay-as-you-go pricing model for its data lake services. Enterprises pay only for the resources they use, which helps control costs and scale operations efficiently. Storage costs depend on the amount of data stored in Amazon S3, while analysis costs relate to the queries run in Amazon Athena or Redshift. AWS offers tiered pricing for larger volumes, providing discounts as usage increases. Organizations can estimate expenses using the AWS Pricing Calculator, which helps forecast costs based on projected workloads. This flexible pricing structure suits businesses with variable data needs and supports both small teams and large enterprises.

Note: AWS provides detailed documentation and support resources to help organizations optimize their data lake spending.

Use cases

AWS data lake solutions address a wide range of enterprise requirements. Businesses use AWS for analytics on large datasets, improving decision-making and identifying opportunities. Regulatory compliance becomes manageable with secure storage and governance features. Companies analyze clickstream data from websites to understand user behavior and optimize engagement. IoT data processing enables pattern detection and performance optimization. Machine learning and AI initiatives leverage AWS to build and train models using extensive datasets. Real-time analytics allow organizations to respond quickly to events. Self-service analytics empower users to explore and visualize data independently.

| Use Case | Description |
|---|---|
| Business Intelligence | Perform analytics on large datasets to improve decision-making and identify opportunities. |
| Regulatory Compliance | Store and manage data to meet regulatory requirements like GDPR. |
| Clickstream Analysis | Analyze user behavior from website logs for insights into engagement and conversion rates. |
| IoT Data Processing | Ingest and analyze data from IoT devices to detect patterns and optimize performance. |
| Machine Learning and AI | Build and train models using large datasets for predictive analytics and AI-driven decisions. |
| Real-time Analytics | Process streaming data for timely insights and responses to events. |
| Data Exploration | Enable self-service analytics for users to explore and visualize data. |

AWS continues to serve as a reliable choice for enterprises seeking scalable, secure, and flexible data lake solutions.

Azure as The Best Data Lake Providers For Enterprise

Features

Azure Data Lake stands as a leading choice among the best data lake providers for enterprise environments. The platform offers a robust set of features designed to meet the demands of large organizations. Azure Data Lake operates as a serverless solution, which automates management and streamlines operations. Enterprises benefit from high scalability and secure storage, supporting analytics workloads without creating data silos. Cost optimization features include tiered storage and policy management, allowing organizations to control expenses. Role-based access controls and single sign-on capabilities enhance security and simplify user management. On-demand analytics enable users to run parallel data processing programs and pay only for the jobs they execute. Cluster management through Azure HDInsight supports various frameworks, making data processing more flexible. Microsoft manages the platform, providing governance, encryption, and security features tailored for enterprise needs.

| Feature | Description |
|---|---|
| Serverless | Automatic management of data lake formation and operations |
| Highly Scalable and Secure | High-performance analytics workloads, eliminates data silos |
| Cost Optimization | Tiered storage and policy management for cost efficiency |
| Role-based Access Controls | Secure data management with role-based access and single sign-on |
| On-demand Analytics | Parallel data processing, pay per job |
| Cluster Management | Simplified cluster management, supports multiple data processing frameworks |
| Enterprise Features | Governance, encryption, and security managed by Microsoft |

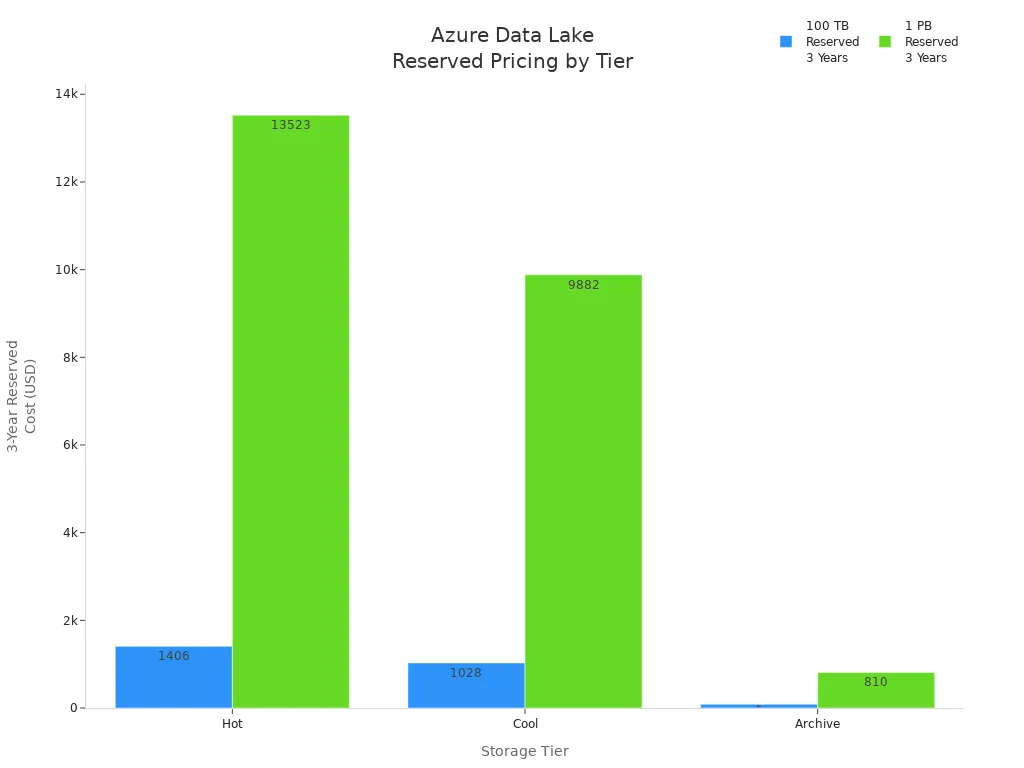

Pricing

Azure Data Lake provides a transparent and flexible pricing model. Organizations can select from Hot, Cool, and Archive storage tiers, each offering different cost structures. The Hot tier costs $0.15 per GB per month, while the Cool tier starts at $0.0208 per GB per month for the first 50 TB and drops to $0.0152 per GB per month for usage over 500 TB. The Archive tier offers the lowest price at $0.0152 per GB per month. Reserved pricing for three years ranges from $1,406 for 100 TB in the Hot tier to $810 for 1 PB in the Archive tier. The platform also charges for write and read operations: write operations cost $0.228 per 10,000 in the Premium tier and $0.13 per 10,000 in the Archive tier, while read operations cost $0.00182 per 10,000 in the Premium tier and up to $6.50 per 10,000 in the Archive tier. Data retrieval is free for the Premium and Hot tiers, $0.01 per GB for Cool, and $0.02 per GB for Archive. Azure Data Lake Analytics uses a pay-per-job model, allowing clients to define computational power through Analytics Units (AUs) and pay based on monthly reservations.

| Storage Tier | Cost per GB / Month | Reserved Cost for 3 Years (100 TB) | Reserved Cost for 3 Years (1 PB) |
|---|---|---|---|
| Hot | $0.15 | $1,406 | $13,523 |
| Cool | $0.0208 (first 50 TB), $0.0152 (over 500 TB) | $1,028 | $9,882 |
| Archive | $0.0152 | $84 | $810 |

Azure Data Lake Analytics simplifies cost management by allowing enterprises to pay only for the jobs they run. Clients can adjust computational resources as needed, optimizing both performance and expenses.
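
As a worked example of how the storage and retrieval charges combine, the sketch below uses only the Hot and Archive per-GB figures quoted in this section. It leaves out the Cool tier because the quoted rates do not cover its full volume range, and it ignores read and write operation charges. The function names are illustrative, and the rates should be checked against the current Azure price list before being used for budgeting.

```python
from decimal import Decimal

# Per-GB monthly storage rates and per-GB retrieval fees quoted in this section.
# Cool is omitted: the quoted rates leave part of its volume range unspecified.
STORAGE_RATE_PER_GB = {"hot": Decimal("0.15"), "archive": Decimal("0.0152")}
RETRIEVAL_FEE_PER_GB = {"hot": Decimal("0"), "archive": Decimal("0.02")}


def monthly_storage_cost(tier: str, stored_gb: int) -> Decimal:
    """Monthly storage charge for the given tier and stored volume."""
    return STORAGE_RATE_PER_GB[tier] * stored_gb


def retrieval_cost(tier: str, retrieved_gb: int) -> Decimal:
    """Charge for reading data back out of the given tier."""
    return RETRIEVAL_FEE_PER_GB[tier] * retrieved_gb


def monthly_total(tier: str, stored_gb: int, retrieved_gb: int) -> Decimal:
    """Storage plus retrieval; operation charges are deliberately excluded."""
    return monthly_storage_cost(tier, stored_gb) + retrieval_cost(tier, retrieved_gb)


if __name__ == "__main__":
    for tier in ("hot", "archive"):
        print(tier, monthly_total(tier, stored_gb=10_000, retrieved_gb=1_000))
```

For 10,000 GB stored and 1,000 GB retrieved in a month, these figures give $1,500 for Hot and $172 for Archive, which illustrates why rarely accessed data is usually moved to colder tiers.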

Use cases

Azure Data Lake supports a wide range of enterprise applications across industries. Healthcare organizations analyze electronic health records and medical imaging data to improve patient care. Financial services firms use the platform for real-time fraud detection by processing transaction data. Retailers optimize inventory management and design targeted marketing campaigns using customer data. Manufacturers predict machine failures by analyzing sensor data from equipment. Transportation companies leverage telemetry data to optimize routes and reduce fuel consumption.

- Healthcare: Analyzing electronic health records and medical imaging data to improve patient care.

- Financial services: Real-time fraud detection through transaction data analysis.

- Retail: Optimizing inventory management and creating targeted marketing campaigns based on customer data.

- Manufacturing: Predicting machine failures by analyzing sensor data from equipment.

- Transportation: Optimizing routes and reducing fuel consumption through telemetry data analysis.

Azure Data Lake delivers scalable, secure, and cost-effective solutions for organizations seeking advanced analytics and data management capabilities.

Google Cloud as The Best Data Lake Providers For Enterprise

Features

Google Cloud offers a scalable and secure platform for enterprise data lake solutions. Organizations ingest data from various systems with ease. The platform stores any type or volume of data in full fidelity, supporting structured, semi-structured, and unstructured formats. Real-time and batch data processing capabilities allow businesses to manage data efficiently. Google Cloud supports analysis using multiple programming languages and third-party applications. Object storage provides practically limitless capacity, enabling companies to scale up or down based on business requirements. The platform simplifies management compared to traditional storage solutions, reducing operational overhead.

- Scalable and secure data ingestion from diverse sources

- Storage of all data types and volumes with full fidelity

- Real-time and batch processing options

- Support for multiple programming languages and third-party tools

- Object storage with virtually unlimited capacity

- Flexible scaling to meet changing business needs

- Simplified management for enterprise environments

Pricing

Google Cloud data lake services utilize a pay-as-you-go pricing model. Enterprises avoid significant upfront infrastructure costs, paying only for the resources they use. This approach supports dynamic scaling, allowing organizations to adjust storage and compute based on demand. The pricing structure benefits businesses with fluctuating workloads, providing cost efficiency and transparency. Companies can estimate expenses using Google Cloud’s pricing calculator, which helps forecast costs for storage, data processing, and analytics.

- Pay-as-you-go pricing eliminates upfront infrastructure investment

- Charges based on actual resource usage

- Supports dynamic scaling for variable workloads

- Transparent cost estimation tools available

Tip: Enterprises should monitor usage patterns to optimize spending and leverage Google Cloud’s cost management features.

Use cases

Enterprises deploy Google Cloud data lake solutions for a variety of applications. The platform enables efficient and scalable data storage, designed for processing and securing stored data. Companies aggregate data from multiple sources, centralizing diverse datasets for unified analysis. Real-time analytics become possible, supporting rapid decision-making. Organizations create machine learning models using centralized data, enhancing predictive capabilities. Google Cloud ingests data from social media, IoT devices, and transactional systems, providing a single source of truth for the organization.

- Efficient and scalable storage for enterprise data

- Processing and securing data from multiple sources

- Centralizing diverse datasets for unified analysis

- Real-time analytics for rapid insights

- Machine learning model development using centralized data

- Ingesting data from social media, IoT, and transactional systems

Google Cloud remains a strong contender among the best data lake providers for enterprise, offering robust features, flexible pricing, and versatile use cases.

Snowflake as The Best Data Lake Providers For Enterprise

Features

Snowflake consistently ranks among the best data lake providers for enterprise. The platform offers a fully managed, multi-cluster shared data architecture. Snowflake separates compute from storage using virtual warehouses, and automatic clustering and micro-partitioning support high query performance. Enterprises benefit from advanced data quality monitoring, including freshness, volume, and schema drift detection. The governance framework includes differential privacy features and extended classification capabilities for global compliance. Snowflake provides dynamic data masking, cross-account sharing, and comprehensive audit logging. Data lineage tracking supports transparency and accountability. The platform ensures security and scalability, supports semi-structured data, and requires almost no administration. Snowflake supports compliance with standards and regulations such as SOC 2 Type II, HIPAA, GDPR, and CCPA. Features like data encryption, multi-factor authentication, and role-based access control enhance compliance and data protection.

Pricing

Snowflake uses a usage-based pricing model. Costs depend on actual usage of compute, storage, and cloud services. Pricing varies by edition, hosting region, cloud provider, and available discounts. The following table summarizes the cost per credit for each edition:

| Edition | Cost Per Credit (USD) |
|---|---|
| Standard | $2.00 - $3.10 |
| Enterprise | $3.00 - $4.65 |
| Business Critical | $4.00 - $6.20 |
| VPS (Virtual Private Snowflake) | $6.00 - $9.30 |

Snowflake allows organizations to select the edition that matches their requirements. Enterprises can optimize costs by monitoring usage and leveraging discounts. The flexible pricing model supports both small teams and large organizations.
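
The per-credit ranges in the table above can be turned into a rough compute budget once a credit count is known. The sketch below is a minimal illustration: the function name is hypothetical, the credit count is an input the reader must supply from their own usage data, and storage and cloud services charges are not included.

```python
from __future__ import annotations

from decimal import Decimal

# Cost per credit (USD) by edition as (low, high), copied from the table above.
CREDIT_PRICE_RANGE = {
    "Standard": (Decimal("2.00"), Decimal("3.10")),
    "Enterprise": (Decimal("3.00"), Decimal("4.65")),
    "Business Critical": (Decimal("4.00"), Decimal("6.20")),
    "VPS": (Decimal("6.00"), Decimal("9.30")),
}


def compute_cost_range(edition: str, credits_used: int) -> tuple[Decimal, Decimal]:
    """Return the (low, high) compute cost in USD for a number of credits."""
    low, high = CREDIT_PRICE_RANGE[edition]
    return low * credits_used, high * credits_used


if __name__ == "__main__":
    for edition in CREDIT_PRICE_RANGE:
        low, high = compute_cost_range(edition, credits_used=1_000)
        print(f"{edition}: ${low} to ${high}")
```

For example, 1,000 credits on the Enterprise edition would fall between $3,000 and $4,650, depending on region, cloud provider, and discounts.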

Use cases

Snowflake addresses a wide range of enterprise needs, making it a top choice among the best data lake providers for enterprise. The platform excels in data warehousing and analytics, enabling clients to focus on data value rather than technical issues. Snowflake supports large volumes of structured and semi-structured data, which improves report generation and insights. Enterprises use Snowflake for data lakes and multi-structured data processing, simplifying the handling of diverse formats and allowing direct querying of raw data. This reduces bottlenecks and enhances accessibility. For example, a mid-sized ecommerce company centralized data from multiple sources into Snowflake, resulting in faster insights and accurate forecasting. A healthcare provider streamlined analysis by ingesting various data formats directly, reducing preprocessing time and improving governance.

| Use Case | Description |
|---|---|
| Data warehousing and analytics | Enables efficient report generation and insight discovery from large volumes of structured data. |
| Data lakes and multi-structured data processing | Simplifies handling and querying of diverse data formats, improving accessibility and reducing bottlenecks. |

Snowflake’s versatility and robust feature set position it as a leader among the best data lake providers for enterprise.

Apache Iceberg as The Best Data Lake Providers For Enterprise

Features

Apache Iceberg has emerged as a leading solution among the best data lake providers for enterprise. Enterprises rely on its robust architecture to manage large-scale datasets with high reliability. The table format delivers transaction consistency through point-in-time snapshot isolation, supporting multiple independent applications that process the same dataset simultaneously. Iceberg enables efficient updates to massive tables, simplifying ETL pipelines by allowing data operations in place. Organizations benefit from improved data management as datasets evolve, with full schema evolution and time travel capabilities that track changes over time. Iceberg supports rollback to prior versions, helping teams correct issues quickly. Advanced planning and filtering features enhance query performance. Iceberg also provides expressive SQL commands for merging, updating, and deleting data, file-level tracking for atomic changes, and hidden partitioning to simplify query execution.

- Transaction consistency with snapshot isolation

- Support for simultaneous processing by multiple applications

- Efficient updates for large-scale tables

- Simplified ETL pipelines operating on data in place

- Full schema evolution and time travel for historical queries

- Rollback to prior versions for rapid issue correction

- Advanced planning and filtering for performance

- Expressive SQL commands for data management

- File-level tracking and hidden partitioning
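
The snapshot, time travel, and rollback ideas in the list above can be illustrated with a small toy model. This is a conceptual sketch in plain Python, not the Apache Iceberg API: the `Snapshot` and `ToyTable` classes are hypothetical, and a real table tracks data files and metadata rather than in-memory rows.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class Snapshot:
    """Immutable view of the table contents at one commit."""
    snapshot_id: int
    rows: tuple


@dataclass
class ToyTable:
    """Toy model: an append-only snapshot log plus a pointer to the current one."""
    snapshots: list = field(default_factory=list)
    current_id: int | None = None

    def commit(self, rows: list) -> int:
        """Publish a new snapshot; earlier snapshots stay readable."""
        snap = Snapshot(len(self.snapshots) + 1, tuple(dict(r) for r in rows))
        self.snapshots.append(snap)
        self.current_id = snap.snapshot_id
        return snap.snapshot_id

    def read(self, snapshot_id: int | None = None) -> list:
        """Read the current snapshot, or an older one (time travel)."""
        target = self.current_id if snapshot_id is None else snapshot_id
        for snap in self.snapshots:
            if snap.snapshot_id == target:
                return [dict(r) for r in snap.rows]
        raise KeyError(f"unknown snapshot: {target}")

    def rollback(self, snapshot_id: int) -> None:
        """Point the table at an earlier snapshot without deleting history."""
        if not any(s.snapshot_id == snapshot_id for s in self.snapshots):
            raise KeyError(f"unknown snapshot: {snapshot_id}")
        self.current_id = snapshot_id


if __name__ == "__main__":
    table = ToyTable()
    v1 = table.commit([{"id": 1, "status": "new"}])
    v2 = table.commit([{"id": 1, "status": "shipped"}])
    print(table.read())      # latest snapshot
    print(table.read(v1))    # time travel to the first commit
    table.rollback(v1)
    print(table.read())      # current pointer is back at the first commit
```

Because each commit produces a new immutable snapshot, a reader that pinned an earlier snapshot ID keeps seeing consistent data while writers continue to commit, which is the intuition behind snapshot isolation.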

Pricing

Among the best data lake providers for enterprise, Apache Iceberg stands out for its flexible and cost-effective approach. Its design allows organizations to scale storage and compute resources independently, which can lead to significant cost savings. Iceberg simplifies data management by reducing the need for multiple data copies across different warehouses, decreasing operational costs and improving efficiency. Enterprises can optimize their data warehouse costs by leveraging Iceberg’s architecture, which supports pairing with various cloud-native compute engines. This adaptability helps organizations avoid vendor lock-in and negotiate better pricing. Moving data to Iceberg consolidates storage, lowers complexity, and enables direct access for experimentation with different tools. The independent scaling of storage and compute layers in a lakehouse architecture provides flexibility in resource planning and cost control.

- Independent scaling of storage and compute resources

- Reduced operational costs through data consolidation

- Elimination of vendor lock-in for better pricing negotiation

- Adaptability with cloud-native compute engines

- Lower complexity and direct data access for experimentation

Use cases

Organizations evaluating the best data lake providers for enterprise often choose Apache Iceberg for its versatility in handling complex data management scenarios. Iceberg supports schema evolution, allowing modification of table structures without disrupting existing queries. The time travel feature enables historical analysis by querying data as it existed at specific points in time. Efficient data processing automates complex workflows, improving query performance and reducing errors. Enterprises in regulated industries use Iceberg for compliance and auditing, as it supports robust data auditing and helps meet regulatory requirements. Companies like Netflix utilize Iceberg to manage large datasets efficiently, leveraging time travel for tracking changes and verifying compliance.

| Use Case | Description |
|---|---|
| Schema Evolution | Modify table structures without disrupting existing queries. |
| Time Travel | Query historical data for analysis and compliance. |
| Efficient Data Processing | Automate workflows to improve performance and reduce errors. |
| Compliance and Auditing | Support data auditing and regulatory requirements in regulated industries. |

Apache Iceberg continues to set the standard among the best data lake providers for enterprise, offering advanced features, flexible pricing, and proven use cases for modern data-driven organizations.

Comparison Tables of The Best Data Lake Providers For Enterprise

Feature table

Selecting the right data lake provider requires a clear understanding of each platform’s strengths. The following table presents a side-by-side comparison of core features for the leading enterprise solutions. This overview helps IT decision-makers quickly identify which provider aligns best with their organizational needs.

| Provider | Low-Code Integration | Real-Time Sync | ETL/ELT Support | API Integration | Multi-Cloud Support | Advanced Security | Data Governance | Schema Evolution | Time Travel | AI/ML Integration | User-Friendly Interface | Open Source |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| FineDataLink | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | No | No | Yes | No |
| AWS | No | Yes | Yes | Yes | Yes | Yes | Yes | Limited | No | Yes | Moderate | No |
| Azure | No | Yes | Yes | Yes | Yes | Yes | Yes | Limited | No | Yes | Moderate | No |
| Google Cloud | No | Yes | Yes | Yes | Yes | Yes | Yes | Limited | No | Yes | Moderate | No |
| Snowflake | No | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | No |
| Apache Iceberg | No | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | No | Moderate | Yes |

Note: FineDataLink stands out for its low-code platform, real-time synchronization, and user-friendly interface. Snowflake and Apache Iceberg offer advanced features like schema evolution and time travel, which support complex analytics and compliance needs.
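
A feature matrix like the one above can also be used programmatically to shortlist providers against a set of must-have capabilities. The sketch below encodes a subset of the table's columns. The dictionary keys and the `shortlist` function are hypothetical names, and the values are copied from the table rather than independently verified.

```python
from __future__ import annotations

# Subset of the feature table above; values copied as "Yes", "No", or "Limited".
FEATURES = {
    "FineDataLink": {"low_code": "Yes", "schema_evolution": "Yes",
                     "time_travel": "No", "ai_ml": "No", "open_source": "No"},
    "AWS": {"low_code": "No", "schema_evolution": "Limited",
            "time_travel": "No", "ai_ml": "Yes", "open_source": "No"},
    "Azure": {"low_code": "No", "schema_evolution": "Limited",
              "time_travel": "No", "ai_ml": "Yes", "open_source": "No"},
    "Google Cloud": {"low_code": "No", "schema_evolution": "Limited",
                     "time_travel": "No", "ai_ml": "Yes", "open_source": "No"},
    "Snowflake": {"low_code": "No", "schema_evolution": "Yes",
                  "time_travel": "Yes", "ai_ml": "Yes", "open_source": "No"},
    "Apache Iceberg": {"low_code": "No", "schema_evolution": "Yes",
                       "time_travel": "Yes", "ai_ml": "No", "open_source": "Yes"},
}


def shortlist(required: set[str], accept_limited: bool = False) -> list[str]:
    """Return providers whose table entry satisfies every required feature."""
    accepted = {"Yes", "Limited"} if accept_limited else {"Yes"}
    return [
        provider
        for provider, features in FEATURES.items()
        if all(features[name] in accepted for name in required)
    ]


if __name__ == "__main__":
    print(shortlist({"schema_evolution", "time_travel"}))
    print(shortlist({"schema_evolution", "ai_ml"}, accept_limited=True))
```

Treating "Limited" as a separate value rather than folding it into yes or no keeps the decision explicit: a team can see how the shortlist changes when partial support is acceptable.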

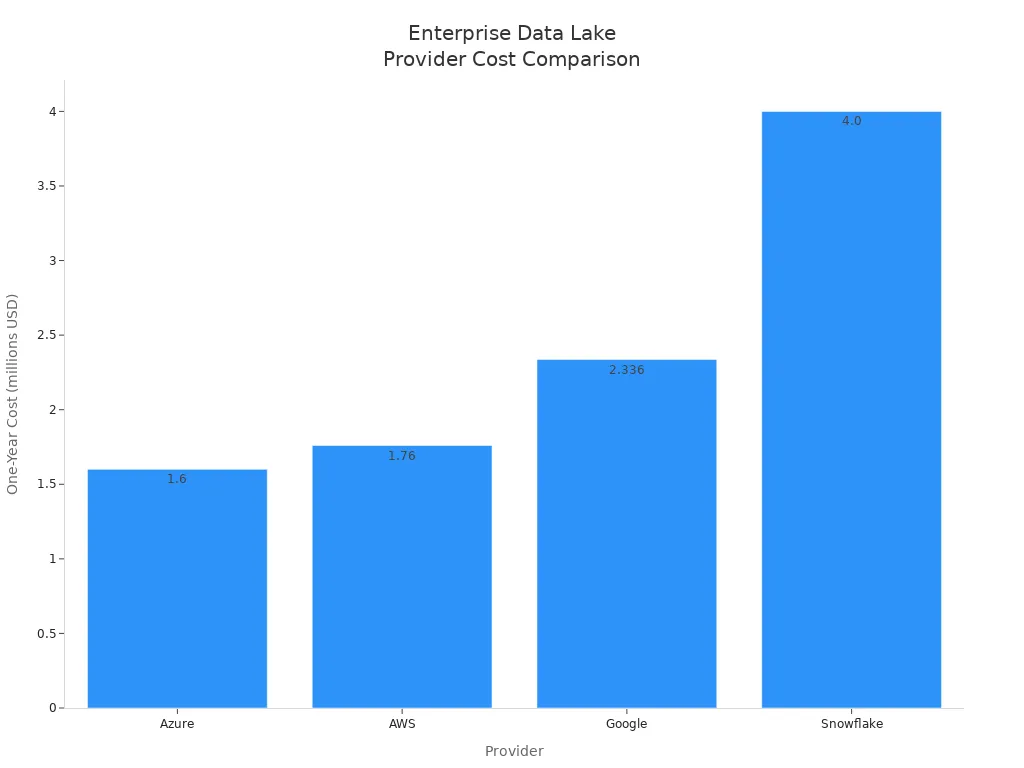

Pricing table

Cost remains a critical factor when evaluating the best data lake providers for enterprise. The following table compares the pricing models and estimated one-year costs for major platforms. This comparison provides a clear view of total cost of ownership, helping organizations plan their budgets effectively.

| Provider | Pricing Model | Free Trial | Estimated One-Year Cost (USD, millions) | Cost Comparison | Notes |
|---|---|---|---|---|---|
| FineDataLink | Subscription/Flexible | Yes | Custom | Competitive | Affordable, scalable, contact for quote |
| AWS | Pay-as-you-go/Tiered | No | 1.76 | About 10% higher than Azure | Usage-based, discounts for high volume |
| Azure | Tiered/Reserved | No | 1.6 | Lowest cost | Multiple storage tiers, pay-per-job option |
| Google Cloud | Pay-as-you-go | No | 2.336 | 46% higher than Azure | Dynamic scaling, transparent billing |
| Snowflake | Usage-based | Yes | 4.0 | 2.5x higher than Azure | Compute and storage billed separately |
| Apache Iceberg | Open Source | N/A | Varies (infra only) | Depends on infra | No license fee, infra and support costs |

Organizations seeking the best data lake providers for enterprise should consider both feature sets and total cost of ownership. Azure offers the lowest estimated annual cost among the major cloud providers, while Snowflake’s advanced capabilities come at a premium. FineDataLink provides flexible pricing and a free trial, making it accessible for a range of business sizes. Apache Iceberg, as an open-source solution, allows for cost optimization but requires careful planning for infrastructure and support.
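
The relative cost figures can be re-derived from the estimated one-year costs in the pricing table. The short sketch below does that arithmetic with Azure as the baseline. The function name is illustrative, and the inputs are the rounded table values, so results are approximate.

```python
# Estimated one-year costs in millions of USD, copied from the pricing table.
ESTIMATED_COST_MUSD = {
    "AWS": 1.76,
    "Azure": 1.6,
    "Google Cloud": 2.336,
    "Snowflake": 4.0,
}


def percent_above(provider: str, baseline: str = "Azure") -> float:
    """Percentage by which a provider's estimate exceeds the baseline's."""
    ratio = ESTIMATED_COST_MUSD[provider] / ESTIMATED_COST_MUSD[baseline]
    return round((ratio - 1) * 100, 1)


if __name__ == "__main__":
    for name in ESTIMATED_COST_MUSD:
        print(f"{name}: {percent_above(name)}% above Azure")
```

With the rounded table values, AWS works out to roughly 10% above Azure, Google Cloud to 46%, and Snowflake to 150% (that is, 2.5 times the Azure estimate).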

Choosing The Best Data Lake Providers For Enterprise

Scalability

Scalability remains a critical factor when evaluating the best data lake providers for enterprise. Organizations must ensure that their chosen platform can handle increasing data volumes and adapt to changing business needs. Providers such as AWS, Azure, Snowflake, and Google Cloud offer robust scalability features. AWS delivers fully managed ETL services with automated data discovery. Azure combines data warehousing and big data capabilities, scaling to petabytes. Snowflake separates compute and storage, enabling unlimited scaling across multiple cloud providers. Google Cloud utilizes a serverless architecture with automatic scaling for big data analytics.

| Platform | Scalability Features |
|---|---|
| AWS | Fully managed ETL service with automated data discovery and cataloging |
| Azure | Combines data warehousing and big data, scales to petabytes |
| Snowflake | Unlimited scaling, separates compute and storage |
| Google Cloud | Serverless architecture, automatic scaling for analytics |

Decision-makers should assess current and projected data growth to select a provider that supports seamless expansion.
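Projected data growth can be estimated with a simple compound-growth calculation. The sketch below is illustrative only: the starting volume, growth rate, and capacity limit are hypothetical placeholders, and a real forecast would use measured ingestion rates.

```python
def project_volume_tb(current_tb, annual_growth_rate, years):
    """Return projected volume in TB for year 0 through `years`, compounding annually."""
    return [current_tb * (1 + annual_growth_rate) ** year for year in range(years + 1)]


def first_year_exceeding(projection, capacity_tb):
    """Return the first year whose projected volume exceeds capacity, or None."""
    for year, volume in enumerate(projection):
        if volume > capacity_tb:
            return year
    return None


# Hypothetical inputs: 200 TB today, growing 40% per year, planned capacity of 1,000 TB.
projection = project_volume_tb(current_tb=200, annual_growth_rate=0.40, years=5)
breach_year = first_year_exceeding(projection, capacity_tb=1000)

for year, volume in enumerate(projection):
    print(f"Year {year}: {volume:,.0f} TB")
print(f"Capacity exceeded in year: {breach_year}")
```

A result like this indicates how soon a fixed-capacity deployment would need to expand, which is the point at which automatic or serverless scaling starts to matter.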

Integration

Integration capabilities determine how easily a data lake platform connects with existing systems and applications. The best data lake providers for enterprise offer extensive integration options to support diverse environments. Amazon excels for organizations within the AWS ecosystem, providing rich integration and scalability. Microsoft delivers strong enterprise features and governance, ideal for regulated industries. Google emphasizes simplicity and advanced analytics integration. Snowflake blends data warehouse and data lake functionalities, ensuring a seamless experience. Cloudera suits organizations with complex regulatory requirements and on-premises infrastructure.

| Provider | Integration Capabilities | Target Audience |

|---|---|---|

| Amazon | Rich integration for AWS ecosystem | Small teams, enterprise deployments |

| Microsoft | Strong enterprise features, governance | Regulated industries, Azure users |

| Google | Simple integration with analytics services | Advanced machine learning organizations |

| Snowflake | Blends warehouse and lake functionalities | Seamless integration seekers |

| Cloudera | Complex regulatory, on-premises support | Hybrid infrastructure organizations |

Selecting a provider with robust integration ensures smooth data flow and minimizes disruption during deployment.

Security

Security and compliance standards play a vital role in provider selection. Enterprises must verify that platforms meet the industry certifications and regulatory requirements that apply to them. Leading providers support certifications and regulations such as FedRAMP Ready, HIPAA, SOC 2 Type II, GDPR, and PCI DSS. These standards protect sensitive information and support legal compliance. Providers like AWS, Azure, Google Cloud, and Snowflake maintain rigorous security controls, including encryption, access management, and audit logging.

| Certification/Standard | Description |

|---|---|

| FedRAMP Ready | Federal security requirements for cloud services |

| HIPAA | Protects patient health information |

| SOC 2 Type II | Audits for security and privacy controls |

| GDPR | EU personal data privacy law |

| PCI DSS | Secure management of credit card data |

Organizations should match provider certifications with their own compliance needs to safeguard data assets.
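Matching certifications to compliance needs amounts to a set comparison. The following sketch assumes hypothetical providers ("Provider A", "Provider B") and a hypothetical list of required standards. Actual coverage must be confirmed against each vendor's current compliance documentation.

```python
# Standards this (hypothetical) organization must satisfy.
REQUIRED_STANDARDS = {"HIPAA", "SOC 2 Type II", "GDPR"}

# Placeholder provider data for illustration; not real vendor claims.
PROVIDER_STANDARDS = {
    "Provider A": {"HIPAA", "SOC 2 Type II", "GDPR", "PCI DSS"},
    "Provider B": {"SOC 2 Type II", "GDPR"},
}


def compliance_gaps(required, providers):
    """Map each provider to the sorted list of required standards it does not cover."""
    return {name: sorted(required - offered) for name, offered in providers.items()}


gaps = compliance_gaps(REQUIRED_STANDARDS, PROVIDER_STANDARDS)
compliant = [name for name, missing in gaps.items() if not missing]

for name, missing in gaps.items():
    status = "meets all requirements" if not missing else "missing " + ", ".join(missing)
    print(f"{name}: {status}")
```

Providers with an empty gap list make the shortlist; any remaining gap is a point to raise with the vendor before committing.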

Budget

Budget considerations influence the final decision when choosing among the best data lake providers for enterprise. Enterprises must evaluate storage, compute, ETL pipeline, and licensing costs. Data storage can become expensive as volumes grow. Processing and compute resources for ETL jobs require careful management. Licensing for proprietary tools may add hidden expenses. Cost optimization strategies include storage tiering, auto-scaling, scheduling ETL jobs, and using open-source technologies.

- Evaluate data storage and scalability needs based on data volume, velocity, and variety.

- Understand costs associated with different storage options, including storage fees and potential egress fees.

- Assess vendor reputation and support for ongoing needs.

- Implement storage tiering to reduce costs for less-accessed data.

- Schedule ETL jobs to run only when necessary.

- Use columnar formats with compression to save space and improve performance.

Decision-makers should compare total cost of ownership and seek providers that offer transparent pricing and cost-saving features.
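The effect of storage tiering on cost can be estimated before committing to a provider. The sketch below uses made-up per-GB monthly prices and a made-up data distribution; none of these numbers come from a vendor price list, and egress or retrieval fees are not modeled.

```python
# Hypothetical prices in USD per GB per month; replace with real quotes.
PRICE_PER_GB_MONTH = {"hot": 0.020, "cool": 0.010, "archive": 0.002}


def monthly_storage_cost(gb_by_tier, prices):
    """Sum the monthly cost of the data held in each storage tier."""
    return sum(gb * prices[tier] for tier, gb in gb_by_tier.items())


total_gb = 100_000

# Baseline: everything stays in the hot tier.
all_hot = monthly_storage_cost({"hot": total_gb}, PRICE_PER_GB_MONTH)

# Tiered: 20% frequently accessed, 30% occasionally accessed, 50% rarely accessed.
tiered = monthly_storage_cost(
    {"hot": 0.20 * total_gb, "cool": 0.30 * total_gb, "archive": 0.50 * total_gb},
    PRICE_PER_GB_MONTH,
)

savings_pct = (all_hot - tiered) / all_hot * 100
print(f"All hot: ${all_hot:,.2f}/month")
print(f"Tiered:  ${tiered:,.2f}/month")
print(f"Savings: {savings_pct:.0f}%")
```

The same function can be rerun with each provider's published tier prices and the organization's own access patterns to compare tiering options side by side.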

The providers compared here offer a range of strengths in both features and pricing. FineDataLink excels in low-code integration and real-time sync. AWS, Azure, and Google Cloud provide robust scalability and security. Snowflake and Apache Iceberg deliver advanced analytics and governance. Decision-makers should match provider features to business needs.

> Careful assessment of scalability, integration, security, and budget ensures the right choice for enterprise data management.

The Author

Howard

Data Management Engineer & Data Research Expert at FanRuan
